The vision of self-driving cars has long been a staple of futuristic dreams, promising a revolutionary transformation in transportation. We are on the cusp of a world where human error, the leading cause of countless tragic motor vehicle accidents, could be drastically reduced, traffic flows optimized, and efficiency elevated to unprecedented levels. This is not merely a technical advancement; it heralds a fundamental shift in how we conceive of mobility, safety, and personal autonomy.

Yet, as these sophisticated machines transition from the realm of science fiction into our everyday reality, they bring with them a unique and complex set of challenges that extend far beyond engineering prowess. The debate surrounding autonomous vehicles rapidly transcends mere technical reliability, delving deep into profound ethical and philosophical realms. We are now tasked with grappling with the intricate question of how to program morality into algorithms, particularly when these machines are confronted with life-or-death scenarios where harm is simply unavoidable.

These are not abstract thought experiments reserved for philosophers; they are tangible, pressing issues that engineers, policymakers, and society at large must confront and resolve. The decisions made today regarding the ethical frameworks embedded within self-driving cars will irrevocably shape our collective future on the roads, determining not just who lives and who dies, but also our very definition of responsibility and justice in an increasingly automated world. It is a journey into uncharted ethical waters, and understanding these dilemmas is the first step towards navigating them responsibly.

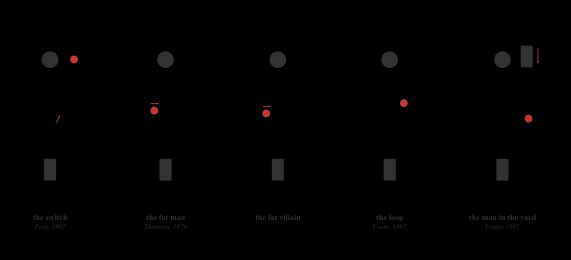

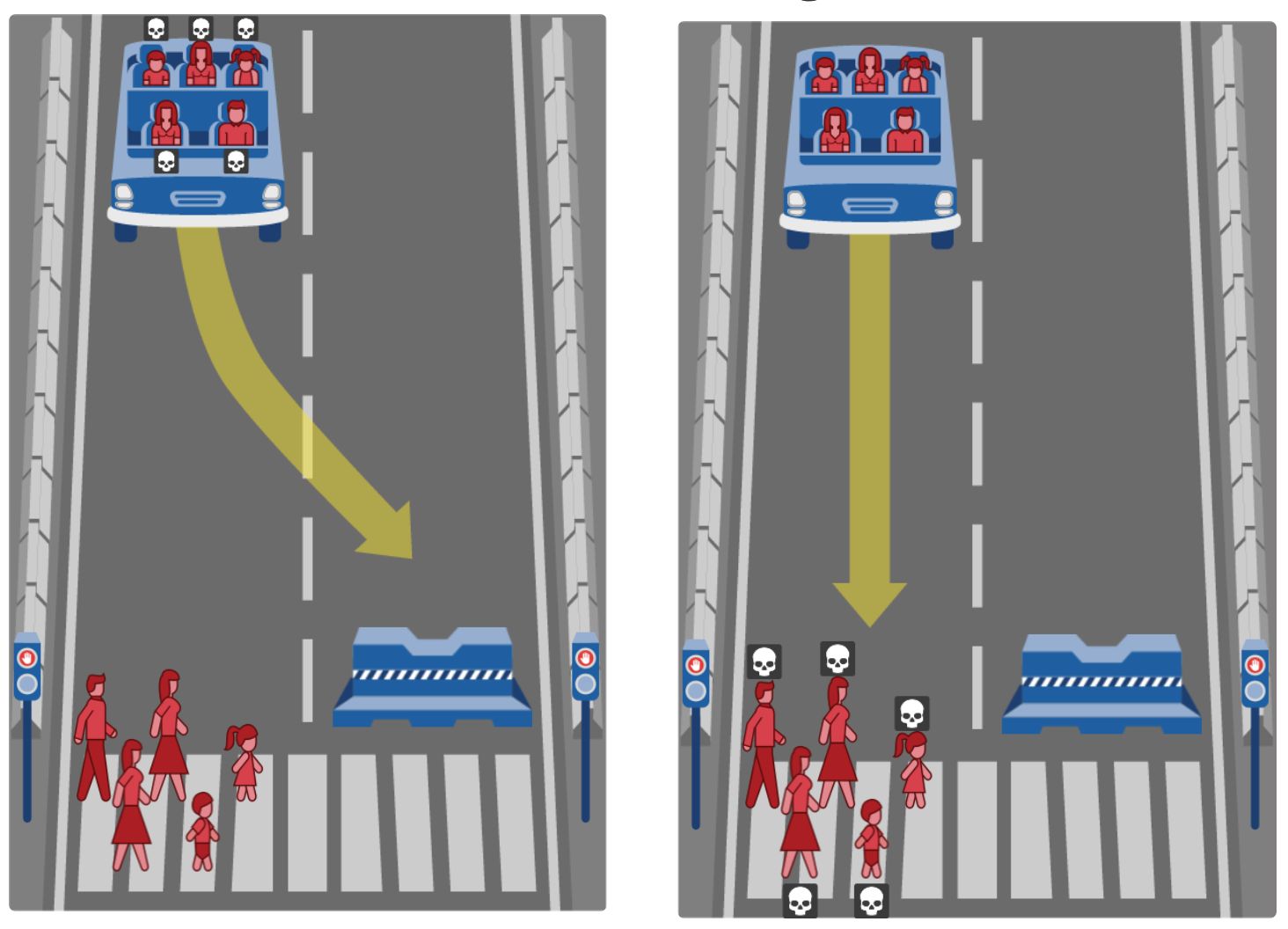

1. **The Unavoidable Accident: The Trolley Problem in Code** At the core of many ethical discussions surrounding self-driving cars lies a modern iteration of the classic “trolley problem,” a philosophical thought experiment that necessitates a choice between two undesirable outcomes. In the context of an autonomous vehicle, envision a scenario where the car, adhering to speed limits, suddenly encounters a child darting into its path. Despite the implementation of emergency braking, a collision is imminent. The sole alternative is to swerve, yet doing so would undoubtedly result in colliding with a dozen bystanders lining the road.

For human drivers, such split-second decisions are often instinctive, almost haphazard, and hindsight may absolve an imperfect choice made under overwhelming pressure. However, self-driving cars function based on pre-programmed algorithms; every decision, even in an emergency, is a calculated result of its underlying code. This implies that the choice to strike the child or the bystanders must be deliberately integrated into the system well before the accident ever transpires. It poses a fundamental question: should the car minimize overall harm, even if it entails sacrificing its own occupants, or safeguard its passengers at all costs? There is no universally accepted answer, and different cultures, legal systems, and individuals frequently hold divergent perspectives on the value of life, rendering this an exceptionally challenging dilemma for developers to encode.

2. **The Mandate for Impartiality: Prioritizing Lives in a Crisis** Expanding upon the inherent complexities of unavoidable accidents, a critical ethical dimension pertains to how self-driving cars are programmed to prioritize lives, if such prioritization is feasible at all. Should an autonomous vehicle render an impartial decision, or are there justifiable biases? Some contend that the most ethical approach is to program the car to choose whatever results in the least overall harm, effectively minimizing the number of lives lost. For instance, if a car is compelled to choose between saving a single child or a group of sexagenarians, the utilitarian calculus would propose saving the group, as it preserves a greater number of lives.

Germany has taken a pioneering stride in this regard, having become the first country to adopt specific regulations that mandate self-driving cars to prioritize human lives above all other factors and explicitly forbid distinctions based on personal characteristics such as age, gender, or physical constitution in unavoidable accident scenarios. While this offers a clear framework, the practical implementation of such impartiality encounters substantial obstacles. Human values are frequently subjective and inconsistent, and the very act of encoding a “value of life” model into an algorithm, even one aimed at minimizing harm, compels society to confront its deepest moral convictions in an entirely novel and uncomfortable manner. The subtleties of a specific situation, which a human might intuitively comprehend, are transformed into a cold calculation for an algorithm.
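To make that “cold calculation” concrete, here is a minimal sketch of an impartial harm-minimizing rule. It assumes, purely for illustration, that each candidate maneuver arrives with an estimate of how many people it would endanger. The names `Maneuver`, `people_at_risk`, and `choose_maneuver` are hypothetical and are not drawn from any real vehicle software or from the German rules. Because the only input is a head count, attributes such as age or gender cannot influence the outcome.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Maneuver:
    """A candidate action and a (hypothetical) estimate of people endangered."""
    name: str
    people_at_risk: int  # head count only; no age, gender, or other traits


def choose_maneuver(options: list[Maneuver]) -> Maneuver:
    """Return the option that endangers the fewest people.

    Ties are broken by list order, so the caller decides the default
    (for example, staying in lane) by listing it first.
    """
    if not options:
        raise ValueError("at least one maneuver is required")
    return min(options, key=lambda m: m.people_at_risk)


if __name__ == "__main__":
    # Illustrative numbers only, echoing the child-versus-bystanders scenario.
    options = [
        Maneuver("brake_in_lane", people_at_risk=1),
        Maneuver("swerve_right", people_at_risk=12),
    ]
    print(choose_maneuver(options).name)
```

Even this tiny rule embeds moral choices: it treats every person as one unit of harm, ignores uncertainty in the estimates, and relies on a tie-break that someone picked in advance.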

3. **The Blame Game: Unpacking Liability in a Driverless World** The transition from human-driven to autonomous vehicles gives rise to a profound legal and ethical void with regard to accountability for accidents. When an autonomous vehicle inflicts harm, the traditional liability frameworks, which typically allocate responsibility to the human driver, prove inadequate. This poses a crucial question: who is accountable for the death or damage caused by an autonomous vehicle? Is it the vehicle manufacturer, who designed the vehicle? Is it the software programmer, who authored the algorithms guiding its decisions? Or is it the autonomous vehicle itself, a machine devoid of true agency or moral culpability?

The complexity intensifies when taking into account the nuanced causes of accidents. While studies indicate that autonomous vehicles are generally more reliable than human-driven vehicles, and a reported 99% of accidents involving autonomous vehicles are attributed to human error (on the part of other drivers or pedestrians), there have been cases where the autonomous vehicle’s system itself was at fault. If a technical malfunction or a deficiency in the programming results in a collision, then the manufacturer or software developer may shoulder the primary responsibility. However, the precise delineation of culpability remains largely ambiguous, creating substantial uncertainty that could hinder public acceptance and the widespread adoption of this transformative technology. Governments are likely to be compelled to establish new legal precedents and regulatory frameworks to address these intricate issues of blame and compensation.

4. **The Human-Machine Handover: When Control Shifts** Many contemporary semi-autonomous vehicles, such as those manufactured by Tesla, operate under a pivotal stipulation: the human driver must stay alert, with hands on the steering wheel, ready to assume control at any moment. This hybrid model presents a distinctive and formidable ethical dilemma, particularly in emergency situations. If an accident transpires while the vehicle is in autonomous mode, yet the driver is anticipated to intervene, who genuinely assumes responsibility for the outcome? Is it the autonomous system that initiated the problematic sequence, or the human driver who did not respond decisively at the critical juncture?

The notion of a last-minute handover of control is rife with ethical hazards. Human reaction times, even for vigilant drivers, are not instantaneous and can be impaired by a multitude of factors, ranging from momentary distraction to cognitive overload during a sudden crisis. Expecting a human to effortlessly override a sophisticated AI system within milliseconds, often when the AI itself has failed to avert an accident, imposes an enormous and potentially inequitable burden on the individual. This scenario not only complicates the issue of liability but also raises a fundamental ethical query regarding the design philosophy itself: if the vehicle cannot reliably navigate a situation, is it truly ethical to transfer the ultimate life-or-death decision to a human at their most vulnerable instant, or does this merely postpone the fundamental moral programming challenge rather than resolve it?
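A rough calculation shows why a late handover is so demanding. The sketch below computes how far a car travels while the driver is still reacting, using distance = speed × time. The speeds and takeover times are illustrative assumptions rather than measured values, and `distance_during_takeover` is a hypothetical helper.

```python
def distance_during_takeover(speed_kmh: float, takeover_s: float) -> float:
    """Metres travelled at constant speed before the driver has taken over."""
    if speed_kmh < 0 or takeover_s < 0:
        raise ValueError("speed and time must be non-negative")
    speed_ms = speed_kmh / 3.6  # 1 m/s equals 3.6 km/h
    return speed_ms * takeover_s


if __name__ == "__main__":
    # Assumed values for illustration only.
    for speed_kmh in (50, 100):
        for takeover_s in (1.0, 2.5):
            metres = distance_during_takeover(speed_kmh, takeover_s)
            print(f"{speed_kmh} km/h, {takeover_s} s -> {metres:.1f} m")
```

Under these assumptions, a car at 100 km/h covers roughly 69 metres during a 2.5-second takeover, before the driver has even begun to brake or steer.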

5. **The Cybernetic Threat: Hacking and the Autonomous Car** Beyond the programming of ethical responses to unforeseen road incidents, self-driving cars bring about a daunting new category of ethical concern: the threat of malicious cyberattacks. Autonomous vehicles are, by their very essence, sophisticated computers on wheels, necessitating constant connectivity for updates, navigation, and uninterrupted operation. This inherent connectivity engenders a vulnerability to hacking, a risk that has already materialized in non-autonomous vehicles, where criminals have exhibited the capability to remotely access and control car systems. With fully autonomous cars, the stakes are significantly elevated.

Envision a scenario where a cybercriminal acquires unauthorized access to a self-driving car’s system, not merely to pilfer the vehicle, but to seize control of it for malevolent purposes—perhaps to deliberately cause an accident, incriminate the driver, or even transform the vehicle itself into a weapon. In such a harrowing event, the question of responsibility becomes even more intricate than in a conventional accident. Is the cybercriminal solely culpable? Does some responsibility lie with the driver, whose safety was jeopardized? Or is the car manufacturer accountable for failing to sufficiently safeguard the vehicle against such sophisticated threats? The escalating rates of cybercrime and the potentially catastrophic repercussions of a hacked autonomous vehicle prompt some to contend that these heightened security risks may ethically outweigh the purported benefits of reduced human error, casting a substantial shadow over the widespread adoption of driverless technology.

6. **Who Programs Morality? The Deciders of Ethical Algorithms** Given the far-reaching implications of autonomous vehicle decision-making, a pivotal and fiercely debated ethical question arises: who holds the legitimate authority to endow these machines with a moral code? Currently, the ethical parameters of self-driving cars are predominantly determined by the engineers and developers who are formulating the underlying technology. Their values, their interpretations of right and wrong, and their design decisions directly dictate how a car will behave in critical situations, including accidents.

However, numerous individuals contend that such a crucial responsibility should not lie solely with a single professional group. Is it truly fitting for engineers, no matter how proficient, to unilaterally determine the ethical algorithms that could decide the fate of occupants and pedestrians alike? Should this power instead be vested in government bodies, which are entrusted with safeguarding public safety and upholding societal values through legislation? Or, perhaps, should the ultimate ethical decision-making power rest with the individual driver, enabling them to customize their car’s ethical settings? A compelling argument holds that no single entity is the right one to decide the ethics of self-driving cars, which implies that the decision must ultimately rest on a far broader societal consensus, reflecting diverse cultural, legal, and personal moral viewpoints rather than a singular technical or governmental edict.

7. **Public Opinion as a Moral Compass: Navigating Diverse Values** The question of who is responsible for programming morality inevitably gives rise to the intricate interplay between public sentiment and algorithmic design. If engineers alone are unable to shoulder this burden, then how does society’s collective moral compass steer the development of these systems? Researchers have actively endeavored to address this issue by devising moral tests to assess public opinion on the ethical decisions that self-driving cars ought to make, acknowledging that societal values must ultimately be embodied in these sophisticated machines. This process recognizes that the moral judgments embedded in code will entail far-reaching societal repercussions, rendering widespread public acceptance of paramount importance for adoption.

One prominent example is MIT’s Moral Machine, an online platform that presents users with a sequence of ethical dilemmas involving self-driving cars. Participants are required to determine whom the vehicle should prioritize in scenarios such as having to choose between hitting a group of pedestrians or swerving and potentially injuring passengers. The invaluable data gathered from such platforms aids developers in comprehending how different individuals and cultures approach these life-or-death moral decisions, exposing the profound disparities that exist globally. This underscores a pivotal point: there is no universal moral code, and what is deemed acceptable in one region may be contentious in another.

The outcomes of these moral tests consistently reveal significant variations in opinions based on geographical and cultural factors, highlighting the necessity of taking these diverse perspectives into account when designing autonomous vehicle algorithms. For instance, the published Moral Machine results suggest that respondents in Western countries favored sparing younger people over older people more strongly than respondents in many Eastern countries did, while other preferences, such as sparing more lives rather than fewer, were reported to be more consistent across cultures. However, relying solely on public opinion is not without its challenges; public views can be inconsistent, change over time, and run the risk of enabling the majority to dictate decisions that have individual life-or-death consequences, potentially marginalizing minority perspectives.
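The tension between aggregate preferences and minority views can be sketched with a simple tally. The responses below are invented placeholders, not Moral Machine data, and the region labels and the `majority_by_region` helper are hypothetical. The point is only that a per-region majority rule yields different answers in different places and discards the dissenting share entirely.

```python
from collections import Counter, defaultdict


def majority_by_region(responses):
    """Map each region to (winning choice, share of votes for that choice).

    `responses` is an iterable of (region, choice) pairs.
    """
    tallies = defaultdict(Counter)
    for region, choice in responses:
        tallies[region][choice] += 1

    result = {}
    for region, counts in tallies.items():
        choice, votes = counts.most_common(1)[0]
        result[region] = (choice, votes / sum(counts.values()))
    return result


if __name__ == "__main__":
    # Invented placeholder responses, NOT real survey results.
    responses = (
        [("region_a", "spare_pedestrians")] * 6
        + [("region_a", "spare_passengers")] * 4
        + [("region_b", "spare_pedestrians")] * 3
        + [("region_b", "spare_passengers")] * 7
    )
    for region, (choice, share) in majority_by_region(responses).items():
        print(f"{region}: {choice} ({share:.0%} of responses)")
```

In this toy data, 40% of one region and 30% of the other would see their preference overridden, which is the marginalization risk in miniature.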

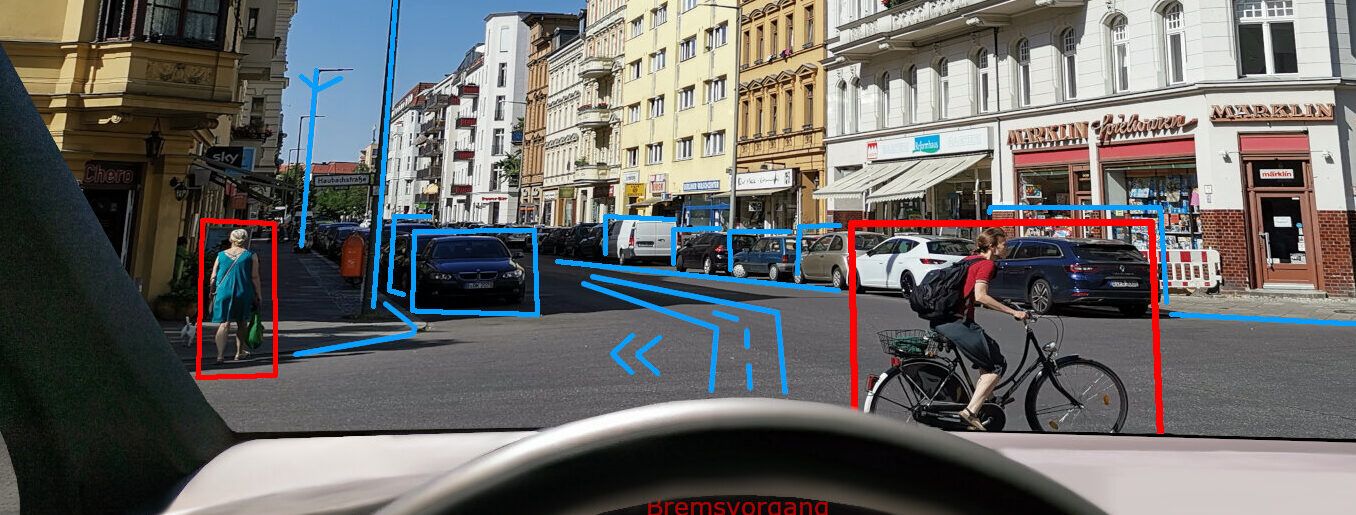

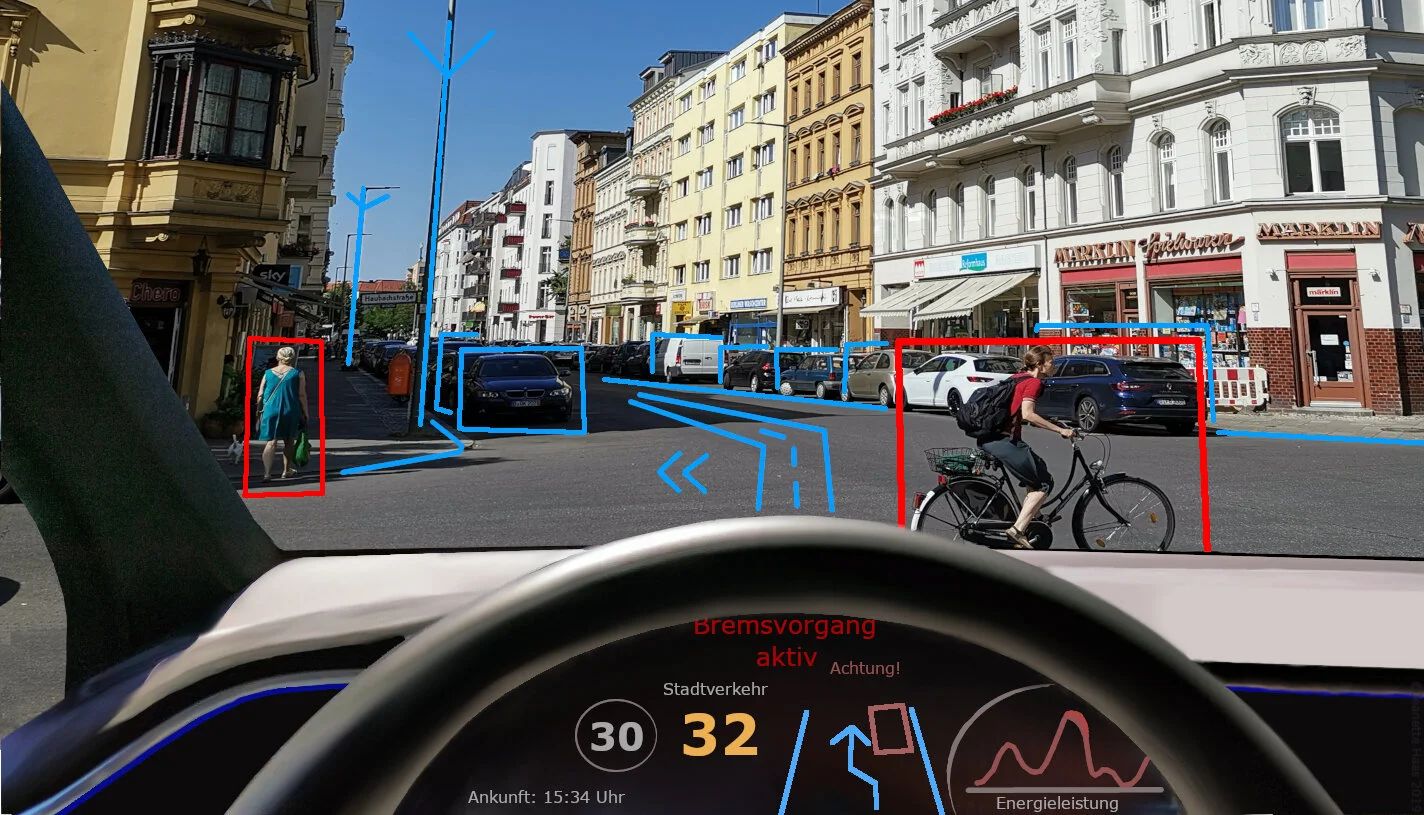

8. **The Imperative of Transparency: Unveiling Algorithmic Decisions** In the intricate terrain of autonomous vehicle ethics, the concept of transparency emerges as a cornerstone for establishing public trust and ensuring accountability. As self-driving cars become increasingly sophisticated, their decision-making processes, especially in critical situations, often remain inscrutable to the average individual and even to regulators. The imperative at present is to transcend mere technical functionality and to disclose how these complex AI systems reach their conclusions, particularly when confronted with moral dilemmas. This is not merely about demonstrating what the car “observes” but about elucidating the rationale underlying its “choices.”

Algorithmic transparency functions as a vital conduit between the intricate realm of artificial intelligence and the public’s need for reassurance. By enabling regulators, ethicists, and the public to comprehend the underlying logic of a vehicle’s system in critical situations, it cultivates a much-needed sense of trust. Publicly accessible testing results, which illustrate how cars respond to challenging scenarios, can shed light on the reasoning behind certain decisions and assist in demystifying these advanced technologies. This transparency is of paramount importance for ensuring that the ethical frameworks embedded in the code are in alignment with societal expectations and legal requirements, moving away from a black-box approach.

Without clear elucidations of how autonomous vehicles are programmed to prioritize, allocate risk, or respond to unforeseen events, public skepticism will undoubtedly endure. Transparency can aid in averting ethical concerns by demystifying the technology and ensuring that its operations are not only effective but also morally justifiable. It pertains to providing an open window into the “mind” of the self-driving car, allowing for scrutiny, discussion, and continuous improvement based on a shared understanding of its ethical parameters. This open approach is indispensable for facilitating informed public discourse and securing the necessary societal endorsement for this transformative technology.

9. **Building Consensus: The Role of Collaboration in Regulation** The development of autonomous vehicles, given their profound ethical implications, constitutes a task of such significance that it cannot be handled in isolation by any single entity. Rather, it necessitates a concerted and multifaceted approach entailing robust collaboration among governments, private companies, and the public. This collaborative model is not merely advantageous; it is indispensable for formulating comprehensive and widely accepted standards that can effectively tackle the myriad ethical dilemmas inherent in self-driving technology. Such a partnership recognizes that moral decisions embedded in code must mirror broad societal values, rather than solely the commercial interests of developers or manufacturers.

Governments, leveraging their legislative and regulatory powers, assume a pivotal role in establishing frameworks that ensure self-driving cars comply with stringent ethical and safety standards prior to their deployment on public roads. This encompasses conducting comprehensive testing on how autonomous vehicles handle complex crash scenarios and guaranteeing adherence to established ethical guidelines, such as those that might prioritize human lives above all other factors, as exemplified in Germany. These regulatory standards furnish a necessary baseline for safety and ethical conduct, averting a patchwork of conflicting rules and fostering a predictable environment for both developers and consumers.

Furthermore, the global nature of automotive technology mandates the formulation of international guidelines. Autonomous cars will operate across diverse countries, each with distinct legal systems, cultural norms, and ethical expectations. Organizations such as the United Nations could play a pivotal role in instituting universal regulations for autonomous vehicles, promoting consistent ethical standards across borders and facilitating seamless cross-border operation. This international collaboration guarantees that ethical considerations are addressed on a scale commensurate with the technology’s global reach, providing a unified approach to these intricate challenges and fostering widespread public acceptance.

10. **Beyond the Road: The Looming Specter of Job Displacement** While a substantial portion of the ethical discourse surrounding self-driving cars focuses on immediate accident scenarios, their societal impact extends well beyond the road itself. One of the most significant and frequently debated social consequences is the potential for widespread job displacement. The emergence of fully autonomous vehicles poses a direct threat to millions of jobs currently occupied by professional drivers, including truck drivers, taxi and rideshare operators, and delivery workers. As the technology matures and becomes increasingly reliable, the demand for human drivers in these sectors could decline sharply, raising profound ethical questions regarding economic equity and social responsibility.

This potential for job loss poses a substantial ethical dilemma for society. While the efficiency and safety advantages of autonomous vehicles are undeniable, the human cost of such a transition cannot be overlooked. How should society and the companies pioneering this technology address the economic dislocation of a large segment of the workforce? There exists an ethical obligation to consider the well-being of those whose livelihoods might be rendered obsolete. This encompasses discussions around comprehensive social safety nets, retraining initiatives, and new economic opportunities to assist displaced workers in adapting to a future economy where autonomous systems undertake tasks previously performed by humans.

The challenge is not merely about individual job losses but also about the ripple effects across communities and economies. Truck driving, for instance, is a cornerstone industry in many regions, supporting entire ecosystems of related businesses. The ethical implications extend to how companies balance innovation and profit with their societal responsibility to minimize harm to human populations. This necessitates proactive planning and investment in workforce transition strategies, ensuring that the benefits of technological advancement are distributed equitably and that a significant portion of the population is not left behind in the course of progress.

11. **Data Trails and Trust: Addressing Privacy Concerns** The widespread adoption of self-driving cars heralds a new frontier of privacy concerns, which far surpasses those ever posed by traditional vehicles. These sophisticated machines are, in essence, data-gathering platforms that continuously collect vast quantities of information. This encompasses not only granular details regarding a passenger’s location and driving habits but also potentially sensory data from within the vehicle, such as conversations or biometric information. The immense volume and sensitivity of this data give rise to critical ethical questions concerning its collection, storage, utilization, and security, thereby creating a new digital footprint for each journey.

Ethical frameworks must address issues such as who owns this data, how it is safeguarded against misuse, and the degree to which it can be shared with third parties, including law enforcement agencies or insurance companies. Companies engaged in the development of autonomous vehicles shoulder a substantial ethical responsibility to be entirely transparent about their data collection practices and to strictly adhere to existing and upcoming privacy laws. Without explicit consent and robust protective measures, the constant surveillance capabilities of self-driving cars could undermine individual privacy, transforming personal travel into an open book for corporations and authorities. This creates a trust deficit that may impede public acceptance.

The ethical dilemma resides in striking a balance between the benefits of data collection—such as enhancing safety, optimizing routes, and improving user experience—and the fundamental right to privacy. The potential for data breaches, unauthorized access, or the compilation of personal travel patterns to create detailed profiles looms large, raising the specter of surveillance capitalism on wheels. Therefore, a proactive ethical approach is required to ensure that self-driving cars enhance mobility without compromising the fundamental privacy of their occupants. This necessitates clear policies, robust encryption, and independent oversight to establish and uphold public trust in this data-intensive technology.

12. **Ensuring Equitable Access and Minimizing Ecological Footprint** Beyond immediate safety and privacy concerns, autonomous vehicles also pose substantial ethical challenges pertaining to accessibility, equity, and their broader environmental footprint. While self-driving cars offer immense potential for enhancing mobility for populations currently underserved, such as the elderly, disabled, or visually impaired, there exists a distinct risk that this technology could intensify existing societal inequalities. The high cost associated with early-stage autonomous vehicles, coupled with potential infrastructure requirements, could create a divide where only affluent individuals or communities can fully reap the benefits, leaving others behind.

Ethical considerations mandate that the transition to autonomous transportation be managed in a manner that fosters equitable access rather than widening the gap. This entails actively contemplating policies that ensure affordability, universal design, and widespread deployment, thereby preventing a situation where advanced mobility becomes a luxury rather than a public good. Collaborative endeavors involving city planners, policymakers, and community members are of paramount importance to integrate these vehicles into urban landscapes ethically, ensuring that all stakeholders, including pedestrians and cyclists, have a voice in how these vehicles operate and are accommodated on public roads.

Furthermore, while electric self-driving vehicles have the potential to significantly reduce greenhouse gas emissions, their widespread adoption may give rise to other environmental concerns. For instance, people may come to rely on autonomous vehicles for short, frequent trips, and the vehicles themselves may travel without passengers between rides (a phenomenon known as “empty mileage” or “deadheading”). Both effects could increase traffic, energy consumption, and congestion, thereby offsetting some of the environmental benefits. The ethical challenge here lies in designing and deploying autonomous systems that genuinely minimize the ecological footprint, taking into account not only tailpipe emissions but also the full lifecycle impact of manufacturing, energy infrastructure, and potential changes in travel behavior. This necessitates a holistic approach to ensure that the future of mobility is both accessible and sustainable.

The exploration of the ethical landscape of self-driving cars reveals a terrain far more intricate than initial technological promises suggested. From the immediate, life-or-death calculations in unavoidable accidents to the profound societal shifts in employment, privacy, and equity, these dilemmas require far more than mere engineering solutions. They demand a continuous, evolving dialogue among technologists, ethicists, policymakers, and the public to forge a moral code that can truly guide these intelligent machines. As autonomous vehicles transition from a futuristic concept to everyday reality, our collective responsibility is to ensure that this revolutionary technology serves humanity’s highest values, creating a future that is not only efficient and safe but also just, transparent, and universally beneficial. The road ahead is long, but by addressing these “impossible” dilemmas with thoughtful engagement, we can indeed prepare for a more ethically sound future.