In the ever-accelerating march of technological progress, few fields ignite the imagination quite like quantum mechanics. It is a realm that challenges the very fabric of our intuitive understanding, governing the behavior of matter and energy at scales so minuscule—the atomic and subatomic levels—that their rules seem alien to our everyday experience. Yet, despite its profound complexity and often abstract nature, this enigmatic domain has proven remarkably successful in unraveling the universe’s most fundamental workings, paving the way for revolutionary technologies that are now integral to our lives, from semiconductors and lasers to advanced medical imaging. It stands not merely as a scientific theory, but as a lens through which we glimpse the deeper, stranger realities of existence, pushing the boundaries of what is conceivable in computing and beyond.

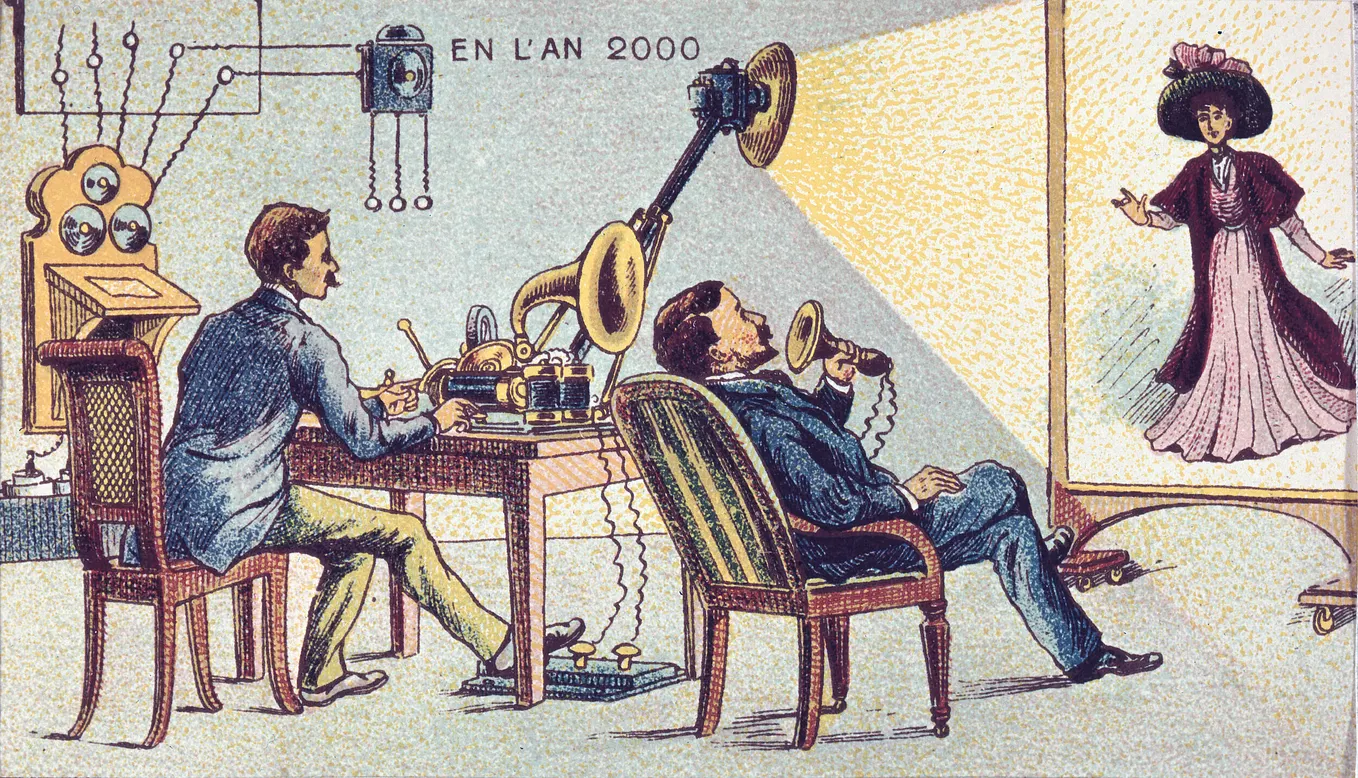

This revolutionary paradigm emerged in the early 20th century, born from the perplexing observations that classical physics, with its elegant Newtonian mechanics and predictable electromagnetism, simply could not reconcile. Scientists, grappling with phenomena like the mysterious emissions of blackbody radiation and the curious behavior of light, found themselves at a crossroads. A new theoretical framework was desperately needed to account for the strange results observed in experiments involving microscopic particles, leading to the groundbreaking idea that energy is not a continuous flow but is instead emitted or absorbed in discrete, indivisible packets. This radical notion of quantization marked the pivotal first step in what would become the sprawling and transformative journey of quantum mechanics.

Today, the insights gleaned from these early discoveries are more pertinent than ever, particularly as we stand on the cusp of a new computational era. Companies like Google are not just theorizing about quantum possibilities; they are actively engineering devices, such as the advanced Willow chip, that harness these fundamental quantum principles to achieve computational feats previously deemed impossible. This article embarks on an in-depth exploration of the core concepts that define the quantum world, tracing its historical genesis and unpacking the profound implications of its most foundational ideas. We will discover how these principles are not only reshaping our scientific understanding but are also actively being manipulated within cutting-edge quantum processors to unlock unprecedented capabilities, propelling us towards a future where the impossible becomes routine.

1. **The Quantum: A Fundamental Concept**

At the very heart of quantum mechanics lies the concept of the “quantum” itself. In physics, a quantum, or quanta in its plural form, represents the absolute minimum amount of any physical entity—any physical property, for that matter—that can be involved in an interaction. This foundational notion, that a property can only exist in discrete, indivisible units rather than as a continuous spectrum, is formally known as “the hypothesis of quantization.” It fundamentally alters our perception of reality, moving away from infinitely divisible quantities to distinct, measurable packets of existence.

What this implies is that the magnitude of a given physical property is not arbitrary; it can only adopt specific, discrete values. In the simplest cases, such as the energy carried by light of a single frequency, these values are integer multiples of one fundamental quantum unit; in others, such as the energy levels of an atom, the allowed values are discrete but not evenly spaced. Imagine a staircase where you can only stand on individual steps, not anywhere in between; quantized properties behave similarly, existing only at specific, allowed levels or states. This principle appears throughout the quantum realm, dictating how particles behave and interact at the most granular levels of the universe.

Perhaps the most widely recognized example of this principle is the photon. A photon is a single quantum of light, or of any other form of electromagnetic radiation, and its energy is set by the radiation’s frequency. Similarly, the energy of an electron bound within an atom is quantized: it cannot possess just any amount of energy, but is restricted to certain discrete values. This quantization of electron energy levels is not merely a theoretical curiosity; it is the fundamental reason why atoms, and indeed matter in general, are stable. Classical physics predicts that an orbiting electron would continuously radiate energy and spiral into the nucleus; discrete energy levels, with a lowest allowed state, prevent that collapse. This foundational concept underpins much of quantum mechanics and is crucial for understanding how energy and matter interact.
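
To make “a single quantum of light” concrete, the short sketch below computes the energy of one photon from the standard relation E = hν = hc/λ. The function names and the 500 nm example wavelength are chosen here purely for illustration.

```python
# Exact SI values of the constants (fixed by the 2019 SI redefinition).
PLANCK_H = 6.62607015e-34         # Planck constant, joule-seconds
LIGHT_C = 299_792_458.0           # speed of light, metres per second
ELECTRON_VOLT = 1.602176634e-19   # joules per electronvolt


def photon_energy_joules(wavelength_m: float) -> float:
    """Energy of one photon: E = h * nu = h * c / wavelength."""
    return PLANCK_H * LIGHT_C / wavelength_m


def photon_energy_ev(wavelength_m: float) -> float:
    """Same energy expressed in electronvolts."""
    return photon_energy_joules(wavelength_m) / ELECTRON_VOLT


if __name__ == "__main__":
    green = 500e-9  # illustrative wavelength: green light, 500 nm
    print(f"One 500 nm photon carries about {photon_energy_ev(green):.2f} eV")
```

Light of that color can hand over energy only in whole multiples of this amount, which is the staircase picture in numerical form.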

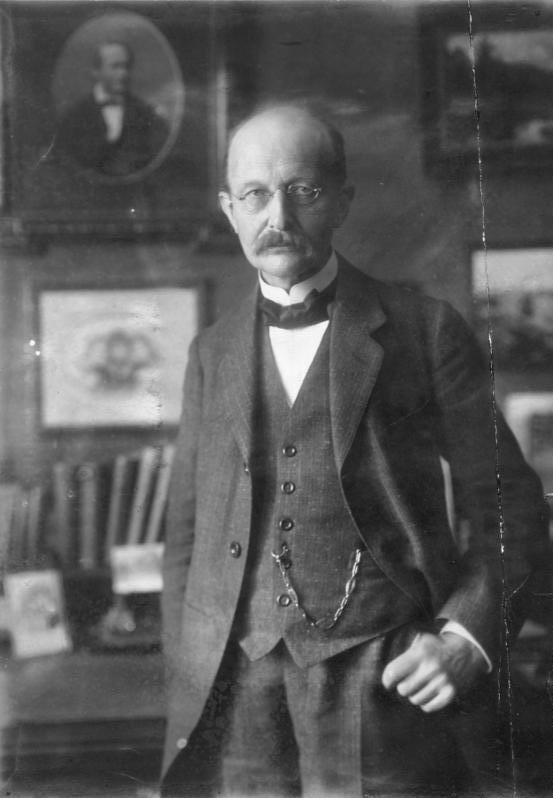

2. **Max Planck and the Birth of Quantization**

The modern concept of the quantum, as we understand it in physics, has a precise origin point in history. It dates back to December 14, 1900, a day when the German physicist Max Planck presented his groundbreaking findings to the German Physical Society. Planck’s work was revolutionary because he successfully demonstrated that by modeling harmonic oscillators as entities with discrete energy levels, he could finally resolve a perplexing and longstanding problem that had plagued the theory of blackbody radiation for years. His insight profoundly altered the scientific landscape, laying the groundwork for a completely new understanding of energy.

Intriguingly, in his initial report, Planck did not employ the term “quantum” in the exact modern sense we use today. Instead, he opted for the term *Elementarquantum* when referring to what he called the “quantum of electricity,” which we now commonly recognize as the elementary charge. For the smallest unit of energy itself, he used the term *Energieelement*, or “energy element,” rather than directly labeling it a quantum. This linguistic nuance highlights the nascent stage of the concept, as scientists were still grappling with the language to describe these newly discovered, fundamental units of nature.

Not long after, in a paper published in the prestigious *Annalen der Physik*, Planck formalized his discovery even further. It was in this seminal work that he introduced a constant, universally denoted as ‘h’, which he famously termed the “quantum of action” (*elementares Wirkungsquantum*) in 1906. This constant would become inextricably linked to his name, now known globally as the Planck constant, a cornerstone of quantum theory. His meticulous research also yielded more precise values for the elementary charge and the Avogadro–Loschmidt number, which quantifies the number of molecules in one mole of substance. The profound significance of Planck’s theory was recognized with the highest honor, as he was deservedly awarded the Nobel Prize in Physics in 1918 for his monumental discovery.

3. **Albert Einstein’s “Light Quanta”**

While Max Planck laid the groundwork for quantization, it was another titan of physics, Albert Einstein, who dramatically expanded upon Planck’s initial insights and deepened our understanding of the quantum nature of light. In 1905, the year now known as his annus mirabilis, Einstein put forth a radical hypothesis: electromagnetic radiation does not travel purely as a continuous wave, but instead exists in spatially localized packets. He referred to these discrete packets as “quanta of light” (*Lichtquanta*). This was a revolutionary proposition that directly challenged the classical wave theory of light, which had dominated scientific thought for roughly a century.

Einstein’s genius lay in his ability to leverage this bold hypothesis to recast Planck’s earlier treatment of the blackbody problem. By viewing light as composed of these discrete quanta, he was able to develop a formulation that not only aligned with Planck’s work but also provided a clear and compelling explanation for the voltages observed in Philipp Lenard’s experiments on the photoelectric effect. This effect, where light striking a metal surface causes electrons to be ejected, had been a stubborn puzzle for classical physics. Einstein showed that it was the individual photons—these “quanta of light”—transferring their energy to the electrons that caused their ejection, rather than the intensity of a continuous wave.

This groundbreaking work solidified the idea that light possessed both wave-like and particle-like properties, a concept that would become known as wave-particle duality. Shortly after Einstein’s pivotal paper, the term “energy quantum” was formally introduced to represent the quantity *hν*, where *h* is Planck’s constant and *ν* (nu) is the frequency of the radiation. Einstein’s explanation of the photoelectric effect, for which he would later receive the Nobel Prize, was a definitive turning point, firmly embedding the concept of light quanta into the very fabric of modern physics and forever altering our perception of the fundamental nature of light and energy.
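
Einstein’s account of the photoelectric effect can be sketched numerically. Each photon delivers energy *hν*; an electron escapes only if that exceeds the metal’s work function, and the surplus sets the stopping voltage. The 2.3 eV work function used below is an illustrative assumption, not a value taken from Lenard’s experiments, and the function name is hypothetical.

```python
PLANCK_H = 6.62607015e-34            # Planck constant, joule-seconds
ELEMENTARY_CHARGE = 1.602176634e-19  # coulombs (also joules per electronvolt)


def stopping_voltage(frequency_hz: float, work_function_ev: float) -> float:
    """Stopping voltage in volts for light of the given frequency.

    Uses K_max = h * nu - work_function. Expressed in electronvolts, the
    maximum kinetic energy equals the stopping voltage in volts.
    Returns 0.0 when the photon energy is below the work function,
    meaning no electrons are ejected no matter how intense the light is.
    """
    photon_ev = PLANCK_H * frequency_hz / ELEMENTARY_CHARGE
    return max(photon_ev - work_function_ev, 0.0)


if __name__ == "__main__":
    assumed_work_function = 2.3  # eV, illustrative value only
    for freq in (4.0e14, 1.0e15):
        volts = stopping_voltage(freq, assumed_work_function)
        print(f"{freq:.1e} Hz -> stopping voltage {volts:.2f} V")
```

The result depends on frequency and not on intensity, which is the feature a continuous-wave picture could not explain.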

4. **Wave-Particle Duality: The Dual Nature of Reality**

One of the most profoundly mind-bending and counter-intuitive aspects of quantum mechanics is the principle of wave-particle duality. This concept shatters our everyday understanding of what constitutes a ‘particle’ or a ‘wave’ by suggesting that fundamental entities like electrons and photons can exhibit characteristics of both, depending entirely on how they are observed or interacted with. It’s a phenomenon that forces us to reconcile two seemingly contradictory descriptions of reality into a unified, albeit strange, framework.

Consider, for instance, the electron. When an electron collides with another object, it behaves precisely as one would expect a discrete particle to act, transferring momentum and energy in a localized fashion. However, when these same electrons are directed through tiny slits, they astonishingly behave like waves, spreading out and creating an interference pattern—a signature characteristic of waves overlapping and reinforcing or canceling each other out. This dual behavior isn’t limited to electrons; photons, the quanta of light that Einstein described, also exhibit this perplexing split personality, acting as particles in some contexts and waves in others.

The most famous demonstration of this phenomenon is the double-slit experiment. In this ingenious setup, particles such as electrons or photons, when left unobserved, generate an interference pattern on a detector screen, much like water waves passing through two openings. This clearly indicates wave-like behavior. Yet, the moment a measurement is introduced, attempting to determine which slit the particle passes through, the wave-like behavior instantaneously collapses. The particles then behave as discrete entities, creating two distinct bands on the detector, as if they had chosen a single path. This radical shift in behavior based on observation has immense implications, leading to deep philosophical questions: are particles truly both waves and particles simultaneously, or does the very act of observation fundamentally alter their nature, shaping reality itself?
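
The difference between the two outcomes can be sketched with complex amplitudes. When the paths are indistinguishable, the amplitudes from the two slits are added before squaring, which produces bright and dark fringes; when which-path information exists, the probabilities are added instead and the fringes vanish. This is a deliberately minimal model: equal unit amplitudes per slit and no single-slit diffraction envelope are assumptions made here.

```python
import cmath
import math


def screen_intensities(phase_difference: float) -> tuple[float, float]:
    """Return (coherent, which_path) intensities at one point on the screen.

    phase_difference is the phase lag between the two paths in radians.
    """
    amp_slit_1 = 1.0 + 0j
    amp_slit_2 = cmath.exp(1j * phase_difference)
    # Unobserved: amplitudes interfere, then we square the magnitude.
    coherent = abs(amp_slit_1 + amp_slit_2) ** 2
    # Path measured: probabilities add, no interference term survives.
    which_path = abs(amp_slit_1) ** 2 + abs(amp_slit_2) ** 2
    return coherent, which_path


if __name__ == "__main__":
    for label, phase in (("bright fringe", 0.0), ("dark fringe", math.pi)):
        coherent, which_path = screen_intensities(phase)
        print(f"{label}: unobserved={coherent:.2f}, observed={which_path:.2f}")
```

In this toy model the unobserved intensity swings between 4 and 0 as the phase changes, while the observed intensity stays flat at 2.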

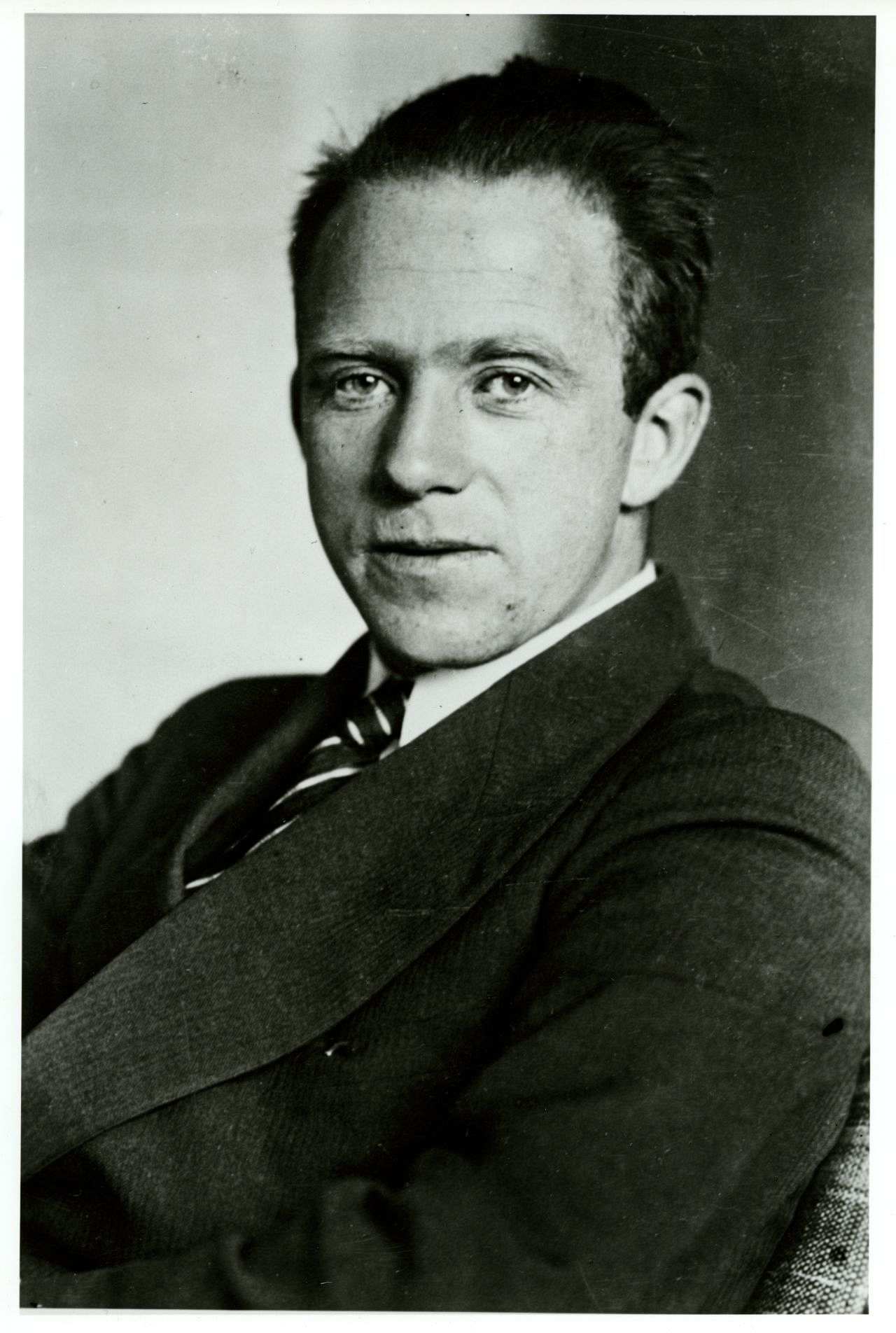

5. **Heisenberg’s Uncertainty Principle: Limits to Precision**

Further deepening the enigmatic nature of the quantum realm is Werner Heisenberg’s uncertainty principle, formulated in 1927. This cornerstone of quantum mechanics introduces a fundamental constraint on our ability to precisely know certain pairs of physical properties of a particle simultaneously. It asserts that for specific conjugate variables, such as a particle’s position and its momentum, there is an inherent, inescapable limit to how accurately both can be measured at the same time. This is not a limitation of our measuring instruments, but rather a profound characteristic of nature itself at the quantum scale.

The principle dictates an inverse relationship between the precision of these paired measurements. In simpler terms, the more accurately we endeavor to measure one of these properties, for instance, a particle’s exact position, the less accurately we can simultaneously determine its corresponding property, in this case, its momentum. Conversely, if we pinpoint a particle’s momentum with high precision, our knowledge of its precise location becomes inherently fuzzy and uncertain. This isn’t about human error or technological shortcomings; it’s a statement about the intrinsic nature of quantum reality, where perfect knowledge of certain complementary properties is simply unattainable.
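
The trade-off has a precise form: the product of the position and momentum uncertainties can never fall below ħ/2, where ħ is the Planck constant divided by 2π. As a rough sketch, the code below takes an electron confined to about one ångström (an illustrative, atom-sized figure chosen here) and computes the smallest momentum and velocity spread the principle allows.

```python
HBAR = 1.054571817e-34          # reduced Planck constant, joule-seconds
ELECTRON_MASS = 9.1093837015e-31  # kilograms


def min_momentum_uncertainty(position_uncertainty_m: float) -> float:
    """Smallest momentum spread allowed by dx * dp >= hbar / 2."""
    return HBAR / (2.0 * position_uncertainty_m)


def min_velocity_uncertainty(position_uncertainty_m: float,
                             mass_kg: float = ELECTRON_MASS) -> float:
    """Corresponding velocity spread, using dp = m * dv (non-relativistic)."""
    return min_momentum_uncertainty(position_uncertainty_m) / mass_kg


if __name__ == "__main__":
    dx = 1e-10  # metres; roughly the size of an atom (illustrative)
    print(f"dp >= {min_momentum_uncertainty(dx):.3e} kg*m/s")
    print(f"dv >= {min_velocity_uncertainty(dx):.3e} m/s")
```

Halving the position uncertainty doubles the minimum momentum uncertainty, which is the inverse relationship described above.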

The uncertainty principle carries profound implications for our classical intuitions about reality. In classical physics, it was theoretically possible, given enough information and sufficiently precise instruments, to know everything about a system with infinite accuracy. The quantum world, however, shatters this deterministic ideal. It suggests that there is a fundamental, irreducible limit to the amount of information we can simultaneously gather about a quantum system. This intrinsic fuzziness means that the universe at its most fundamental level is not as predictable or precisely defined as classical physics once led us to believe, introducing an element of inherent probability into its very fabric.

6. **Superposition: Existing in Multiple States**

Another central tenet of quantum mechanics is the concept of superposition. This principle holds that a quantum system can exist in a combination of multiple states simultaneously until it is measured. It’s a radical departure from classical physics, where an object is always in a definite state at any given time. In the quantum domain, a particle can effectively occupy a weighted blend of its possible states at once, until a measurement collapses this blend of probabilities into a single, definite outcome. In the standard account, the measurement need not involve a conscious observer; any interaction that records the state, such as a detector, is enough.

The quintessential illustration of this mind-bending idea is Schrödinger’s famous thought experiment involving a cat in a sealed box. Inside the box, the cat’s fate is linked to a quantum event with a 50% chance of occurring. According to superposition, before the box is opened and the cat is observed, the cat exists in a combined state of being both alive and dead simultaneously. Only when an observer opens the box does the quantum state “collapse,” revealing the cat in one definite state, either alive or dead. Although the cat is a macroscopic example, the thought experiment brilliantly highlights the bizarre nature of quantum states before measurement.

Far from being a mere theoretical curiosity, superposition has real-world consequences and is a driving force behind emerging technologies. Perhaps its most celebrated application is in the burgeoning field of quantum computing. Here, traditional bits, which can only represent a 0 or a 1 at any given time, are replaced by quantum bits, or qubits. Thanks to superposition, a single qubit can exist in a state of 0, 1, or a weighted combination of both. For certain problems, algorithms that exploit superposition together with interference and entanglement can run dramatically faster than the best known classical methods. A measurement still yields only one outcome, so the speedup comes from carefully arranged interference rather than from simply reading out many answers in parallel. Superposition is one of the pivotal reasons why quantum mechanics holds the potential to revolutionize computing, cryptography, materials science, and other fields.
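
A qubit’s state can be sketched as a pair of amplitudes, one for 0 and one for 1, whose squared magnitudes give the measurement probabilities. The minimal simulation below uses the standard Hadamard gate to put a qubit into an equal superposition; the helper names are hypothetical and no quantum library is involved.

```python
import math
import random

Qubit = tuple[complex, complex]  # (amplitude of |0>, amplitude of |1>)


def hadamard(state: Qubit) -> Qubit:
    """Apply the Hadamard gate, which maps |0> to an equal superposition."""
    a, b = state
    s = 1.0 / math.sqrt(2.0)
    return (s * (a + b), s * (a - b))


def probabilities(state: Qubit) -> tuple[float, float]:
    """Born rule: probability is the squared magnitude of each amplitude."""
    a, b = state
    return (abs(a) ** 2, abs(b) ** 2)


def measure(state: Qubit, rng: random.Random) -> int:
    """Sample one measurement outcome (0 or 1) from the state."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1


if __name__ == "__main__":
    plus = hadamard((1.0, 0.0))
    print("after one Hadamard :", probabilities(plus))
    print("after two Hadamards:", probabilities(hadamard(plus)))
    rng = random.Random(0)
    print("ten measurements   :", [measure(plus, rng) for _ in range(10)])
```

Applying the gate twice returns the qubit to 0 with certainty, because the two routes to 1 cancel. That cancellation is the interference quantum algorithms rely on, and a coin flip could not reproduce it.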

7. **Quantum Entanglement: “Spooky Action at a Distance”**

Among the many perplexing phenomena of quantum mechanics, quantum entanglement stands out as perhaps the most mysterious. It occurs when two or more particles become linked in such a way that the quantum state of one cannot be described independently of the state of the other, regardless of the distance separating them. It’s as if they share an invisible bond: measurement outcomes on the two particles are correlated more strongly than any classical explanation allows. These correlations cannot be used to send information faster than the speed of light, but they profoundly challenge our conventional understanding of locality in the universe.

The implications of entanglement are truly extraordinary. If two particles are entangled, and a measurement is performed on one of them, its state instantly becomes definite. Simultaneously, the state of its entangled partner is also instantly determined, even if that partner is light-years away. There is no measurable time delay; the connection appears to be instantaneous. This seemingly impossible connection led none other than Albert Einstein to famously refer to it as “spooky action at a distance,” initially considering it a fundamental flaw or incompleteness in quantum theory itself, as it appeared to violate his theory of relativity which places a universal speed limit on information transfer.

Despite Einstein’s initial skepticism, numerous rigorous experiments have repeatedly demonstrated the reality and robustness of entanglement. It is no longer a mere theoretical construct but is now firmly established as a real and essential component of the quantum world, a fundamental aspect of how particles interact and correlate at the deepest levels. Its existence forces us to reconsider our classical notions of separate entities and independent properties.

Beyond its profound philosophical implications, entanglement also possesses immense practical applications, especially in the rapidly evolving landscape of quantum technology. It is an indispensable ingredient in the emerging field of quantum computing, where entangled qubits can perform complex calculations with unparalleled efficiency. Furthermore, entanglement is a cornerstone of quantum cryptography, enabling the creation of ultra-secure communication systems that are theoretically impervious to eavesdropping. The very act of attempting to intercept an entangled signal would alter its quantum state, immediately alerting the communicating parties to a breach. This ‘spooky action’ is thus being harnessed to create a new paradigm of secure data transmission, promising virtually unbreakable encryption for our most sensitive information.
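
The correlation at the heart of entanglement can be sketched by sampling joint measurements of a two-qubit Bell state, an equal superposition of “both 0” and “both 1”. The names below are hypothetical. Note the limits of the sketch: it measures both qubits in a single basis, and correlations of that kind could also be produced by a shared classical coin. The distinctly quantum behavior shows up only when several measurement bases are compared, as in Bell tests, which this example does not attempt.

```python
import math
import random

# Amplitudes of the Bell state (|00> + |11>) / sqrt(2), keyed by joint outcome.
BELL_STATE = {
    "00": 1.0 / math.sqrt(2.0),
    "01": 0.0,
    "10": 0.0,
    "11": 1.0 / math.sqrt(2.0),
}


def sample_pair(rng: random.Random) -> str:
    """Sample one joint measurement outcome using the Born rule."""
    r = rng.random()
    cumulative = 0.0
    for outcome, amplitude in BELL_STATE.items():
        cumulative += abs(amplitude) ** 2
        if r < cumulative:
            return outcome
    return "11"  # guard against floating-point rounding


if __name__ == "__main__":
    rng = random.Random(42)
    pairs = [sample_pair(rng) for _ in range(10)]
    print(pairs)
    print("always equal:", all(p[0] == p[1] for p in pairs))
```

Each qubit taken alone looks like a fair coin, which is why neither party can use the pair to signal; only when the two records are compared does the perfect agreement appear.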

8. **The Observer Effect: Reality Shaped by Perception**

Beyond the intrinsic properties of particles, quantum mechanics introduces a truly profound concept: the observer effect. This principle suggests that the very act of observing or measuring a quantum system can fundamentally alter its behavior. It’s a notion that challenges our classical assumptions, where the observer is typically considered separate and passive, with no influence over the observed phenomenon. In the quantum realm, this clear-cut separation blurs, hinting at a more interconnected reality.

This idea was vividly underscored by the double-slit experiment, a classic demonstration we touched upon earlier. When particles, such as electrons or photons, are allowed to pass through the slits unobserved, they create an interference pattern, behaving like waves. However, the moment an attempt is made to detect which slit a particle traverses, its wave-like behavior collapses, and it acts as a discrete particle, producing two distinct bands. The astonishing implication is that merely attempting to gather information about a quantum system forces it to pick a definite state.

Such a radical idea has led to deep philosophical debates among physicists. Some interpretations of quantum mechanics, including certain aspects of the Copenhagen interpretation, suggest that the observer plays an active and integral role in shaping the reality of a quantum system. It’s not just about seeing what’s there; it’s about participating in the manifestation of its state. This means that the universe at its most fundamental level might not be a fixed, objective entity, but rather a dynamic tapestry woven with the threads of observation and probability.

9. **Quantum Tunneling: Defying Classical Barriers**

Another phenomenon that thoroughly upends our classical intuition is quantum tunneling. Imagine a ball rolling towards a hill. Classically, if the ball doesn’t have enough energy to roll over the top, it will simply roll back down. In the quantum world, however, a particle can, against all odds, effectively ‘tunnel’ through that barrier, even if it lacks the classical energy required to surmount it. It’s a probabilistic escape, a ghost-like passage through what should be an impenetrable obstacle.

This counter-intuitive process arises from the wave-like nature of particles and the uncertainty principle. Instead of following a predictable, deterministic path, quantum particles are described by wave functions that extend through space. Even if a barrier is present, there’s a non-zero probability that the wave function will have an amplitude on the other side, meaning the particle has a chance, however small, of appearing on the ‘wrong’ side of the barrier without ever having traversed it in a classical sense. It’s a testament to the inherent fuzziness and probabilistic character of the quantum world.
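
For the simplest textbook case, a rectangular barrier of height V₀ and width a, the tunneling probability for a particle of energy E below V₀ has a closed form: T = 1 / (1 + V₀² sinh²(κa) / (4E(V₀ − E))), with κ = √(2m(V₀ − E)) / ħ. The sketch below evaluates it for an electron; the 1 eV energy, 2 eV barrier, and sub-nanometre widths are illustrative assumptions.

```python
import math

HBAR = 1.054571817e-34            # reduced Planck constant, joule-seconds
ELECTRON_MASS = 9.1093837015e-31  # kilograms
ELECTRON_VOLT = 1.602176634e-19   # joules per electronvolt


def transmission_probability(energy_ev: float, barrier_ev: float,
                             width_m: float,
                             mass_kg: float = ELECTRON_MASS) -> float:
    """Tunneling probability through a rectangular barrier (energy < barrier)."""
    if not 0.0 < energy_ev < barrier_ev:
        raise ValueError("this formula assumes 0 < energy < barrier height")
    energy = energy_ev * ELECTRON_VOLT
    barrier = barrier_ev * ELECTRON_VOLT
    # Decay constant of the wave function inside the barrier.
    kappa = math.sqrt(2.0 * mass_kg * (barrier - energy)) / HBAR
    sinh_squared = math.sinh(kappa * width_m) ** 2
    return 1.0 / (1.0 + barrier ** 2 * sinh_squared
                  / (4.0 * energy * (barrier - energy)))


if __name__ == "__main__":
    for width_nm in (0.25, 0.5, 1.0):
        t = transmission_probability(1.0, 2.0, width_nm * 1e-9)
        print(f"width {width_nm} nm -> transmission {t:.2e}")
```

The probability is small but non-zero, and it drops steeply as the barrier widens. That sensitivity to distance is what a scanning tunneling microscope exploits.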

Far from being a mere theoretical curiosity, quantum tunneling is a cornerstone of many real-world physical processes and advanced technologies. It is, for instance, the very mechanism that powers our sun and other stars, enabling nuclear fusion to occur by allowing atomic nuclei to overcome their mutual electrostatic repulsion. Without quantum tunneling, stars wouldn’t shine, and life as we know it would not exist. Furthermore, it underpins the operation of essential devices like tunnel diodes, which are critical in high-speed electronics, and the scanning tunneling microscope (STM), which provides atomic-scale images of surfaces by exploiting the quantum tunneling of electrons between a sharp tip and a sample. This ‘impossible’ phenomenon is thus at the heart of both cosmic energy and nanoscale engineering.

10. **The Copenhagen Interpretation: Making Sense of the Quantum World**

Given the profoundly strange and counter-intuitive nature of quantum phenomena—from superposition to entanglement and the observer effect—physicists have grappled with how to interpret what the mathematical equations of quantum mechanics actually mean for reality. Among the various frameworks proposed, the Copenhagen interpretation, developed primarily by Niels Bohr and Werner Heisenberg in the 1920s, emerged as one of the most widely accepted and influential understandings of the quantum world.

At its core, the Copenhagen interpretation asserts that a quantum system does not possess definite properties until it is measured or observed. Before observation, the system exists in a probabilistic ‘superposition’ of all its possible states, mathematically described by a wave function. The act of measurement is not a passive revelation of a pre-existing reality; rather, it actively ‘collapses’ the wave function, forcing the system to settle into one definite outcome from its multitude of possibilities. In this view, the wave function describes the probabilities of different outcomes, not the outcomes themselves.

However, this interpretation, for all its success in predicting experimental results, is not without its critics and complexities. It raises challenging questions about the role of the observer and the exact moment of wave function collapse, often leaving macroscopic reality ambiguous, as famously illustrated by Schrödinger’s thought experiment. These conceptual difficulties have spurred alternative interpretations, such as the Many-Worlds Interpretation, which postulates that every quantum measurement causes the universe to split into parallel realities where each possible outcome is realized. Another, the de Broglie-Bohm interpretation, offers a deterministic view, suggesting that particles have definite positions guided by a ‘pilot wave,’ contrasting with the inherent probabilistic nature of Copenhagen. These ongoing debates highlight the deep conceptual chasm between our classical intuition and the true nature of quantum reality.

11. **Mathematics: The Language of the Quantum Realm**

While the concepts of quantum physics are often difficult, if not impossible, for us to visualize in our everyday macroscopic terms, mathematics provides the essential language and framework to describe and predict these phenomena with remarkable precision. Unlike the familiar mechanics of classical physics, where we can often intuitively grasp the trajectory of a ball or the flow of water, the quantum world’s inherent strangeness demands a more abstract, rigorous descriptive tool. Equations become the bedrock upon which our understanding of quantum objects and their interactions is built, offering an exactitude that our imagination simply cannot conjure.

Crucially, mathematics is indispensable for representing the fundamentally probabilistic nature of quantum phenomena. Consider the position of an electron, for instance. We often cannot know its exact location at a given moment. Instead, its whereabouts might be described as being distributed across a range of possible locations, perhaps within an atomic orbital, with each specific point associated with a probability of finding the electron there. This isn’t a deficiency in our measurement capabilities; it’s a reflection of the quantum reality itself, where certainty is often replaced by probabilities.

To capture this inherent uncertainty and multi-state existence, quantum objects are frequently described using mathematical ‘wave functions.’ These functions are the solutions to the famous Schrödinger equation, a central pillar of quantum mechanics. Unlike waves in water or sound waves, which track physical properties like height or air compression, wave functions don’t represent a directly observable physical property. Instead, the squared magnitude of the wave function gives the likelihood of finding a particular object at each of a range of possible locations. Just as a ripple on a pond or a note from a trumpet is spread out and not confined to a single point, wave functions illustrate how quantum objects can be spread across multiple places, and indeed take on multiple states, as in superposition, all at once, underscoring the deep connection between quantum mathematics and the bizarre realities it describes.
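
As a small worked example of turning a wave function into probabilities, consider the textbook “particle in a box”: a particle confined to a region of length L, whose stationary wave functions are ψₙ(x) = √(2/L) sin(nπx/L). The sketch below integrates the squared wave function numerically to get the chance of finding the particle in a given interval. The function names and the midpoint integration scheme are choices made for this illustration.

```python
import math


def box_wavefunction(n: int, x: float, length: float) -> float:
    """Stationary state n (1, 2, 3, ...) of a particle in a box of given length."""
    return math.sqrt(2.0 / length) * math.sin(n * math.pi * x / length)


def probability_between(n: int, a: float, b: float, length: float,
                        steps: int = 10_000) -> float:
    """Probability of finding the particle between a and b.

    Integrates |psi|^2 over [a, b] with the midpoint rule.
    """
    dx = (b - a) / steps
    total = 0.0
    for i in range(steps):
        x_mid = a + (i + 0.5) * dx
        total += box_wavefunction(n, x_mid, length) ** 2 * dx
    return total


if __name__ == "__main__":
    L = 1.0  # arbitrary units
    print(f"whole box   : {probability_between(1, 0.0, L, L):.4f}")
    print(f"middle third: {probability_between(1, L / 3, 2 * L / 3, L):.4f}")
```

In the lowest state the particle is found in the middle third of the box about 61% of the time, well above the 33% a uniform spread would give.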

12. **Google’s Quantum Advantage: Pioneering ‘Quantum Echoes’**

In the relentless pursuit of transformative computing power, Google has once again made significant strides, moving beyond earlier debates about ‘quantum supremacy’ to demonstrably achieve ‘quantum advantage’ with its cutting-edge ‘quantum echoes’ algorithm. This breakthrough, building on the capabilities of Google’s current-generation Willow chip, represents a crucial step toward practical and useful quantum computing, performing calculations in a fraction of the time it would take even the most powerful classical supercomputers available today.

The ‘quantum echoes’ approach is an ingenious series of operations meticulously performed on the hardware qubits of Google’s quantum machine. These qubits, which uniquely hold a single bit of quantum information in a superposition between two values, are entangled with their neighbors, allowing their probabilities to influence one another. The core of the ‘echoes’ involves applying a set of two-qubit gates, which alters the system’s state, followed by a reverse set of gates. However, critically, randomized single-qubit gates are inserted between these forward and reverse operations. This ‘small butterfly perturbation,’ as Google’s Tim O’Brien explains, ensures the system doesn’t return to its exact original state, creating an imperfect copy, much like an acoustic echo.
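
The forward, perturb, reverse structure can be illustrated with a deliberately tiny toy: a single simulated qubit, one rotation standing in for the forward evolution, a small rotation about a different axis standing in for the “butterfly” perturbation, and the inverse rotation standing in for the reversed gates. This is not Google’s algorithm, which runs entangling two-qubit gates across many hardware qubits; the gate choices and function names here are assumptions made only to show why the echo comes back imperfect.

```python
import cmath
import math

Matrix = list[list[complex]]
State = list[complex]


def ry(theta: float) -> Matrix:
    """Rotation about the Y axis; plays the role of the forward evolution."""
    c, s = math.cos(theta / 2.0), math.sin(theta / 2.0)
    return [[c, -s], [s, c]]


def rz(phi: float) -> Matrix:
    """Rotation about the Z axis; plays the role of the small perturbation."""
    return [[cmath.exp(-1j * phi / 2.0), 0.0], [0.0, cmath.exp(1j * phi / 2.0)]]


def apply(gate: Matrix, state: State) -> State:
    """Multiply a 2x2 gate into a two-component state vector."""
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]


def echo_probability(theta: float, perturbation: float) -> float:
    """Probability of returning to the starting state |0> after the echo."""
    state: State = [1.0, 0.0]
    state = apply(ry(theta), state)         # forward evolution
    state = apply(rz(perturbation), state)  # small "butterfly" kick
    state = apply(ry(-theta), state)        # reversed evolution
    return abs(state[0]) ** 2


if __name__ == "__main__":
    for kick in (0.0, 0.2, 1.0):
        print(f"perturbation {kick}: echo {echo_probability(math.pi / 2, kick):.4f}")
```

With no perturbation the reversal is perfect and the echo probability is 1; any kick in between lowers it, and the size of the drop carries information about the perturbation.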

What makes this approach so powerful and fundamentally quantum is how these forward and backward evolutions interfere with each other. O’Brien elaborates that on a quantum computer, these evolutions interweave, affecting the probabilities of various paths the system could take between its starting point, reflection point, and final state. This intricate quantum interference ultimately dictates the system’s final configuration. The advantage becomes starkly clear in performance: a measurement that took Google’s quantum computer a mere 2.1 hours would, according to estimates, demand approximately 3.2 years on the Frontier supercomputer. This staggering difference, assuming no major classical algorithmic improvements, solidifies Google’s claim to quantum advantage, underscoring the disruptive potential of these novel computational paradigms.
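
The two runtimes quoted above imply a speedup factor that is easy to check. The sketch below does only that unit conversion, using a 365.25-day year as an assumption; it says nothing about how either estimate was obtained.

```python
HOURS_PER_YEAR = 365.25 * 24  # assumes an average year of 365.25 days


def speedup_factor(quantum_hours: float, classical_years: float) -> float:
    """Ratio of the estimated classical runtime to the quantum runtime."""
    return classical_years * HOURS_PER_YEAR / quantum_hours


if __name__ == "__main__":
    factor = speedup_factor(quantum_hours=2.1, classical_years=3.2)
    print(f"Implied speedup: about {factor:,.0f}x")
```

With these inputs the ratio comes out at roughly 13,000 to 1.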

13. **The TARDIS Experiment: Unlocking Molecular Secrets with Quantum Echoes**

While demonstrating quantum advantage is a monumental achievement, the real power of quantum computing lies in its utility. Google’s latest work takes a significant step in this direction, proposing that its ‘quantum echoes’ algorithm can be harnessed to understand real-world physical systems, specifically small molecules within Nuclear Magnetic Resonance (NMR) machines. This pivot from abstract computational feats to tangible scientific application highlights the growing maturity of quantum technology.

This innovative application, fittingly dubbed TARDIS—Time-Accurate Reversal of Dipolar InteractionS—leverages the quantum property of ‘spin’ inherent in every atomic nucleus. In molecules, these spins influence one another, forming intricate networks. The TARDIS experiment involves synthesizing a molecule with a specific carbon-13 isotope at a known location, which then acts as a source of a signal that propagates through this spin network. The team describes this as a ‘many-body echo, in which polarization initially localized on a target spin migrates through the spin network, before a Hamiltonian-engineered time-reversal refocuses to the initial state.’ The refocusing is exquisitely sensitive to perturbations on distant ‘butterfly spins,’ allowing researchers to measure how far polarization propagates.

The TARDIS process, a meticulously crafted sequence of control pulses sent to the NMR sample, initiates a perturbation of the molecule’s nuclear spin network, followed by a second set of pulses that reflect an echo back to the source. These returning reflections are inherently imperfect, with noise originating from hardware limitations and, crucially, from the influence of fluctuations in distant atoms along the spin network. By either observing natural fluctuations or intentionally inserting them with randomized control signals, researchers can use quantum echoes to extract structural information from molecules at greater distances than is currently possible with classical NMR techniques. The ability to accurately model how these echoes propagate through complex molecules, a task prohibitive for classical computations, is well within the capabilities of quantum computing, as demonstrated by Google’s paper, thus promising a new era for molecular analysis.

14. **The Future Quantum Horizon: Uniting the Universe and Revolutionizing Technology**

As Google’s recent breakthroughs vividly illustrate, quantum mechanics is not a static theory confined to textbooks; it is a vibrant, evolving field at the forefront of scientific discovery and technological innovation. While the current demonstrations, such as the TARDIS experiment, remain proofs of concept using relatively small molecules and hardware (requiring 15 hardware qubits, simulable classically), the trajectory for future development is clear. Researchers are highly optimistic about extracting structural information from molecules at distances currently unattainable with traditional NMR, recognizing the immense potential upsides. Achieving this, however, will necessitate significant improvements in hardware fidelity, estimated by O’Brien to be a factor of three or four, to model molecules beyond classical simulation capabilities.

Beyond these immediate applications, the grander vision for quantum mechanics continues to inspire physicists worldwide. Despite its unparalleled success in explaining the microscopic world, quantum mechanics has yet to fully integrate with Albert Einstein’s general relativity, the theory that elegantly describes gravity and the macroscopic universe. The quest for a unified theory—often termed quantum gravity or explored through frameworks like string theory—remains one of the most significant challenges in modern physics. This ongoing endeavor seeks to bridge the conceptual chasm between the very large and the very small, promising an even deeper understanding of the universe’s fundamental fabric.

As we continue to probe the quantum realm, it promises to unveil even more astonishing phenomena, challenging our deepest intuitions about the very nature of reality itself. From the bizarre interconnectedness of entanglement to the mind-bending possibilities of superposition and tunneling, quantum mechanics opens doors to possibilities that, just a century ago, belonged purely to the realm of science fiction. The ongoing development of quantum computing and quantum cryptography, fueled by these fundamental principles, points towards a future where computational power is exponentially enhanced, and data security is virtually impenetrable. Quantum mechanics is not just a theory; it is a profound invitation to look beyond the familiar and embrace the delightful weirdness that lies at the heart of nature, shaping the future of science and technology in ways we are only just beginning to imagine.

The journey inside the quantum chip, and indeed, inside the quantum universe, is a testament to human ingenuity and our insatiable drive to understand the cosmos. Each discovery, each engineered qubit, each novel algorithm, brings us closer to a future where the enigmatic rules of the smallest particles unlock the greatest advancements, propelling us toward an era where the impossible truly becomes routine.