The digital age, powered by an ever-expanding universe of data and the algorithms that process it, stands at a fascinating crossroads. On one hand, artificial intelligence offers unprecedented capabilities to optimize complex systems, promising a future of enhanced efficiency and potentially vast energy savings. Yet, on the other, the very infrastructure that fuels this AI revolution – particularly immense data centers – consumes staggering amounts of power, raising urgent questions about its environmental footprint. It’s a paradox that demands a closer look, a balancing act between exponential technological advancement and the imperative for global sustainability.

For years, the sheer scale of energy required to keep servers humming, cool vast halls of processors, and transmit torrents of data has been a growing concern. Data centers alone accounted for about 1.5% of the world’s electricity consumption last year, a figure predicted to more than double by 2030, according to the International Energy Agency. This trajectory could increase reliance on fossil fuels, exacerbating climate change through greenhouse gas emissions. However, within this challenge lies a profound opportunity: to turn AI’s analytical power inward, making the digital engine of our world more efficient.

This article delves into the intricate ways AI is being leveraged to not only curb its own energy demands but also to fundamentally transform how various sectors consume power. From the nuanced algorithms optimizing data center cooling to the broader strategies for selecting energy-efficient models, we will uncover the deep technological innovations driving this shift. It’s a journey into the heart of machine learning, where complex systems learn to minimize waste, and the promise of a more sustainable future begins to emerge from the buried layers of server code.

1. **AI’s Fundamental Role in Data Center Energy Optimization**

At the heart of the drive for sustainable AI lies a foundational challenge: the monumental energy appetite of data centers. These digital behemoths, the very engines of our connected world, are notorious for their high electricity consumption. However, the integration of advanced AI algorithms into their operational fabric has emerged as a revolutionary strategy, offering a potent pathway to dramatically reduce this energy demand and redefine efficiency standards.

One project, titled “Minimize-Energy-consumption-with-Deep-Learning-model,” directly addresses this issue. It uses a deep learning model to reduce the energy consumption of a simulated data center by up to 70%. This isn’t just a marginal improvement; if results of that size carry over to real facilities, they would represent a major shift in how we conceive of and manage the power requirements of our digital infrastructure.

The approach taken by this project is inspired by real-world successes, notably the 40% reduction in cooling energy achieved at Google’s data centers through the application of a DeepMind AI model. Such benchmarks underscore the potential of AI to transform industrial energy use. By deploying intelligent systems that continuously learn and adapt, data centers can move beyond static, inefficient management practices towards dynamic, responsive, and ultimately far more sustainable operations.

2. **The Mechanics of Q-Learning for Energy Management**

The core intelligence behind many of these energy optimization projects, including the one aiming for a 70% reduction, often resides in a sophisticated form of reinforcement learning known as Q-Learning. This algorithm is not merely a tool for automation; it’s a powerful framework that allows an “agent” — in this case, the AI managing the data center — to learn the “quality of actions” to take under a vast array of circumstances, without requiring an explicit model of the environment.

Q-Learning operates by iteratively estimating the value of every possible action in a given state, continuously exploring the environment and refining those estimates over many iterations. Its objective is to discover an “optimal policy,” which is a rule for choosing an action in each state so as to “maximiz[e] the expected value of the total reward over any and all successive steps, starting from the current state.” This means the AI isn’t just reacting to immediate conditions; it also weighs the future rewards an action makes possible.

The theoretical underpinning of Q-Learning is deeply rooted in the “Bellman equations,” which are fundamental to reinforcement learning. These equations break down the value of a decision into two critical components: the immediate reward gained from an action and the discounted future values that action might unlock. In the context of energy management, the “reward is defined as the absolute difference between the energy required by the cooling system vs the energy required by the AI model,” effectively quantifying the “energy saved by AI.” This allows the system to prioritize actions that lead to the greatest energy conservation.
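
The update rule behind this description can be sketched in a few lines of Python. This is a minimal tabular illustration, not the project's own code: the discount factor `GAMMA`, the learning rate `ALPHA`, the state labels, and the energy figures are all assumed values chosen for the example. Only the reward definition (the absolute difference between the two energy figures) comes from the description above.

```python
from collections import defaultdict

GAMMA = 0.9    # discount factor applied to future values (assumed value)
ALPHA = 0.1    # learning rate (assumed value)
N_ACTIONS = 5  # number of discrete actions (assumed for this sketch)


def energy_reward(energy_baseline: float, energy_ai: float) -> float:
    """Reward as described above: |baseline cooling energy - AI energy|."""
    return abs(energy_baseline - energy_ai)


def q_update(q_table, state, action, reward, next_state):
    """Apply one Q-learning update and return the new Q(state, action)."""
    # Bellman target: immediate reward plus discounted best future value.
    best_next = max(q_table[next_state])
    td_target = reward + GAMMA * best_next
    # Move the current estimate a fraction ALPHA toward the target.
    q_table[state][action] += ALPHA * (td_target - q_table[state][action])
    return q_table[state][action]


# Every unseen state starts with a Q-value of 0.0 for each action.
q_table = defaultdict(lambda: [0.0] * N_ACTIONS)

# Hypothetical step: the baseline cooling system used 10.0 units, the AI 4.0.
r = energy_reward(energy_baseline=10.0, energy_ai=4.0)
new_q = q_update(q_table, state="warm", action=2, reward=r, next_state="ok")
```

With an empty table, the first update moves the estimate one tenth of the way toward the reward, so `new_q` ends up at roughly 0.6.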

3. **Deep Learning Architecture for Energy Prediction**

To effectively implement Q-Learning in a complex environment like a data center, it needs a robust underlying structure to process information and make predictions. This is where the deep learning model comes into play, providing the neural network architecture that transforms raw data into actionable insights. The project described utilizes a relatively “simple neural network made of 3 fully connected layers,” a testament to how even streamlined designs can yield significant results when properly applied.

This neural network is designed to take a “normalized vector representing the state” as its primary input. In the specific context of data center energy optimization, this crucial “state is represented by the server temperature, the number of users and the data transmission rate.” These factors are continuously monitored and updated “at each time step,” providing the AI with a real-time snapshot of the operating environment upon which to base its decisions.
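
As a rough illustration of what a “normalized vector representing the state” might look like, the sketch below min-max scales the three readings into the range 0 to 1. The operating ranges in `RANGES` and the sample readings are hypothetical; the source does not state which normalization the project uses.

```python
def normalize(value, low, high):
    """Min-max scale a reading into [0, 1], clamping out-of-range values."""
    scaled = (value - low) / (high - low)
    return min(1.0, max(0.0, scaled))


# Hypothetical operating ranges for each state variable (assumptions).
RANGES = {
    "temperature_c": (-20.0, 80.0),
    "users": (10, 100),
    "data_rate": (20, 300),
}


def make_state(temperature_c, users, data_rate):
    """Build the normalized state vector from the three raw readings."""
    readings = {
        "temperature_c": temperature_c,
        "users": users,
        "data_rate": data_rate,
    }
    return [normalize(readings[key], *RANGES[key]) for key in RANGES]


# One time step's readings, each chosen to sit mid-range.
state = make_state(temperature_c=30.0, users=55, data_rate=160)
```

Scaling every input to a common range keeps any one variable from dominating the network's weights simply because its raw numbers are larger.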

The internal processing power of the network is housed within “2 hidden layers [that] have 64 and 32 nodes respectively,” allowing for complex pattern recognition and data correlation. The final layer, termed the “output layer,” is responsible for “predict[ing] the Q-values for 5 potential actions covering the options available to the system.” A “softmax activation function” then translates these values into a “probability distribution over the actions.” Because softmax preserves ordering, the action with the highest probability is also the one with the highest Q-value, which guides the AI toward the most energy-efficient choice. Furthermore, learning is supported by the “Experience Replay” technique, in which the model stores past interactions and trains on samples drawn from them.
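
To make the layer sizes concrete, here is a forward pass through a network of that shape, written in plain Python with untrained random weights. It is a sketch under assumptions: the ReLU activation on the hidden layers, the weight initialization, and the sample input are choices made for this example, and the project itself may differ on all three.

```python
import math
import random

LAYER_SIZES = [3, 64, 32, 5]  # state -> hidden 64 -> hidden 32 -> 5 Q-values


def init_layer(n_in, n_out, rng):
    """Small random weights and zero biases for one fully connected layer."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def dense(x, weights, biases, activation=None):
    """Fully connected layer: one weighted sum plus bias per output node."""
    out = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
    if activation == "relu":  # hidden-layer activation is an assumption
        out = [max(0.0, v) for v in out]
    return out


def softmax(values):
    """Turn raw scores into a probability distribution (numerically stable)."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


rng = random.Random(0)  # fixed seed so the sketch is repeatable
layers = [init_layer(n_in, n_out, rng)
          for n_in, n_out in zip(LAYER_SIZES, LAYER_SIZES[1:])]


def predict(state):
    """Return (Q-values, action probabilities) for a normalized state."""
    hidden = dense(state, *layers[0], activation="relu")
    hidden = dense(hidden, *layers[1], activation="relu")
    q_values = dense(hidden, *layers[2])
    return q_values, softmax(q_values)


q_values, probs = predict([0.5, 0.5, 0.5])
best_action = max(range(len(probs)), key=probs.__getitem__)
```

Since softmax is monotonic, `best_action` is the same index whether it is picked from `probs` or directly from `q_values`.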

4. **Tangible Results: Proving AI’s Energy Saving Power**

The theoretical elegance of Q-Learning and deep neural networks finds its validation in measurable results, which in this project come from simulation rather than a live facility. The project’s findings underscore the significant potential for AI to curb energy consumption within industrial settings, particularly data centers. While the “percentage of energy saved varies depending on the experiments,” the core premise of substantial reduction holds across the simulated scenarios.

To provide a comprehensive assessment, the energy saving percentage is determined by “simulating one full year cycle,” offering a robust and long-term view of the AI’s efficacy. This extensive simulation allows for the capture of seasonal variations, fluctuations in user demand, and other dynamic environmental factors that influence a data center’s energy footprint. It moves beyond short-term observations to deliver a compelling case for sustained energy efficiency.

A concrete example from these simulations: one sample scenario reported a 68% energy saving “thanks to AI compared to the usual integrated cooling system.” This figure is close to the project’s stated ceiling of up to 70% reduction. Crucially, both the AI system and the traditional cooling system were tasked with the same objective: “to maintain the server within an optimal temperature range of 18° to 24°C,” ensuring that energy savings do not come at the cost of operational integrity or server performance. The simulation’s granular approach, performed for “time steps of one minute over a full year,” further emphasizes the precision and continuous optimization capabilities of the AI.
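
One plausible way to arrive at such a percentage is to total the energy each system uses over every one-minute step of the year and compare the two sums. The sketch below assumes that formula, and the per-minute energy values are made-up constants rather than simulation output.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 one-minute time steps


def energy_saved_pct(baseline_energy, ai_energy):
    """Percentage of the baseline's total energy that the AI run avoided."""
    total_baseline = sum(baseline_energy)
    total_ai = sum(ai_energy)
    return 100.0 * (total_baseline - total_ai) / total_baseline


# Toy data: the baseline uses 1.0 unit per minute, the AI-managed run 0.32.
baseline = [1.0] * MINUTES_PER_YEAR
with_ai = [0.32] * MINUTES_PER_YEAR

saved = energy_saved_pct(baseline, with_ai)
print(f"Energy saved over the simulated year: {saved:.1f}%")
```

In a real simulation the two lists would vary minute by minute with temperature, user count, and data rate; the comparison at the end stays the same.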

5. **The Alarming Energy Footprint of AI Itself**

While AI presents a powerful solution for optimizing energy use, it’s crucial to acknowledge the elephant in the server room: AI itself is a significant consumer of energy. The complex computations required to train and run sophisticated AI models demand colossal amounts of electricity, and they run primarily within the same data centers that AI is now helping to make more efficient. This creates a critical feedback loop, where the technology designed to save energy also contributes substantially to its consumption.

The statistics are indeed stark. Data centers, indispensable for fueling AI, accounted for approximately “1.5% of the world’s electricity consumption last year,” a figure projected to “more than double by 2030,” according to the International Energy Agency. This rapid escalation in energy demand carries severe environmental implications. Such an increase could necessitate the burning of “more fossil fuels such as coal and gas,” leading to heightened “greenhouse gas emissions that contribute to warming temperatures, sea level rise and extreme weather.”

The scale of this issue is further illuminated by recent research. Studies have indicated that merely by being “more judicious in which AI models we use for tasks,” there’s a potential to “save 31.9 terawatt-hours of energy this year alone.” To put this into perspective, that amount is “equivalent to the output of five nuclear reactors.” This underscores that while AI offers tremendous potential for energy optimization, addressing its own inherent energy demands is a non-negotiable step toward true sustainability.
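
The reactor comparison can be sanity-checked with simple arithmetic. The sketch below assumes each reactor runs continuously for a full year, which is a simplification; only the 31.9 TWh and five-reactor figures come from the text above.

```python
TWH_SAVED = 31.9            # potential annual saving quoted above
REACTORS = 5                # number of reactors in the comparison
HOURS_PER_YEAR = 365 * 24   # 8,760 hours, ignoring leap years

# Annual energy attributed to each reactor.
twh_per_reactor = TWH_SAVED / REACTORS

# Convert TWh to MWh (1 TWh = 1,000,000 MWh), then spread over the year.
avg_power_mw = twh_per_reactor * 1e6 / HOURS_PER_YEAR

print(f"{twh_per_reactor:.2f} TWh per reactor, about {avg_power_mw:.0f} MW average output")
```

That works out to roughly 6.4 TWh per reactor per year, or an average output in the region of 700 MW, which is the right order of magnitude for a commercial reactor.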

6. **Strategic Model Selection: A Path to Significant Energy Savings**

Given the substantial energy footprint of AI, a critical strategy for mitigating its environmental impact lies not just in optimizing the infrastructure that hosts it, but in the intelligent selection and deployment of the AI models themselves. Researchers are actively exploring how choosing different models for the same task can lead to significant variations in energy consumption, unveiling a powerful lever for global energy savings that often goes unnoticed.

Tiago da Silva Barros at the University of Côte d’Azur and his team conducted a study examining “14 different tasks that people use generative AI tools for,” spanning from “text generation to speech recognition and image classification.” They used public leaderboards to compare model performance and measured each model’s energy use during inference, the stage at which an AI model produces an answer, with a tool called CarbonTracker. Total energy use was then estimated by tracking how often each model was downloaded.

The findings were compelling: “across all 14 tasks, switching from the best-performing to the most energy-efficient models for each task reduced energy use by 65.8 per cent.” Crucially, this remarkable energy saving came at a surprisingly low cost, “only making the output 3.9 per cent less useful.” This trade-off, where substantial environmental benefits are gained for a minimal decrease in utility, is one “they suggest could be acceptable to the public,” marking a viable pathway towards a more sustainable AI ecosystem.
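
The trade-off can be expressed as a simple selection rule: among the models whose score stays within a tolerated drop from the best performer, pick the one that uses the least energy. This is a hedged variant rather than the study's method (the researchers switched straight to the most energy-efficient model per task), and the model names, scores, and energy figures below are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelEntry:
    name: str
    score: float      # leaderboard score, higher is better
    energy_wh: float  # energy per inference, lower is better


def pick_efficient(models, max_score_drop=0.05):
    """Lowest-energy model whose score is within max_score_drop of the best."""
    best_score = max(m.score for m in models)
    floor = best_score * (1.0 - max_score_drop)
    eligible = [m for m in models if m.score >= floor]
    return min(eligible, key=lambda m: m.energy_wh)


# Hypothetical leaderboard for a single task.
candidates = [
    ModelEntry("large-model", score=0.90, energy_wh=10.0),
    ModelEntry("medium-model", score=0.87, energy_wh=3.5),
    ModelEntry("tiny-model", score=0.70, energy_wh=0.5),
]

choice = pick_efficient(candidates)
energy_cut = 1.0 - choice.energy_wh / candidates[0].energy_wh
print(f"{choice.name}: {energy_cut:.0%} less energy than the top scorer")
```

Here the smallest model is excluded because its score falls too far, so the rule settles on the mid-sized one. Tightening or loosening `max_score_drop` is how a user or provider would express how much utility they are willing to give up.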

7. **Overcoming Hurdles in AI Energy Efficiency Adoption**

While the promise of strategic model selection for energy savings is clear, translating this potential into widespread impact faces practical hurdles. The journey towards a truly energy-efficient AI landscape requires concerted effort from both the creators and the consumers of artificial intelligence. It’s not simply a matter of technical capability, but also one of behavioral change, industry transparency, and anticipating unforeseen consequences.

The researchers acknowledge this complexity. They estimate that “if people in the real world swapped from high-performance models to the most energy-efficient model,” the result would be a “27.8 per cent reduction in energy consumption overall.” However, as da Silva Barros points out, “that would require change from both users and AI companies.” Users need to be willing to accept a slight performance dip, and companies must be proactive in providing information about their models’ energy consumption.

Another significant challenge is the “rebound effect,” as Chris Preist at the University of Bristol highlights. “Reducing energy per prompt will simply allow more customers to be served more rapidly with more sophisticated reasoning options,” potentially offsetting initial savings by increased usage. Furthermore, Sasha Luccioni at Hugging Face emphasizes a critical need for “more transparency from AI companies, data center operators and even governments” to allow researchers and policymakers to make truly informed decisions and projections about AI’s long-term energy impact. Without this data, comprehensive analyses remain reliant on external estimates.
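
The rebound effect can be put in numbers. If each prompt uses a fraction less energy, total consumption still rises once usage grows by more than a break-even factor. The sketch below works that factor out; the 65.8 per cent input reuses the per-task figure from the study purely as an example, and the usage-growth values are hypothetical.

```python
def break_even_usage_growth(energy_cut_per_prompt):
    """Usage multiplier at which a per-prompt energy cut is fully cancelled."""
    if not 0.0 <= energy_cut_per_prompt < 1.0:
        raise ValueError("energy_cut_per_prompt must be in [0, 1)")
    return 1.0 / (1.0 - energy_cut_per_prompt)


def net_energy_change(energy_cut_per_prompt, usage_growth):
    """Relative change in total energy; negative values mean a net saving."""
    return (1.0 - energy_cut_per_prompt) * usage_growth - 1.0


growth = break_even_usage_growth(0.658)     # usage multiplier that erases the cut
doubled = net_energy_change(0.658, 2.0)     # usage doubles: still a net saving
tripled = net_energy_change(0.658, 3.0)     # usage triples: net increase
print(f"break-even at {growth:.2f}x usage; 2x -> {doubled:+.1%}, 3x -> {tripled:+.1%}")
```

Under these assumptions, usage would have to grow nearly threefold before the efficiency gain is wiped out, which shows both how much headroom the saving provides and how it can still be eroded.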

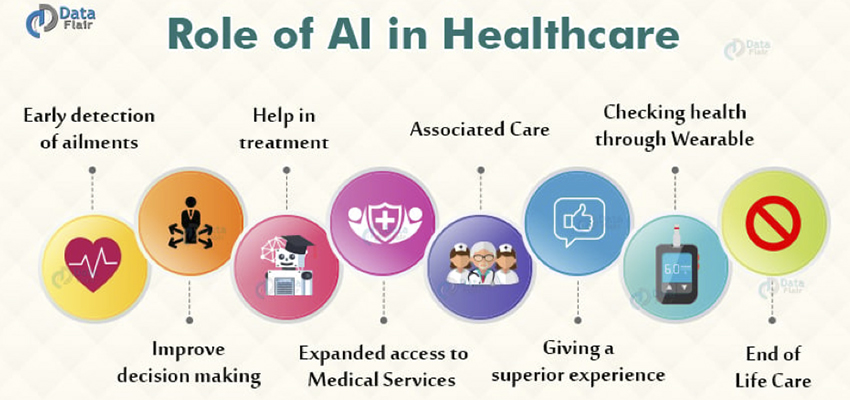

The initial deep dive into AI’s role in data center optimization and the critical need to address its own energy footprint sets the stage for a broader exploration. Artificial intelligence is not merely a tool for internal infrastructure efficiency; its analytical prowess is being unleashed across a myriad of industries, offering unprecedented opportunities to revolutionize energy efficiency and pave the way for a truly sustainable future. From the intricate systems governing our buildings to the vast networks of transportation and the delicate balance of natural resources, AI is proving to be a versatile and indispensable ally in the global quest for energy conservation and environmental stewardship.

8. **AI in Building Efficiency: Smart Structures, Smarter Consumption**

Our built environment, often an overlooked culprit in the climate crisis, stands to gain immensely from AI’s touch. Around one-third of U.S. greenhouse gas pollution stems directly from homes and buildings, making this sector a prime target for intelligent optimization. AI is transforming traditional building management from a static, reactive process into a dynamic, energy-saving operation, creating structures that learn and adapt.

Intelligent systems automatically adjust a building’s internal climate and lighting, moving beyond crude manual adjustments that often lead to waste. By leveraging real-time weather data, electricity usage patterns, and occupancy rates, AI fine-tunes ventilation, heating, and cooling systems. For instance, automated thermostats, particularly cost-effective for smaller buildings where a full HVAC overhaul isn’t feasible, can precisely schedule temperature regulation around worker arrivals and departures, eliminating the energy-intensive “blast the air” instinct.

Beyond daily adjustments, AI offers a leap forward in maintenance. It can monitor HVAC systems and other critical equipment, predicting and detecting potential failures before they escalate into costly repairs or significant energy drains. This predictive capability minimizes downtime and ensures that equipment operates at peak efficiency, reducing the unnecessary power consumption associated with malfunctioning hardware.

Collectively, these AI-driven automations are not just theoretical improvements; experts affirm they can reduce a building’s total energy consumption by a significant “10% to 30%.” This substantial saving represents a “super low-hanging fruit” for energy conservation, showcasing AI’s immediate and tangible impact on daily power use.

9. **Optimizing EV Charging and Grid Load with AI**

The electric vehicle (EV) revolution, while vital for decarbonizing transportation, introduces new demands on electricity grids. Ensuring that this growing fleet charges efficiently and without overwhelming existing infrastructure is a complex challenge, one that AI is uniquely positioned to solve by optimizing charging schedules for both cost and environmental impact.

AI can meticulously schedule the most efficient times for charging EVs and other devices like smartphones. This involves identifying periods when drawing power from the grid is least impactful – typically overnight, when overall demand and electricity rates are lower, and the likelihood of relying on fossil fuels for generation is reduced. Imagine plugging in your car during a peak period, only for AI to intelligently defer charging until the grid is less stressed, as highlighted by Alexis Abramson, dean of the Columbia University Climate School.

Concrete pilot programs have already demonstrated this efficacy. In California, a system shifted EV charging to times when more renewable energy was available, not only benefitting the environment but also saving customers money. Furthermore, AI helps homeowners with solar panels manage their energy storage, optimizing when to store excess energy in batteries for later use, maximizing the utility of clean power.
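
At its simplest, this kind of scheduling means picking the cleanest (or cheapest) hours within the window when the car is plugged in. The sketch below uses a greedy selection over an hourly forecast. It is an illustration only: the forecast values are invented, and real systems also account for charger limits, battery state, and price signals.

```python
def schedule_charging(carbon_intensity, plug_in, unplug, hours_needed):
    """Pick the lowest-carbon hours within [plug_in, unplug) of a forecast."""
    window = list(range(plug_in, unplug))
    if hours_needed > len(window):
        raise ValueError("not enough plugged-in hours to finish charging")
    # Rank the available hours by forecast intensity and keep the cleanest.
    cleanest = sorted(window, key=lambda hour: carbon_intensity[hour])
    return sorted(cleanest[:hours_needed])


# Hypothetical forecast in gCO2/kWh; index 0 is 18:00, index 11 is 05:00.
forecast = [450, 480, 470, 400, 350, 300, 250, 220, 210, 230, 280, 340]

# The car is plugged in for the whole window and needs four hours of charge.
hours = schedule_charging(forecast, plug_in=0, unplug=12, hours_needed=4)
print("Charge during forecast hours:", hours)
```

With this forecast the charger waits through the evening peak and runs in the early hours of the morning, the deferral behavior described above. Swapping the forecast for hourly prices would optimize for cost instead.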

10. **AI Combatting Methane Emissions in Oil and Gas**

The energy sector, traditionally associated with significant environmental impact, is also embracing AI for sustainability. Methane, a potent greenhouse gas, is responsible for approximately 30% of today’s global warming. A critical challenge in oil and gas operations is the release and flaring of methane to relieve pressure in pipes, a practice that both harms the planet and wastes valuable resources.

Enter Geminus AI, a Boston-based company employing deep learning and advanced reasoning to tackle this issue head-on. Their technology helps oil and gas companies drastically reduce methane flaring and venting, alongside optimizing energy use in extraction and refining processes. It represents a direct intervention to curb one of the fastest pathways to mitigating climate change’s worst effects.

Geminus’s approach involves continuously monitoring vast networks of wells and pipes. Through AI-driven simulations, their system can instantaneously suggest precise changes to compressor and pump settings, thereby eliminating the need for wasteful venting and flaring. While engineers traditionally require about “36 hours” to run similar simulations, Geminus performs these complex analyses in mere “seconds,” underscoring the revolutionary speed and efficiency AI brings to critical environmental challenges. As CEO Greg Fallon emphasizes, scaling this technology across the industry presents a “massive opportunity to reduce greenhouse gas emissions.”

11. **Unearthing Geothermal Potential with AI-Driven Exploration**

Geothermal energy, harnessing the Earth’s natural heat to generate clean electricity, offers a consistent and low-carbon alternative to fossil fuels. However, discovering viable geothermal hot spots deep underground is a notoriously complex and capital-intensive endeavor. This is where AI is stepping in, transforming the geological survey process and unlocking previously overlooked resources.

Salt Lake City-based startup Zanskar is pioneering this frontier by building AI models of the Earth’s subsurface. These models simulate and assess a vast number of possible geological scenarios, allowing the company to estimate where pockets of very hot water exist. From these insights, Zanskar can identify promising locations and drilling directions, improving the success rate of geothermal exploration. As Zanskar CEO Carl Hoiland puts it, “AI is becoming the solution to its own energy problem… It’s showing us a way to unlock resources that weren’t possible without it.”

The practical application of Zanskar’s AI has yielded remarkable results. Last year, their AI modeling successfully identified an untapped geothermal reservoir beneath an underperforming power plant in New Mexico, enabling its repowering. Even more impressively, they made a second geothermal discovery in Nevada, a site previously dismissed by industry experts as “too cold to support a utility-scale power plant,” demonstrating AI’s power to challenge conventional wisdom and unlock new energy frontiers.

12. **AI’s Role in Smarter Urban Traffic Flow and Emissions Reduction**

Urban transportation is another significant contributor to greenhouse gas emissions, with passenger cars and small trucks accounting for about 16% of the U.S. total. The incessant stop-and-go traffic that plagues cities worldwide not only wastes time but also consumes excessive fuel and spews pollutants. Google is leveraging AI and its ubiquitous Maps data to address this pervasive issue through Project Green Light.

Launched in 2023, Project Green Light is an innovative initiative that employs artificial intelligence to identify optimal traffic light adjustments. By analyzing real-time traffic patterns and historical data, AI can recommend synchronization changes that smooth traffic flow, reducing instances of vehicles idling and accelerating. This intelligent orchestration of urban mobility is already making a tangible difference across continents.

Project Green Light is currently active in “20 cities on four continents,” with Boston, renowned for its notoriously bad traffic, being among the most recent additions. Each city receives bespoke AI-generated recommendations, which city engineers then evaluate and implement. Google reports that Project Green Light can reduce stop-and-go traffic by an impressive “up to 30%,” leading to a “10% cut in emissions” and a noticeable improvement in urban air quality. As Juliet Rothenberg, Google’s product director of Earth and resilience AI, succinctly puts it, “We’re just scratching the surface of what AI can do.”

13. **The Broader Vision: AI as a Catalyst for Industrial Transformation**

The myriad applications of AI in energy efficiency, from optimizing data centers to revolutionizing urban traffic and geothermal exploration, paint a vivid picture of its transformative power. It’s clear that AI is not just a technological advancement but a fundamental catalyst, reshaping how industries operate and how societies manage their energy footprint. This extends far beyond mere incremental gains, promising a paradigm shift in how we approach sustainability.

AI’s unparalleled ability to process vast datasets and identify intricate patterns allows it to provide predictive insights and enable real-time adjustments across vastly disparate systems. Whether it’s anticipating maintenance needs in a building’s HVAC system, scheduling the most opportune moment for an EV to charge, or optimizing the flow of traffic in a bustling city, AI introduces a level of precision and responsiveness previously unattainable. It empowers systems to learn, adapt, and operate at their energetic optimum, moving away from static, often wasteful, traditional methods.

In essence, AI is emerging as an indispensable tool for any industry committed to both operational efficiency and environmental stewardship. It facilitates a critical transition from reactive management, where resources are consumed and issues are addressed after the fact, to a proactive, intelligent ecosystem. This intelligent intervention minimizes waste, maximizes resource utility, and fundamentally redefines the relationship between industrial activity and environmental impact, driving towards a future where efficiency is inherent to design.

14. **Charting the Course for Sustainable AI: Transparency, Innovation, and Policy**

As AI’s impact on global energy efficiency expands, so too does the imperative for a deliberate and forward-looking strategy to ensure its sustainable evolution. While the progress is undeniably impressive, the path ahead requires vigilance, concerted effort, and a multi-faceted approach that addresses both technological advancements and the societal implications. This includes a critical need for greater transparency across the board. As Sasha Luccioni at Hugging Face aptly points out, “What we need… is more transparency from AI companies, data center operators and even governments” to allow for truly informed decisions and projections.

Innovation continues apace, with techniques like “model distillation” allowing large AI models to train smaller, more energy-efficient counterparts, as seen with Google’s Gemini achieving a “33-fold energy-efficiency improvement” in a year. Yet, we must also grapple with the “rebound effect,” highlighted by Chris Preist at the University of Bristol. The risk is that reducing energy per prompt might simply enable “more customers to be served more rapidly with more sophisticated reasoning options,” potentially offsetting initial savings with increased usage. This underscores that technological fixes alone may not suffice without broader considerations.

Looking further ahead, advancements such as edge computing and federated learning promise to further decentralize processing and reduce latency, potentially making AI even more efficient. However, achieving true sustainability requires more than just technological refinement. It demands collective responsibility, proactive engagement from users and developers, and robust policy frameworks. Regulatory pressures will undoubtedly intensify globally, pushing data centers and AI developers to meet increasingly stringent sustainability demands. As Alexis Abramson optimistically suggests, while AI use will continue to increase, “we’re going to see our ability to process be much more efficient and as a result, the energy consumption won’t go up as much as some are predicting.”

Ultimately, the journey towards a truly sustainable AI future hinges on a holistic approach that deftly balances exponential technological advancement with profound ecological responsibility. It requires us to continuously refine our AI-driven energy management insights, foster open collaboration, and embrace transparency as a foundational principle. By doing so, we can ensure that AI not only powers our digital world but also stewards our planet, becoming a net positive force for global sustainability. This is the grand challenge, and the immense opportunity, that lies buried in the servers and beyond, waiting for us to unlock its full, responsible potential.