The narrative around autonomous driving, once a beacon of futuristic innovation, has increasingly become mired in complex regulatory scrutiny and pressing safety concerns, particularly for Tesla. The US National Highway Traffic Safety Administration (NHTSA) has launched a significant new investigation covering nearly 2.9 million Tesla vehicles, targeting their “Full Self-Driving” (FSD) mode after a wave of reports detailing traffic law violations and serious crashes. This probe signals a pivotal moment for the automaker, which has positioned its self-driving technology as a cornerstone of its brand and future vision, including the ambitious rollout of a “Robotaxi” service.

This latest inquiry by federal regulators is not an isolated incident but rather another addition to a growing dossier of official investigations and legal battles confronting the Elon Musk-led company. For years, Tesla has endeavored to perfect its self-driving capabilities, yet persistent challenges have emerged, ranging from alleged software malfunctions that defy basic traffic rules to questions surrounding the very nomenclature of its driver-assistance systems. The cumulative weight of these probes, coupled with substantial financial penalties from recent court rulings, places immense pressure on Tesla to demonstrate the safety and reliability of its advanced driver-assistance features.

As the automotive industry moves toward greater automation, oversight by agencies like the NHTSA underscores the importance of public safety and regulatory compliance. This article examines the key investigations, reported incidents, and legal implications surrounding Tesla’s self-driving technology, and the challenges that both the company and the broader autonomous vehicle sector must navigate on the way to widespread adoption. It covers the specific details that prompted these reviews, which show a technology still very much in development despite its advanced branding.

1. **The Latest NHTSA Probe: A Sweeping Investigation into Tesla’s FSD Violations**

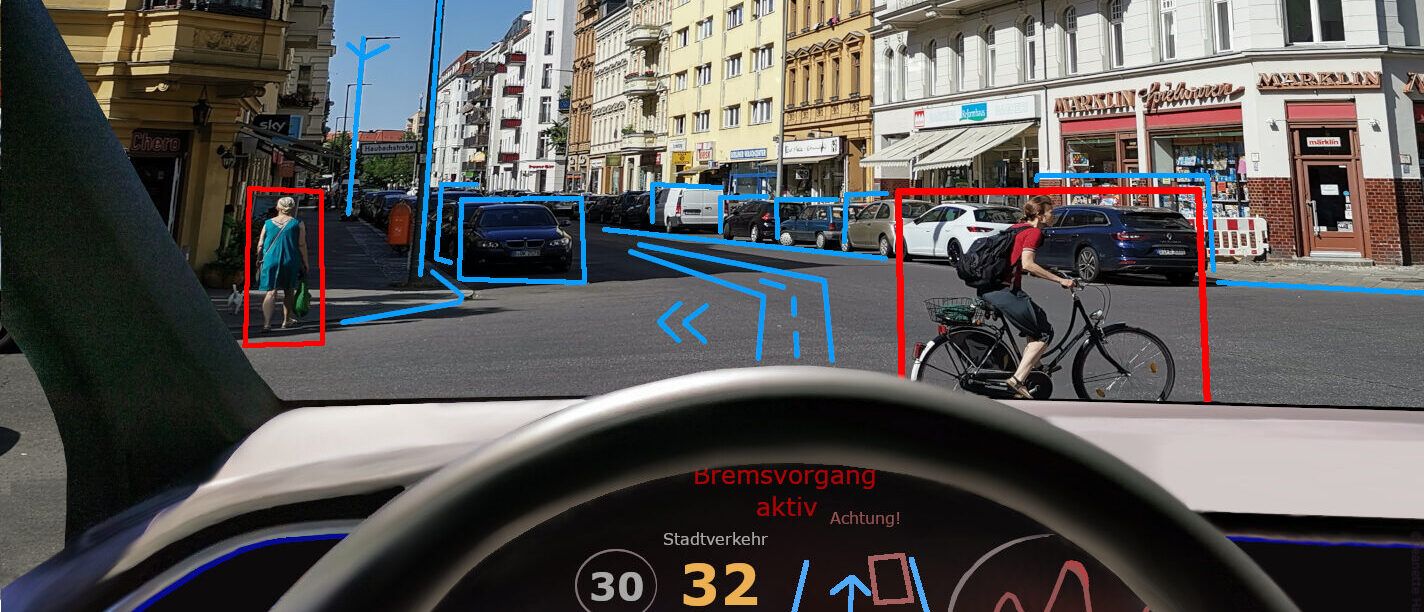

On Thursday, the US National Highway Traffic Safety Administration announced a new, extensive investigation into 2.88 million Tesla cars equipped with its “Full Self-Driving” (FSD) mode. The probe was initiated following numerous reports of vehicles appearing to disregard traffic laws and becoming involved in crashes. Its scope is vast, encompassing essentially all Teslas on the road equipped with the technology, and it reflects mounting concern among federal regulators about the real-world performance and safety of FSD.

The Office of Defects Investigation (ODI) within the NHTSA specified that it had received 58 reports of Teslas operating with FSD engaging in traffic safety violations. These incidents included vehicles running red lights and driving on the wrong side of the road. Of these reports, 14 involved crashes, and 23 injuries were reported in total. The sheer number of vehicles covered by this investigation, nearly 2.9 million, underscores the potential widespread impact should a recall be deemed necessary. The probe is a preliminary evaluation, the first stage of an NHTSA defect investigation, but it could still have profound consequences for the automaker.

Officials have detailed the most common types of safety violations reported to the NHTSA under this new probe. One primary scenario involves a vehicle operating with FSD proceeding into an intersection in direct violation of a red traffic signal. The second major type of scenario outlines FSD commanding a lane change into an opposing lane of traffic, a maneuver fraught with extreme danger. These specific categories of incidents point to fundamental issues in the software’s ability to interpret and react safely to standard road conditions and traffic signals, raising serious questions about its readiness for unsupervised public deployment.

The agency is actively working to determine the repeatability of some reported problems, collaborating with state authorities like Maryland’s Transportation Authority and State Police. This cooperation aims to ascertain if issues, such as those that occurred at a specific intersection in Joppa, Maryland, are recurring patterns, indicating a systemic flaw rather than isolated incidents. While Tesla has reportedly taken action to address the issue at the aforementioned Maryland intersection, the broader investigation into millions of vehicles suggests that such localized fixes may not fully resolve the systemic challenges confronting the FSD system.

2. **Documented FSD Malfunctions: Running Red Lights and Other Critical Failures**

The reports prompting the latest NHTSA investigation provide a stark picture of the FSD system’s alleged shortcomings. Among the most alarming documented incidents are those where FSD-enabled vehicles actively violated crucial traffic laws. The NHTSA stated that in six specific crashes, FSD “approached an intersection with a red traffic signal, continued to travel into the intersection against the red light and was subsequently involved in a crash with other motor vehicles in the intersection.” This description paints a vivid and concerning image of autonomous software directly contributing to severe collisions due to a failure to adhere to basic road rules.

Further illustrating these critical failures, a Houston driver lodged a complaint with the agency in 2024, explicitly stating that FSD “is not recognizing traffic signals.” This driver recounted instances where the system caused the vehicle to run through red lights while inexplicably stopping at green lights. The severity of the complaint was compounded by the driver’s assertion that “Tesla doesn’t want to fix it, or even acknowledge the problem,” despite having conducted a test drive where company representatives reportedly witnessed the issue firsthand. Such accounts from actual users underscore the real-world dangers and the frustration faced by those encountering these system malfunctions.

Beyond red light violations, the NHTSA is also examining other perilous behaviors exhibited by the driving software, particularly concerning railroad crossings. Recent attention has been drawn to incidents where Teslas engaged in FSD have driven “straight into oncoming trains” or otherwise behaved erratically when approaching railway intersections. These reports indicate a systemic vulnerability in the FSD’s ability to safely navigate complex and high-risk environments, where errors can have catastrophic consequences. The software’s erratic behavior at these crossings adds another layer of serious concern to its overall safety profile, suggesting a profound struggle with diverse and dynamic traffic scenarios.

The agency’s Office of Defects Investigation identified multiple complaints regarding FSD entering opposing lanes of travel during or after turns, crossing double-yellow lane markings, or attempting to turn onto a road in the wrong direction despite clear signage. Some incidents reportedly involved FSD executing lane changes into opposing traffic with minimal warning to the driver, significantly limiting opportunities for human intervention. These examples collectively illustrate a pattern of behavior that directly contravenes established traffic safety norms, posing substantial risks not only to Tesla occupants but also to other road users.

3. **Previous FSD Investigations: Crashes in Low-Visibility Conditions**

The current sweeping investigation into Tesla’s Full Self-Driving system is not the first instance of the NHTSA examining its safety performance. Prior to this latest probe, the agency had already opened another significant investigation into FSD in October 2024. This earlier inquiry focused on several crashes that reportedly occurred under poor visibility conditions, highlighting a different, yet equally critical, set of vulnerabilities within the software’s operational parameters. The consistent regulatory attention signals a sustained pattern of concern surrounding Tesla’s autonomous capabilities.

The October 2024 probe by the NHTSA centered on approximately 2.4 million Tesla vehicles, following reports of four crashes, one of which tragically proved fatal. These incidents were reported to have occurred “after entering an area of reduced roadway visibility conditions.” The agency cited specific environmental factors such as “sun glare, fog, or airborne dust” as contributing to these conditions. This focus on visibility issues suggests that the FSD system, which heavily relies on camera-based vision, may struggle to accurately perceive and process its surroundings when environmental factors compromise its optical sensors.

A particularly grim detail from this previous investigation involved a fatal crash where a pedestrian was struck and killed by a Tesla vehicle. Footage of one of the incidents under review reportedly showed FSD’s camera obscured by sunlight just before the car collided with an elderly woman on the side of the road, resulting in her death. Such an event underscores the dire consequences when advanced driver-assistance systems fail to account for common environmental challenges. It raises fundamental questions about the robustness of Tesla’s vision-only system in real-world, less-than-ideal driving scenarios, and whether it adequately safeguards vulnerable road users.

These investigations collectively build a comprehensive picture of the diverse challenges inherent in developing truly autonomous driving. The focus on low-visibility conditions points to a critical area where human drivers typically compensate with experience and alternative senses. If FSD, despite its sophistication, cannot reliably navigate common weather or lighting impediments, its deployment on public roads without constant, active human supervision presents a significant and acknowledged safety hazard. The layering of these investigations highlights a continuous cycle of identified issues, regulatory review, and the ongoing quest for verifiable safety.

4. **Autopilot Under Persistent Scrutiny: A History of Fatal Incidents**

Separate from the investigations into Full Self-Driving, Tesla’s less advanced driver-assistance system, Autopilot, has also been under intense and prolonged scrutiny by the NHTSA for over a year. While distinct from FSD, Autopilot represents an earlier iteration of Tesla’s automated driving ambitions and has its own history of regulatory concerns and severe incidents. This ongoing investigation into Autopilot underscores a broader pattern of safety issues across Tesla’s semi-autonomous technologies, demonstrating that challenges are not exclusive to the more advanced FSD system.

In August, a Miami jury delivered a significant blow to Tesla, ordering the company to pay more than $240 million in damages after a car operating on Autopilot struck and killed a woman in 2019. The jury found Tesla to be partly responsible for the deadly accident, signaling a clear legal precedent regarding the company’s liability in crashes involving its driver-assistance features. Tesla has publicly stated its intention to appeal this decision, arguing that such verdicts could stifle innovation in self-driving technology. However, the ruling undeniably places a substantial financial burden and legal responsibility on the automaker for the performance of its systems.

The NHTSA had previously closed an investigation into Tesla’s Autopilot system in April 2024, after identifying 13 fatal crashes related to the misuse of that software. While that particular inquiry was closed, a separate investigation remains open, focusing on the efficacy of a fix Tesla issued for Autopilot. This indicates that even when specific probes conclude, the underlying concerns about the system’s reliability and its propensity for misuse by drivers, sometimes with tragic outcomes, persist. The continuous monitoring and re-evaluation by federal safety agencies reflect a commitment to ensuring long-term safety.

These protracted investigations and legal challenges concerning Autopilot serve as a critical backdrop to the current FSD probes. They highlight a consistent thread of questions about driver monitoring, system limitations, and the human-machine interface across Tesla’s automated driving offerings. The legal findings and regulatory concerns surrounding Autopilot reinforce the notion that even with advanced technology, the responsibility for safe operation remains paramount, and failures can lead to severe consequences, both human and financial.

5. **Mounting Legal Liabilities: Landmark Damages for Autonomous System Failures**

The recent jury verdict against Tesla concerning its Autopilot system marks a significant escalation in the company’s legal liabilities and could set a far-reaching precedent for the autonomous vehicle industry. In August, a Miami jury concluded that Tesla was partly responsible for a deadly 2019 crash in Florida, in which a car using Autopilot struck and killed a woman. Tesla was ordered to pay more than $240 million in damages, a figure that underscores the severe financial ramifications when driver-assistance features are implicated in fatal accidents. Tesla has announced its intention to appeal, asserting that the verdict threatens innovation in the self-driving technology sector.

The same verdict is sometimes reported as a $329 million award. That larger figure is the total damages the jury assessed; because the jury found Tesla only partially responsible, the company’s own share comes to the more than $240 million noted above. The finding of partial responsibility reinforces the legal stance that the automaker bears a degree of culpability for the performance of its driver-assistance systems, and it signals a growing judicial understanding of the duties associated with developing and deploying advanced automated driving technologies. The case is pivotal in defining the legal boundaries for autonomous system developers.

Damages on this scale are not merely a financial setback; they are a powerful affirmation of accountability. They send a clear message that claims of advanced self-driving capabilities must be matched by real-world safety performance and that manufacturers cannot fully insulate themselves from the consequences of their technology’s failures. The substantial sums reflect the tragic human cost of these accidents and the legal system’s willingness to hold corporations responsible for ensuring their products operate safely, particularly when they involve complex, life-critical functions like vehicle control.

The ongoing legal battles, especially those involving significant monetary damages, cast a shadow over Tesla’s broader autonomous driving ambitions. They serve as a stark reminder that even as the company pushes the boundaries of technological innovation, it must contend with the tangible and severe legal implications of its systems’ performance on public roads. These cases, whether appealed or not, will undoubtedly influence future product development, marketing claims, and the regulatory landscape for autonomous vehicles, making them crucial markers in the evolution of this technology.

6. **The “Full Self-Driving” Paradox: Misleading Branding and the Need for Human Supervision**

One of the most persistent and perhaps foundational criticisms leveled against Tesla’s autonomous technology centers on the name itself: “Full Self-Driving.” Regulators and critics alike have long argued that this branding is a “misnomer” that inaccurately portrays the system’s true capabilities, leading drivers to a false sense of security and encouraging them to relinquish too much control. Despite its advanced nomenclature, the FSD system under investigation is explicitly classified as Level 2 driver-assistance software, a designation that unequivocally requires drivers to pay full attention to the road and be prepared to intervene at all times.

The California Department of Motor Vehicles (DMV) has taken significant legal action against Tesla, suing the company for false advertising due to FSD’s misleading branding. The core of this legal challenge stems from the undeniable fact that, despite its name, the software cannot fully drive by itself and necessitates constant human supervision to prevent accidents. This lawsuit highlights the direct conflict between Tesla’s marketing and the operational reality of the technology. Recognizing the pressure, Tesla notably added “(Supervised)” to the driving mode’s official name last year, a tacit acknowledgment of the system’s limitations and the ongoing requirement for driver engagement.

Tesla has consistently argued to regulators and in court cases that it has repeatedly informed drivers that the system cannot operate the cars autonomously and that whoever is behind the wheel must always be ready to intervene. However, critics, including money manager Ross Gerber, contend that the “misnomer” itself actively “lull[s] drivers into handing full control over to their cars.” This creates a dangerous disjunction where drivers, influenced by the promise of “Full Self-Driving,” may not fully grasp or adhere to the critical need for continuous active supervision, contributing to the very incidents regulators are now investigating.

The tension between the aspirational branding of “Full Self-Driving” and the practical demands of a Level 2 driver-assistance system represents a critical safety paradox. It raises profound ethical and regulatory questions about how advanced technologies are named and marketed to the public, especially when human lives are at stake. As the latest investigations unfold, the implications of this branding strategy, and the extent to which it may contribute to driver over-reliance and subsequent accidents, will remain a central point of contention, influencing both regulatory outcomes and public perception of Tesla’s autonomous ambitions.

7. **The Scrutiny of Tesla’s Robotaxi Ambitions**

Elon Musk’s ambitious vision for a network of driverless Robotaxis has long been a cornerstone of Tesla’s future strategy, promising to revolutionize urban transportation. However, the reality of its limited deployment has quickly attracted scrutiny, adding another layer of complexity to the company’s already challenging regulatory landscape. The launch of a limited “Robotaxi” service this summer in Austin, Texas, was met with keen observation from both the public and federal regulators, particularly the NHTSA, which has expanded its purview to include the real-world operational safety of these cabs.

Passenger videos emerging from the initial Austin operation almost immediately drew the attention of the NHTSA. These recordings showcased self-driving cabs, despite being accompanied by a human “safety monitor” sitting shotgun, exhibiting problematic behaviors. Reports indicated instances of these vehicles “careening over the speed limit and driving erratically,” raising serious questions about the system’s readiness for unsupervised public deployment and the effectiveness of the human override in dynamic urban environments.

Adding to the pressure, the new version of FSD, introduced earlier this week, is reportedly designed to incorporate training data acquired during this limited Robotaxi pilot. This integration suggests that the experiences and anomalies observed during Robotaxi operations are directly feeding back into the broader FSD software. While intended to refine the system, the documented erratic behavior of the Robotaxis means that potential flaws from this testing phase could be disseminated across the wider fleet of FSD-enabled vehicles, amplifying existing safety concerns.

The viability and expansion of the Robotaxi service are inherently tied to the perceived safety and reliability of FSD. As the company rapidly expands its autonomous driving operations in Texas, the NHTSA’s continued vigilance serves as a critical check on Musk’s promise to roll out hundreds of thousands of driverless taxis across US cities by the end of next year. The ongoing investigations into FSD’s foundational capabilities directly impede the realization of this grand vision, forcing a re-evaluation of the timeline and practical challenges inherent in truly autonomous mobility.

8. **The ‘Summon’ Feature Under Regulatory Lens**

Beyond its core Full Self-Driving and Autopilot systems, Tesla’s auxiliary autonomous features have also come under the scrutiny of federal regulators. The “Summon” technology, designed to allow drivers to command their cars to drive to their location to pick them up, represents another facet of Tesla’s approach to vehicle interaction. While seemingly convenient, the feature prompted an NHTSA investigation earlier this year, following reports of several fender benders in parking lots.

The concept of Summon is that a driver, through their smartphone, can instruct their parked Tesla to navigate autonomously to their immediate vicinity. This can involve maneuvering out of a parking spot and across a lot. However, the reported incidents of fender benders suggest that the system’s ability to safely perceive and react to dynamic, low-speed environments, such as busy parking lots with unpredictable pedestrian and vehicle movements, may still be underdeveloped. These incidents, while often minor in terms of damage, highlight a pattern of shortcomings in the software’s environmental awareness and predictive capabilities.

The NHTSA’s decision to launch a specific investigation into Summon underscores the agency’s broad approach to evaluating all aspects of Tesla’s automated driving offerings. It indicates that even features not directly involved in high-speed highway driving or complex urban navigation are subject to rigorous safety assessments. The reported issues with Summon contribute to the overarching narrative of Tesla’s autonomous software requiring further refinement and robust validation before widespread, unsupervised use can be considered entirely safe.

The outcome of the Summon investigation will further inform the regulatory body’s comprehensive understanding of Tesla’s overall autonomous technology stack. It reinforces the idea that every automated function, regardless of its perceived complexity or operational speed, must meet stringent safety standards. Such probes ensure that incremental advancements are not prioritized over the foundational requirement for absolute reliability and public safety across the entire spectrum of automated vehicle features.

9. **Non-Compliance in Crash Reporting: A Growing Concern**

A critical element of automotive safety regulation involves the accurate and timely reporting of incidents, especially those involving advanced driver-assistance systems. The National Highway Traffic Safety Administration launched an investigation in August, specifically looking into why Tesla has not been reporting crashes promptly, as required by law. This inquiry adds a serious dimension to the company’s regulatory challenges, suggesting potential lapses in compliance with fundamental safety reporting protocols.

Under the NHTSA’s Standing General Order for Crash Reporting (SGO), companies developing and deploying autonomous or partially autonomous vehicles must submit information about crashes involving their systems. This reporting is crucial for regulators to monitor safety trends, identify potential defects, and make informed decisions regarding necessary interventions, such as recalls. Any failure to comply can impede the NHTSA’s ability to perform its oversight function effectively, potentially obscuring critical safety patterns.

The agency’s Office of Defects Investigation (ODI) notably identified six reports from Tesla under the SGO in its latest probe into FSD. While these specific reports are part of the larger FSD investigation, the separate August inquiry into general non-compliance with reporting suggests a broader, systemic issue. If Tesla is found to be routinely delaying or omitting crash reports, it could face heavy penalties and further erode trust with regulatory bodies, creating a significant impediment to its autonomous ambitions.

This investigation into crash reporting compliance highlights the foundational importance of transparency and accountability in the development of cutting-edge automotive technology. Accurate data is indispensable for ensuring public safety and fostering a robust regulatory framework. The resolution of this probe will not only determine potential penalties for Tesla but also set a precedent for how other manufacturers of advanced driver-assistance systems adhere to critical safety reporting obligations.

10. **Expert Criticisms and Hardware Debates for FSD**

Beyond regulatory investigations and legal battles, Tesla’s Full Self-Driving software faces significant critiques from automotive safety experts and technology analysts. These expert opinions often challenge the fundamental approach Tesla has taken, particularly its reliance on a “vision-only” system that primarily uses cameras to perceive the environment. This technical debate forms a crucial part of the ongoing conversation around the reliability and safety of FSD.

Money manager Ross Gerber, a long-time Tesla investor who once strongly supported the company’s driver assistance features, has become a prominent voice advocating for a change in Tesla’s hardware strategy. Gerber contends that the vision-only system, which relies solely on cameras, needs to be “supplemented with radar sensors and other hardware.” His argument posits that without the redundancy and diverse sensing capabilities offered by technologies like radar, the FSD system struggles to accurately interpret complex or adverse driving conditions, contributing to the very accidents under investigation.

Gerber’s critique extends to the operational effectiveness of the FSD software itself, stating unequivocally that “the software doesn’t work right.” He asserts that Tesla must “take responsibility” for these shortcomings, suggesting that the company either needs to “adjust the hardware accordingly — and Elon can just deal with his ego issues — or somebody is gonna have to come in and say, ‘Hey, you keep causing accidents with this stuff and maybe you should just put it on test tracks until it works.’” This strongly worded advice underscores the urgency many experts feel regarding the system’s current state.

These expert criticisms, particularly those advocating for hardware changes, present a direct challenge to Elon Musk’s long-held philosophy of a camera-centric approach to autonomous driving. The debate over whether FSD requires additional sensors highlights a fundamental divergence in engineering philosophy within the industry. As regulators continue their probes, the technical efficacy of Tesla’s chosen hardware and software architecture remains a central point of contention, influencing perceptions of safety and the path forward for truly autonomous vehicles.

11. **Market Performance Under Pressure: Stock Volatility and Sales Challenges**

The cumulative weight of regulatory investigations, mounting legal liabilities, and persistent safety concerns has cast a discernible shadow over Tesla’s market performance. Once a darling of investors, the company’s stock has shown increased volatility, reflecting investor apprehension regarding the future trajectory of its autonomous driving ambitions and broader business challenges. This pressure is evident in both stock movements and sales figures, painting a picture of a company navigating significant headwinds.

News of the new NHTSA investigation into FSD, covering nearly 2.9 million vehicles, saw Tesla’s stock fall almost 3% at one point on Thursday, though it closed with a loss of just 0.7%. While such daily fluctuations are common, the sustained scrutiny contributes to broader investor caution. Seth Goldstein, a Morningstar analyst with a “sell” rating on the stock, captured this sentiment: “The ultimate question is, ‘Does the software work?’” The remark ties the stock’s valuation directly to the technology’s performance.

Beyond the self-driving narrative, Tesla’s core business of selling cars is also struggling, adding to the pressure on the company. Recent results showed a 12% revenue drop to $22.5 billion and a 13.5% year-over-year decline in vehicle deliveries. Contributing factors include customer boycotts linked to Elon Musk’s political views and intense competition from rival electric vehicle makers, notably China’s BYD, which are gaining market share with “cheaper, high-quality offerings.”

In response to these sales challenges, Musk announced two new, stripped-down and cheaper versions of existing models, including the best-selling Model Y. However, this move failed to impress investors, who had perhaps hoped for more aggressive pricing or entirely new offerings, pushing the stock down another 4.5%. The intersection of regulatory issues, legal setbacks, market competition, and political headwinds presents a complex and challenging environment for Tesla, underscoring how central the success of FSD is to the company’s perceived value and future growth.

12. **Elon Musk’s Vision vs. Reality: The Future of Tesla’s Autonomous Driving**

Elon Musk’s pronouncements regarding Tesla’s self-driving capabilities have consistently been characterized by ambitious timelines and often exaggerated claims, shaping both public perception and investor expectations. He has long openly stated that Tesla cars can “drive themselves,” promising that fully autonomous driving is perpetually “right around the corner.” This aspirational narrative, however, stands in stark contrast to the escalating reality of regulatory probes, legal judgments, and expert critiques that highlight the significant gap between vision and current operational capability.

Musk’s fortune as the world’s richest man derives partly from Tesla’s “levitating stock,” a valuation often fueled by the promise of groundbreaking technologies like FSD and Robotaxis. He has pledged to roll out hundreds of thousands of driverless taxis in cities across the United States by the end of next year, a timeline that appears increasingly challenging given the open investigations and persistent safety concerns. The pressure to demonstrate success with FSD is immense, especially as the company’s traditional car sales face mounting difficulties.

Despite the setbacks, Tesla continues to iterate and develop, with a new version of FSD recently introduced and a “vastly upgraded version that does not require driver intervention” reportedly being tested. This ongoing development indicates a clear commitment to the long-term goal of full autonomy. However, the path forward is now undeniably complicated by regulatory requirements, legal precedents, and public trust issues, which demand a more measured and verifiable approach to deployment.

Ultimately, the future of Tesla’s autonomous driving rests on its ability to bridge the chasm between its visionary promises and the verifiable safety performance demanded by regulators and the public. The current barrage of investigations serves as a critical juncture, forcing Tesla to confront the practical and ethical implications of its technology. The outcome will not only define Tesla’s position in the autonomous vehicle race but also shape the broader regulatory framework and public acceptance of self-driving cars globally, determining whether Musk’s ambitious vision can truly transcend the challenges of reality.

The journey toward widespread autonomous driving is clearly fraught with complexities, as evidenced by the intense scrutiny facing Tesla. The numerous investigations by the National Highway Traffic Safety Administration, coupled with significant legal liabilities and expert critiques, underscore the paramount importance of safety and regulatory compliance in this rapidly evolving technological landscape. While the promise of self-driving cars remains compelling, the ongoing challenges confronting Tesla highlight that the road to full autonomy is not merely a matter of technological innovation but also of rigorous validation, transparent accountability, and the unwavering commitment to public safety. The future will undoubtedly require a delicate balance between pushing the boundaries of what’s possible and ensuring that those advancements are deployed responsibly and safely for all road users.