The tech world watched intently as Apple, a titan known for its meticulously crafted ecosystem, orchestrated a series of monumental shifts in its core silicon strategy. What often appears as a simple product update on the surface is, in reality, the culmination of decades of calculated decisions, strategic pivots, and an unwavering pursuit of control and optimization. From its earliest days with Motorola to its lengthy partnership with Intel, and then its groundbreaking leap into custom Apple Silicon, the company’s processor journey has been anything but linear.

This complex narrative isn’t just a tale of technological advancement; it’s a deep dive into the business acumen and engineering prowess that underpin Apple’s entire product philosophy. Understanding why Apple has moved the Mac across four processor families since 1984 (Motorola 68k, PowerPC, Intel x86, and Apple Silicon), a history marked by both triumphs and frustrations with external suppliers, reveals the critical motivations driving one of the industry’s most influential companies. It’s about more than just speed; it’s about integration, efficiency, and a singular vision for user experience.

Join us as we pull back the curtain on the 14 fundamental reasons that compelled Apple to continually re-evaluate and redefine its chip strategy, ultimately leading to the “strategic triumph” of its custom silicon. We’ll explore the historical context, the technical limitations, and the strategic imperatives that forged Apple’s path to becoming a semiconductor powerhouse in its own right, starting with the early chapters of its processor evolution.

1. **Motorola/PowerPC Stagnation: Why the first chip relationship faltered.**

Apple’s journey with processors is a long and winding road, stretching back to the very first Macintosh computers. These early machines, foundational to Apple’s identity, relied on Motorola chips to power their innovative graphical interfaces. For a time, this partnership was effective, providing the necessary processing muscle for Apple’s nascent computing ambitions.

However, as the personal computer market matured, Motorola’s strategic priorities began to shift. The company increasingly focused its efforts and resources on the embedded computer market, a sector quite different from the general-purpose PCs Apple was building. This reorientation meant that innovation for desktop and laptop computing, which Apple desperately needed, started to stagnate within Motorola’s offerings.

This stagnation became a critical limitation for Apple. As a company striving for cutting-edge performance and user experience, being tied to a chip supplier whose priorities lay elsewhere was unsustainable. The lack of forward movement in Motorola’s chip development compelled Apple to look for more dynamic and innovative partners, setting a precedent for future strategic pivots when external dependencies began to hinder its progress.

2. **Intel’s Early Promise: The allure of x86 performance per watt.**

In the early 1990s, Apple attempted to address these limitations by forming the AIM Alliance with IBM and Motorola. Their collaborative effort resulted in the PowerPC processor, a formidable challenger designed to go head-to-head with Intel’s dominant x86 chips. For a significant period, PowerPC indeed delivered superior performance and impressive efficiency, carving out a niche for Apple’s Macs.

Yet, by the early 2000s, the tides began to turn once more. The development of PowerPC processors, mirroring earlier issues, started to slow considerably. IBM, one of the key partners, increasingly focused on its lucrative server business, diverting resources from general-purpose desktop chip innovation. Motorola, still grappling with scaling production profitably, also contributed to the PowerPC’s lagging development.

This confluence of factors left Apple facing a critical choice by 2005. PowerPC chips had undeniably fallen behind Intel’s rapidly advancing x86 processors across several key metrics, including raw performance, efficiency, and development timelines. Intel’s roadmap, in stark contrast, promised significantly better performance per watt, a metric that was rapidly gaining importance for Apple. This was particularly true as notebooks accounted for a growing share of Mac sales, necessitating highly efficient portable computing solutions. These compelling factors ultimately led Steve Jobs to announce the historic transition to Intel processors in 2005.

3. **Intel’s Performance Per Watt Plateau: Why Apple started to look beyond Intel for efficiency.**

The initial move to Intel processors delivered immediate and tangible benefits for Apple. Macs became noticeably faster, enabling more demanding tasks and enhancing the overall user experience. They also became thinner and more energy-efficient, paving the way for revolutionary products like the MacBook Air, which famously required a custom version of Intel’s Core 2 Duo chip to achieve its iconic compact design. This collaboration brought about a renaissance for the Mac platform, solidifying its place in the modern computing landscape.

However, the honeymoon period eventually waned, and the inherent limitations of relying on a third-party chip supplier, even one as powerful as Intel, gradually became apparent. While Intel chips were optimized for a vast array of devices across the entire PC industry, this “one-size-fits-all” approach often meant they fell short of Apple’s increasingly specific and demanding requirements. Apple had a singular vision for its hardware, and Intel’s general-purpose designs couldn’t always match it.

A major sticking point for Apple was the performance per watt metric. Apple “prioritised efficiency as much as raw performance,” especially for its laptops and mobile devices where battery life and thermal management are paramount. Intel’s processors, designed for a broader market, experienced a stagnation in this crucial area. This made it increasingly difficult for Apple to innovate within its desired thin and light form factors, as it couldn’t achieve the necessary power efficiency without compromising design or performance.

4. **The Imperative for Customization: Intel’s “one-size-fits-all” limiting Apple’s unique designs.**

As Apple continued to expand and diversify its product lineup, its need for highly tailored silicon grew exponentially. Each new device, from the sleek MacBooks to the powerful iMacs, came with its own unique set of design constraints, thermal requirements, and performance targets. The aspiration was to create hardware and software that were seamlessly intertwined, delivering an experience simply unattainable with off-the-shelf components.

Intel’s approach, while providing robust processors for the wider PC market, inherently operated on a “one-size-fits-all” philosophy. This meant that Apple, despite being a major customer, couldn’t deeply customize the chips to precisely match its unique designs and thermal architectures. The inability to finely tune the processor’s characteristics for each specific device created bottlenecks, preventing Apple from fully realizing its ambitious product visions.

The appeal of custom silicon, therefore, became undeniable. Designing its own chips offered Apple “the flexibility to design chips for each device, from iPhones to Macs, maximising performance and efficiency.” This was a pivotal strategic insight, allowing Apple to create silicon that was not just powerful, but perfectly symbiotic with its hardware, unlocking entirely new levels of optimization and innovation that were simply out of reach when relying on external, generalized solutions.

5. **Intel’s Manufacturing Bottlenecks: How delays impacted Apple’s product roadmap.**

Beyond performance and customization, the practicalities of chip manufacturing posed significant challenges in Apple’s relationship with Intel. Intel, once a clear leader in semiconductor fabrication, began to experience considerable difficulties with its advanced process nodes, particularly its 10-nanometer process, which faced repeated delays in the mid-2010s. These internal struggles within Intel had direct and detrimental ripple effects on Apple’s ambitious product release schedules.

Delays in chip availability meant Apple couldn’t launch its new Mac models as planned, creating gaps in its product refresh cycles and potentially ceding market momentum. This dependence on Intel’s manufacturing timelines directly constrained Apple’s ability to “innovate on its own terms, release products on schedule,” and integrate cutting-edge features. For a company that prided itself on meticulous planning and timely, impactful product launches, these external delays were a profound frustration.

In stark contrast, Apple had already developed a robust and highly effective strategy for its custom A-series chips used in iPhones and iPads. Its reliance on external partners like TSMC (Taiwan Semiconductor Manufacturing Company) for fabrication had consistently demonstrated “the advantages of more reliable and advanced semiconductor manufacturing.” This divergence in manufacturing reliability highlighted the growing disparity between Intel’s internal capabilities and Apple’s needs, further solidifying the case for independent silicon development.

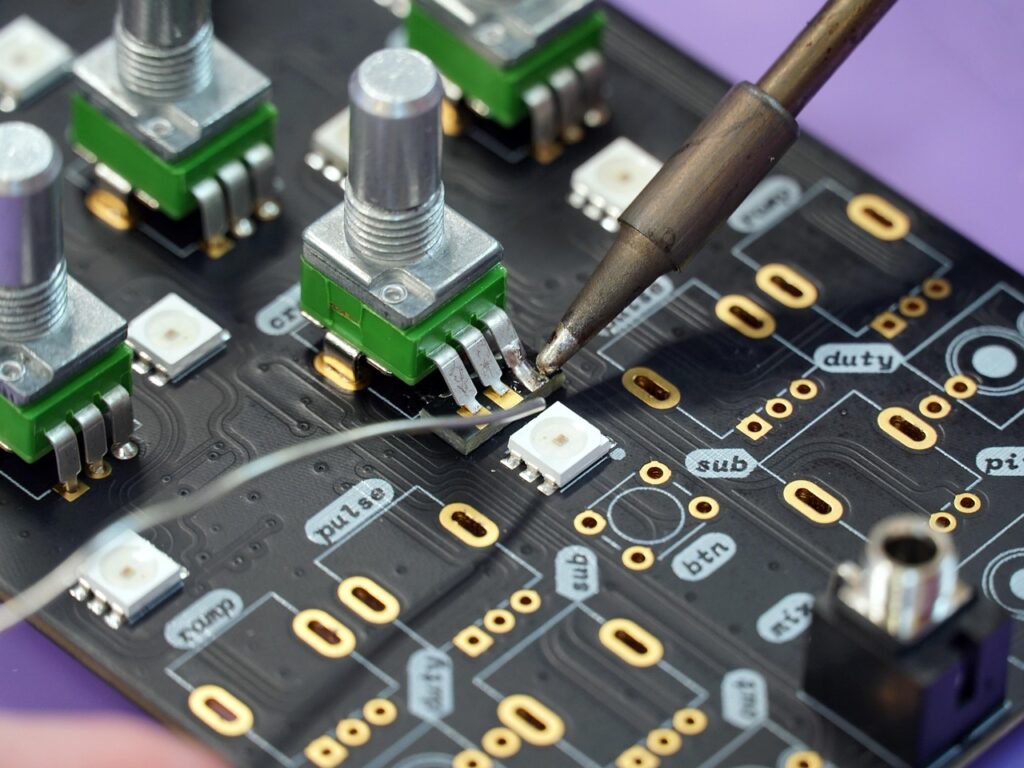

6. **The Skylake Crisis: The “abnormally bad” quality assurance that became a turning point.**

Among the various issues that strained the Intel-Apple partnership, the quality of Intel’s Skylake processors proved to be a particularly significant catalyst for change. François Piednoël, a former Principal Engineer and Performance Architect at Intel, offered a candid explanation for Apple’s eventual departure. He revealed that “the quality assurance of Skylake was more than a problem… It was abnormally bad.”

Piednoël explained the severity, stating, “We were getting way too much citing for little things inside Skylake. Basically our buddies at Apple became the number one filer of problems in the architecture. And that went really, really bad.” This implied that Apple’s engineers were effectively performing Intel’s quality assurance work, identifying an excessive number of bugs that should have been caught internally. For a company like Apple, known for its meticulous attention to detail and control over its products, this situation was profoundly frustrating.

The former Intel engineer considered this period to be “the inflection point,” where Apple’s frustration with “buggy Intel processors” reached a breaking point. It directly led Apple to “decide to pursue what has become known as Apple Silicon as a consequence.” This critical failure in quality assurance, compelling Apple to expend its own resources on fundamental chip validation, starkly underscored the vulnerabilities of its reliance on an external, increasingly unreliable supplier.

7. **Desire for Tighter Ecosystem Integration: The vision for seamless hardware-software synergy.**

From its very inception, Apple has championed the philosophy of tight integration between hardware and software. This synergistic approach is a cornerstone of the Apple experience, enabling devices to perform beyond the sum of their individual parts. However, when relying on third-party processors like Intel’s, achieving this ultimate level of integration was always going to be a compromise. The design of the chip, the operating system, and the applications were never truly conceived as a single, unified entity.

By designing its “own silicon, Apple could achieve tighter integration between hardware and software.” This was not just a minor improvement; it was a foundational shift that unlocked groundbreaking new possibilities. It meant that the operating system, macOS, could be meticulously optimized to run with unparalleled efficiency on Apple’s own hardware, leading to superior “performance, energy efficiency, and system stability.” This level of co-design simply isn’t possible when the chip architecture is external.

This holistic approach also extended to user-facing features, allowing for advancements such as “seamless app compatibility across devices, enhanced battery life, and consistent performance across product lines.” The success of Apple’s A-series chips in iPhones, which had already demonstrated their potential by “outperforming competitors in both speed and efficiency” through this very integration, served as a powerful proof of concept. It highlighted that true innovation and differentiation lay in having complete vertical control over the silicon, fostering an unparalleled user experience.

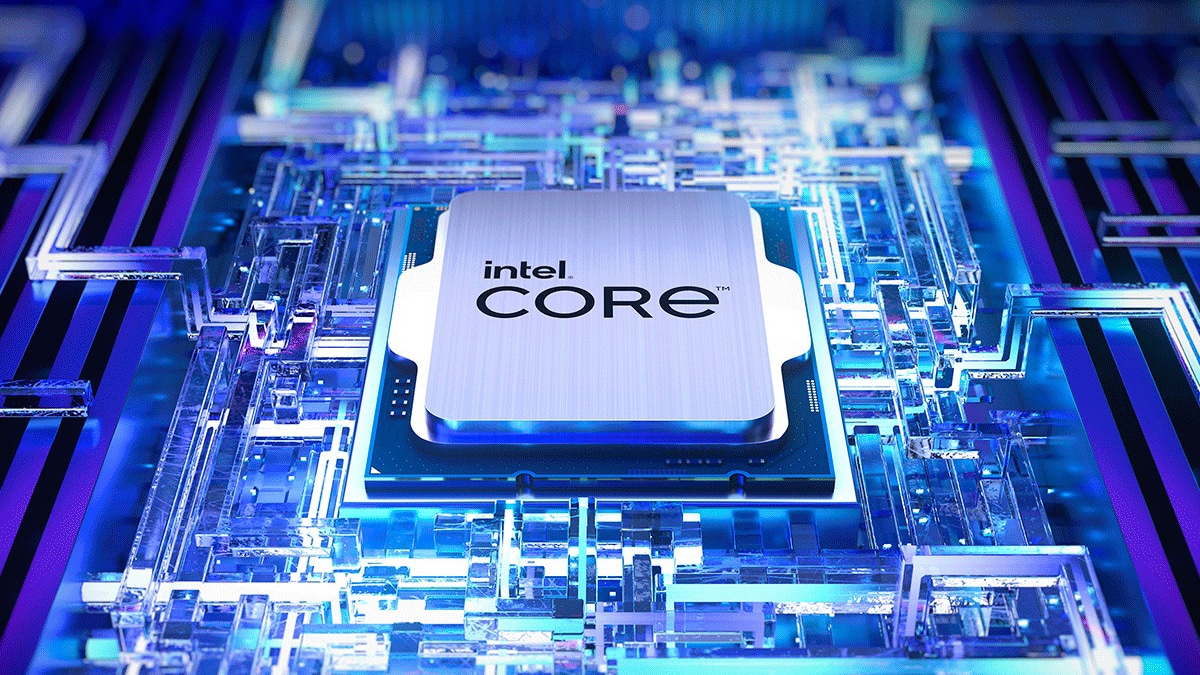

8. **The M1 Revolution: Unprecedented Performance and Power Efficiency.**

The accumulated frustrations with external chip suppliers, detailed in the preceding sections, finally culminated in a transformative moment for Apple in 2020. The introduction of the M1 chip, the first in its custom silicon series for Macs, wasn’t just another product announcement; it was a resounding declaration of independence. Built upon the ARM architecture, the M1 signaled a radical shift in how Apple approached performance, efficiency, and ultimately, the entire user experience of its desktop and laptop computers.

From day one, the M1 delivered staggering improvements, immediately outperforming the Intel processors it replaced while consuming significantly less power. This wasn’t merely a marginal upgrade; it was a fundamental redefinition of what was possible in consumer-grade computing. The efficiency gains translated directly into real-world benefits, most notably in battery life, with M1-powered MacBooks dramatically extending the time users could work untethered from a power outlet.

Crucially, Apple anticipated the compatibility challenges of such a monumental architectural shift. Its solution, Rosetta 2, proved to be a masterful stroke of engineering, smoothing the transition by translating Intel-based applications so they could run on the new ARM-based Macs. This foresight alleviated user concerns and accelerated developer adoption, proving that Apple could innovate without alienating its existing software ecosystem. As Craig Federighi, Apple’s Senior Vice President of Software Engineering, candidly put it, “We overshot. This is working better than we even thought it would.” The M1’s success paved the way for subsequent iterations like the M2, M3, and M4 chips, each further cementing Apple’s lead in performance per watt and pushing the boundaries of what consumer devices can achieve.

9. **Superior Thermal Efficiency: Enabling Apple’s Signature Designs.**

Apple’s design philosophy has always championed thin, light, and aesthetically minimalist devices. However, this pursuit of sleek form factors was frequently at odds with the thermal realities of Intel’s x86 chips. As Intel struggled with heat management and power consumption, particularly with its advanced process nodes, it became increasingly challenging for Apple to fit these chips into the compact, often fanless designs that define its product aesthetic.

The advent of Apple Silicon chips fundamentally resolved this long-standing tension. Designed from the ground up for superior power efficiency, these custom processors run significantly cooler than their Intel counterparts. This reduction in heat generation drastically minimizes the need for elaborate cooling mechanisms, translating into quieter machines and enabling the development of even thinner and lighter designs that perfectly align with Apple’s iconic design philosophy. It’s a symbiotic relationship where silicon and chassis are engineered in concert.

Beyond just the M-series for Macs, Apple’s commitment to thermal efficiency extends across its entire product lineup. For instance, the new iPhone 17 Pro models, powered by the A19 Pro chip, incorporate an innovative “vapor chamber” positioned precisely in concert with the System on a Chip (SoC). This advanced cooling solution, laser-welded into the forged unibody aluminum design, ensures optimal heat dissipation. This meticulous attention to thermal management allows Apple to push the boundaries of chip performance within increasingly compact and powerful devices, ensuring sustained performance without compromising user comfort.

10. **A Unified Architecture: Seamless Ecosystem and Developer Empowerment.**

One of the most profound strategic advantages unlocked by Apple Silicon is the establishment of a unified architecture across its entire device ecosystem. With ARM-based Apple Silicon, the fundamental silicon design that powers iPhones and iPads now extends seamlessly to Macs. This means a consistent underlying technological foundation across a vast array of devices, from the most portable smartphone to the most powerful desktop computer.

This architectural consistency translates directly into a more cohesive and predictable user experience. Furthermore, and perhaps even more significantly, it dramatically simplifies the development process for software creators. Developers can now craft applications with greater ease, knowing they will function optimally and consistently across all Apple platforms. This not only encourages innovation but also makes it far easier for iOS apps to run natively or with minimal modification on Macs, blurring the lines between mobile and desktop computing.

As Johny Srouji, Apple’s head of silicon, eloquently articulated, “Because we’re not really selling chips outside, we focus on the product. That gives us freedom to optimize, and the scalable architecture lets us reuse pieces between different products.” This singular focus on the product, combined with a scalable architecture, empowers Apple to create an intensely optimized and deeply integrated experience. It fosters a more robust and vibrant developer community, leading to a richer array of applications and functionalities across the entire Apple ecosystem, a level of synergy unattainable with disparate chip architectures.

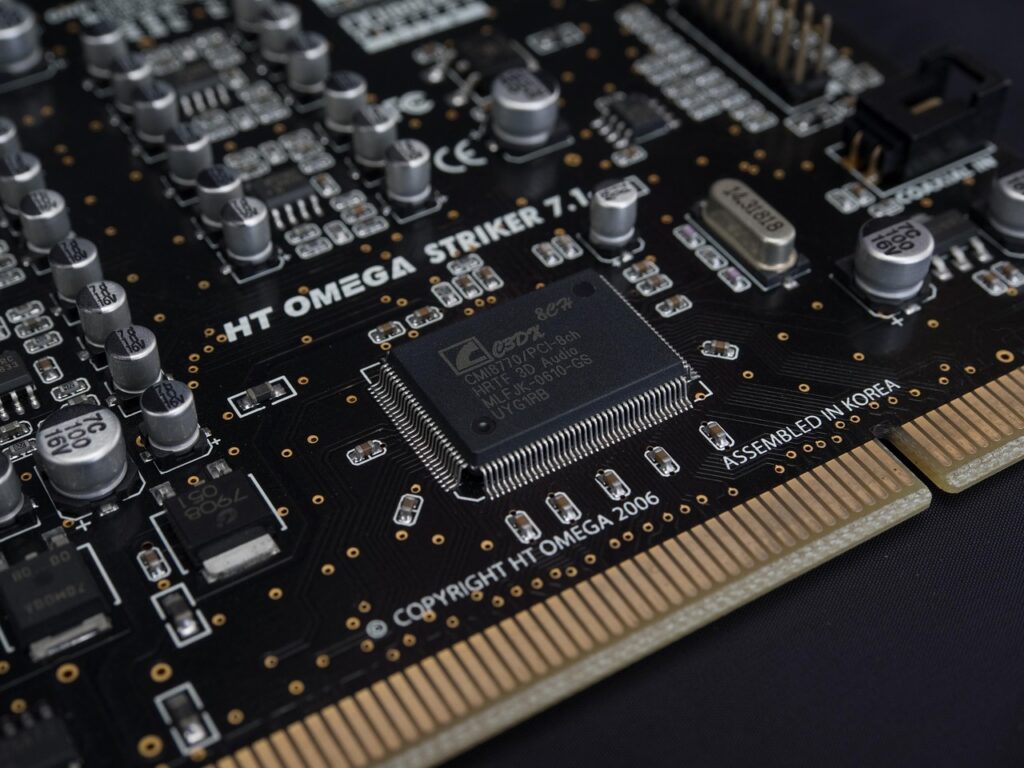

11. **Advanced Security and On-Device AI: Intelligence and Privacy at the Core.**

Apple Silicon chips are not merely about raw processing power; they are foundational to Apple’s evolving strategies in security and artificial intelligence. These custom chips come equipped with integrated security features, such as the Secure Enclave, which provides enhanced protection for sensitive user data and robust privacy safeguards. This on-chip security is a cornerstone of Apple’s commitment to user trust, ensuring that critical information remains protected at the hardware level.

Beyond security, Apple’s ARM-based chips are meticulously optimized for machine learning tasks, featuring a dedicated Neural Engine; the one in the M1, for example, is rated at 11 trillion operations per second. This on-device AI capability is crucial for features ranging from sophisticated image recognition and voice processing to advanced computational photography, all executed with remarkable speed and efficiency without relying on cloud processing.

The latest iteration, the A19 Pro chip, introduces a major architectural shift specifically to prioritize AI workloads. It boasts neural accelerators directly integrated into each GPU core, dramatically increasing compute power for artificial intelligence. Tim Millet, Apple’s vice president of platform architecture, emphasized this focus, stating, “We are building the best on-device AI capability that anyone else has.” He further explained that Apple’s prioritization of on-device AI is driven by privacy concerns, as well as the benefits of efficiency, responsiveness, and greater control over the user experience. The integration of neural processing is now achieving “MacBook Pro class performance inside an iPhone,” signaling a significant leap forward in machine learning compute, including dense matrix math capabilities previously absent in their GPUs. Features like the new front camera that uses AI to detect a new face and automatically switches to taking a horizontal photo are prime examples of this deep integration, leveraging the full complement of capabilities in the A19 Pro.

12. **Regaining Control: Unlocking Custom Innovation and Product Timelines.**

Apple’s previous reliance on Intel meant it was perpetually dependent on a third-party’s roadmap, release schedules, and chip advancements. This often led to frustrating delays, such as Intel’s repeated struggles with its 10-nanometer process, which directly hampered Apple’s ability to innovate on its own terms and release new Mac models on schedule. This external dependency was a significant constraint on a company that prides itself on meticulous planning and timely, impactful product launches.

The strategic decision to design its own ARM-based chips fundamentally restored Apple’s autonomy. Now, Apple can innovate on its own schedule, introduce new features tailored precisely to its needs, and release products with predictable timelines. This newfound flexibility allows Apple to push the envelope in terms of product capabilities and fully control the integration of its hardware and software, a strategic imperative that was compromised when relying on external, generalized solutions.

Beyond the immediate benefits of innovation and scheduling, there are also significant long-term cost advantages. While the upfront investment in custom chip design is substantial, Apple no longer needs to pay Intel’s margins for every processor used. Given Apple’s immense scale and its robust partnerships for chip fabrication, such as with TSMC, designing its chips in-house can lead to considerable long-term savings. This confluence of control, innovation, and cost-efficiency underscores why the transition to Apple Silicon is widely regarded as a strategic triumph, securing Apple’s product roadmap and reducing its reliance on external suppliers.

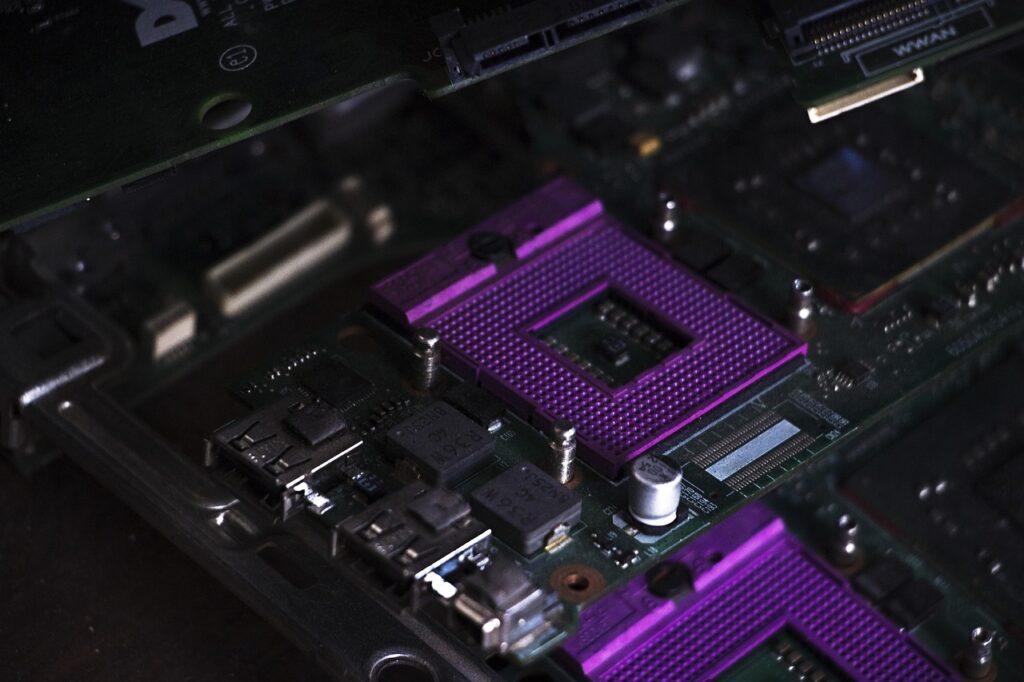

13. **The Pivotal Shift to TSMC: Securing A-Series Dominance.**

While the M-series chips revolutionized the Mac, Apple’s pursuit of custom silicon dominance actually began much earlier with its A-series chips for iPhones and iPads. This journey, too, involved a crucial pivot from one manufacturing partner to another. Initially, after Intel famously declined to manufacture iPhone processors believing the smartphone market was too small, Apple turned to Samsung, one of the few companies capable of producing high-performance smartphone chips in the early days. Samsung manufactured Apple’s processors from the first iPhone until 2014, with both companies gradually moving to smaller process nodes like 32nm for the iPhone 5’s A6 processor.

The turning point came in 2014 with the A8 chip, which TSMC (Taiwan Semiconductor Manufacturing Company) manufactured on its 20nm process, ending Samsung’s run as Apple’s sole foundry. The following year, Apple split A9 production between Samsung’s 14nm and TSMC’s 16nm FinFET processes, giving it a direct comparison between the two suppliers. That comparison set the stage for a long-term partnership: from the A10 Fusion in 2016 onward, Apple’s A-series chips have been built by TSMC alone.

Apple’s decision to exclusively partner with TSMC was driven by several compelling advantages. TSMC consistently provided better usable chip yields per wafer, directly reducing Apple’s manufacturing costs. Furthermore, TSMC’s advanced FinFET (Fin Field-Effect Transistor) technology offered significantly improved power efficiency, a critical factor for extending battery life in iPhones. TSMC also proved to be more aggressive in moving to smaller process nodes, enabling Apple to develop faster, more efficient chips ahead of the competition. Critically, unlike Samsung, which competes directly with Apple in smartphones, TSMC is a pure-play foundry that sells no devices of its own, so its interests do not conflict with those of its customers.

The close collaboration between Apple and TSMC allowed for deep custom optimization of chip designs, consistently resulting in industry-leading performance for the A-series chips. This partnership has been instrumental in the rapid evolution of iPhone processors, from the 20nm A8 to the A17 Pro, the first iPhone chip built on a 3nm process, and its successors. Today, Apple relies solely on TSMC for its A-series chips, benefiting from the foundry’s continuous advancements in manufacturing processes.

Looking ahead, Apple’s exclusive reliance on TSMC underscores the foundry’s continued dominance in chip manufacturing. With TSMC moving towards 2nm process nodes by 2025, this partnership is set to continue pushing the boundaries of mobile computing. While competitors like Intel and Samsung continue to strive to match TSMC’s process technology, Apple’s strategic choice has undoubtedly given its iPhones a significant technological edge, securing its lead in smartphone performance and power efficiency for the foreseeable future.

14. **Reshaping the Semiconductor Landscape: Broader Industry Ripple Effects.**

Apple’s series of monumental chip transitions, particularly its break from Intel and its strategic partnership with TSMC, sent powerful ripple effects across the entire technology industry. What was once a relatively stable landscape, dominated by a few key players, has been profoundly reshaped, highlighting a broader shift away from general-purpose solutions towards highly customized silicon.

The most immediate and significant impact was felt by Intel. Losing Apple as a major customer, especially given the scale and prestige of Apple’s Mac line, was a tremendous blow. Intel has since struggled to recover, facing revenue declines, a measurable drop in market share, and significant organizational challenges as it endeavors to regain its footing in a rapidly evolving semiconductor landscape. It was a stark reminder of the perils of complacency and the strategic value of a major design win.

Conversely, other semiconductor companies have capitalized on Intel’s faltering dominance. AMD, a long-standing rival, has seen significant growth in market share, while TSMC has solidified its position as the undisputed leader in advanced chip manufacturing, attracting high-profile customers due to its reliability and cutting-edge technology. Even in the realm of wireless and modem chips, where companies like Qualcomm and Broadcom historically held sway for iPhones, Apple is now poised to phase them out with its own N1 wireless chip and C1X modem, the latter building on a modem effort that accelerated with the 2019 acquisition of the majority of Intel’s smartphone modem business. This internal development, as Ben Bajarin, CEO of Creative Strategies, noted, allows Apple to control performance and power, leading to better battery life.

Perhaps the most compelling ripple effect is the “Shift Towards Custom Silicon” that Apple has inspired across the tech industry. Witnessing Apple’s success, other tech giants like Google and Microsoft have begun designing their own processors. Google’s Tensor chips for its Pixel phones and Microsoft’s initiatives in custom silicon for its Surface line exemplify this trend, as companies seek similar advantages in performance, integration, and control over their product roadmaps. This indicates a clear recognition that deep vertical integration, from chip design to software, is the new paradigm for competitive advantage.

Ultimately, Apple’s silicon journey has not only redefined benchmarks for performance, efficiency, and integration but has also underscored a broader truth: the era of general-purpose chips is waning. Companies are increasingly turning to custom solutions tailored precisely to their specific needs. Apple, with its unwavering focus on performance per watt and seamless ecosystem integration, has not just set a new standard for consumer technology; it has charted a strategic course that others are now racing to follow, fundamentally altering the future of computing.

***

The rise of Apple Silicon, from the groundbreaking M1 to the innovative M4, has not merely transformed Apple’s product lines; it has irrevocably reshaped the entire tech landscape. Apple’s bold decision to break away from Intel and invest deeply in developing its own custom chips represents far more than a technical upgrade. It is a testament to an unwavering commitment to controlling its destiny, a strategic masterstroke that has allowed the company to craft products that push the very boundaries of performance, energy efficiency, and seamless innovation. This narrative of audacious strategic pivots, from the early days of external reliance to the current era of custom silicon dominance, highlights a company that continuously redefines what’s possible.

As the industry continues its relentless evolution, Apple is poised to remain at the forefront, not just adapting to change, but actively shaping the future of computing and intelligent devices. This pivotal transition marks not merely the end of an era for Intel’s long-standing dominance in a key sector, but unequivocally the beginning of a vibrant new one for Apple—an era where the possibilities, powered by its bespoke silicon, truly seem endless.