Let’s face it, we all love a good survival story, don’t we? There’s something undeniably captivating about tales of people pushing the limits, facing down danger, and somehow making it out alive. But sometimes, the thrilling narratives we consume in movies, books, and even on reality TV get things spectacularly wrong, planting seeds of misinformation that could actually put us in more danger if we ever find ourselves in a sticky situation.

These aren’t just minor inaccuracies; we’re talking about potentially life-threatening misconceptions that have persisted despite easy access to real information. Why do they stick around? Maybe it’s the drama, the shock value, or perhaps just well-meaning but misinformed repetition. Whatever the reason, it’s time to set the record straight on some of the most pervasive myths out there, not just about wilderness survival, but about other critical areas of safety and history where common knowledge fails us.

We’ve enlisted the help of true experts, like survival instructor Jessie Krebs, and delved into historical research to separate the helpful truths from the harmful fictions. Get ready to toss aside some long-held beliefs, because understanding what doesn’t work is just as crucial as knowing what does when facing life-or-death decisions or simply understanding the world around us. Let’s jump in and start debunking!

1. **Myth: Moss only grows on the north side of trees.**

The classic “nature’s compass”! The story sounds so simple and intuitive: moss prefers shady, damp spots, and in the Northern Hemisphere, the north side of a tree gets less direct sun. So, if you’re lost, just find some moss and head south, right? Unfortunately, this is one of those urban legends that just isn’t reliable in the real world.

While the scientific principle behind it makes sense, the conditions moss needs – shade and dampness – can be created by many other factors besides the sun’s position. Other trees can cast shade, slopes can alter moisture retention, and the overall environment plays a huge role. Moss can grow on any side of a tree or rock where those conditions exist, regardless of which way it faces. Relying solely on moss to find your way could lead you even further astray.

Survival expert Jessie Krebs and many others agree: this bit of folklore, while charming, is simply not accurate enough to use as a reliable navigation tool when your life might depend on it. It’s a romantic idea perpetuated in media, but it falls apart when faced with the varied conditions of nature. Better to learn actual navigation techniques!

2. **Myth: If you’re dying of thirst, you should drink your own pee.**

This one seems to pop up everywhere, especially in dramatic survival scenarios on screen. The idea is that urine is mostly water, so it must be a source of hydration in a pinch. It’s visually shocking and memorable, which is probably why it persists, but it’s a deeply dangerous misconception that survival experts emphatically debunk.

Jessie Krebs is clear: “No, you should not” drink your own urine. She explains that people who survived after drinking urine did so in spite of it, not because of it. It might seem logical because urine is about 95 percent water, but consider seawater, which is 96 percent water. We know drinking seawater is deadly because of its high salt content; your body has to use more water from your tissues to eliminate the excess salt, dehydrating you faster.

The same principle applies to urine, albeit with other waste products, such as urea, doing the damage. Your body produces urine specifically to get rid of these substances. Ingesting them forces your body to spend its precious water reserves flushing them out all over again. You’re essentially taking “two steps back” for every “step forward” you thought you were making. It’s a net loss that also puts harmful waste back into your system. Don’t do it.

3. **Dangerous Action: Eating food without a water source in a survival situation.**

This might sound counterintuitive. Aren’t you supposed to eat whatever you can find to keep your energy up? While food provides calories, if you don’t have a reliable water source, eating can actually harm you more than help you. This is a critical point that often gets overlooked in the rush to find sustenance.

Our digestive system is incredibly water-intensive. It needs a significant amount of hydration to break down food, absorb nutrients, and eliminate waste properly. If your body is already dehydrated, introducing food forces it to divert water from other essential functions to the gut, exacerbating your overall dehydration much faster. Think about it: digestion requires pulling in water and juices, separating out waste, and ensuring enough moisture for that waste to pass through.

Jessie Krebs uses this point to reinforce the case against drinking urine: if you shouldn’t even eat when you lack water, because digestion dehydrates you, then it makes even less sense to drink something your body is actively trying to expel. The fundamental rule is to secure your water source first. Only when you have adequate hydration should you consider eating, as doing so without water will accelerate your body’s decline.

4. **Myth: Fire is your first priority when cold, wet, or miserable in a survival scenario.**

Popular media often portrays starting a fire as the immediate go-to survival skill, the magical solution to cold, wet, and despair. While fire is undeniably a crucial tool for warmth, signaling, and purifying water, survival experts stress that it is not your first line of defense. Jumping straight to fire can be a waste of critical energy and time, especially in challenging conditions.

Jessie Krebs ranks survival priorities differently. Your absolute first lines of defense against the elements are clothing and shelter. You need to maximize the insulation your clothing provides – stuff it with dry leaves or other materials if necessary to create dead air space. Your second priority is getting a shelter established. Most creatures on the planet survive perfectly well with just their natural “clothing” (fur, feathers, scales) and some form of shelter.

Trying to start a fire in nasty weather – howling wind or pouring rain – is incredibly difficult, even for experienced hands. You’re trying to coax a tiny, fragile flame into existence while exposed to the very conditions you’re fighting. Even if you succeed, standing by a fire in a storm means one side of you is warm while the other gets blasted by wind and rain. A sturdy shelter protects you while you work on a fire, and it may even be enough on its own to get you out of immediate danger, letting you rest and recover before tackling the more complex task of firecraft. Think of starting a fire as being like having a baby in a snowstorm: you need a protected space first.

5. **Myth: If bitten by a venomous snake, you should cut the wound and suck the venom out.**

Ah, the classic Western movie trope! Hero gets bitten, sidekick whips out a knife, makes an incision, and dramatically sucks out the poison. It looks decisive and action-packed on screen, but in reality, this method has been thoroughly debunked and can cause more harm than good. It’s a persistent myth that needs to die out for good.

Jessie Krebs explains the reality: snake fangs inject venom deep into tissue, often muscle, where it’s quickly picked up by your blood flow and lymphatic system and circulated away from the bite site. The chances of being able to extract a significant, life-saving amount of venom by cutting and sucking are virtually zero. Your blood is moving too fast, and the venom is dispersed rapidly. Cutting the wound, meanwhile, only introduces more damage, increasing the risk of infection and tissue injury.

The best course of action if bitten by a venomous snake (like a rattlesnake in the US) is generally to remain calm and minimize movement to keep your blood pressure down, which slows the spread of venom. Get yourself or the victim to medical help as quickly and calmly as possible. Taking a picture of the snake, if it’s safe to do so, can help medical professionals identify the type of venom and administer the correct antivenom. Marking the edge of the swelling, and noting the time, can also help them see how quickly it is spreading. Panicking or attempting the “cut and suck” method will only make things worse.

6. **Myth: Skin cancer is something that only affects certain people, like the elderly or those with fair skin, or only happens in sunny climates.**

This is a constellation of dangerous misconceptions surrounding skin cancer, particularly melanoma, its deadliest form. Many people harbor beliefs that they are somehow exempt based on their age, ethnicity, skin tone, or where they live. The reality is far more indiscriminate, and these myths are literally costing lives by preventing people from taking precautions and checking their skin.

Contrary to these beliefs, no one is truly exempt from skin cancer. Non-melanoma types like basal cell carcinoma (BCC) and squamous cell carcinoma (SCC), as well as melanoma, can develop at any stage of life, including adolescence. No age group, ethnicity, skin tone, or geographic location confers immunity. While the risk *does* increase with age and fair skin is a known risk factor, young people, individuals with darker skin tones, and those living in less sunny climates are absolutely still at risk. In fact, melanoma is one of the leading cancers among young women aged 20–24 in the UK, and it is the nation’s most rapidly increasing cancer type overall.

Susanna Daniels, CEO of UK charity Melanoma Focus, stresses the importance of raising awareness across *all* demographics precisely because “so many prevailing myths and misconceptions surrounding skin cancer… are stopping people from protecting themselves.” People with darker skin tones, for example, may tan easily and rarely burn, which can give them a false sense of security. However, signs of skin cancer can be harder to detect on darker skin, and melanoma can present differently, often appearing in areas that get minimal sun exposure, such as the palms, the soles of the feet, or under the nails (a form called acral lentiginous melanoma, or ALM). This can lead to later diagnoses and poorer outcomes, as tragically reflected in lower survival rates for non-White ethnic groups compared with White patients. Education is crucial because, while dangerous, skin cancer is highly treatable when caught early. But you have to know it *can* happen to you, and know what to look for, regardless of who you are or where you live.

Alright, we’ve busted some serious myths about wilderness survival and even started chipping away at dangerous misconceptions about health. But the world of misleading information is vast, and sometimes the most dangerous untruths aren’t about dodging snakes or staying warm, but about history and even our own public health. Let’s keep going and shine a light on a few more widely held beliefs that can have real-world consequences, separating dusty Hollywood narratives from the facts we need to know.

7. **Myth: The American West was an inherently lawless, anarchic hotbed of violence.**

Movies, books, and television have painted a vivid picture of the 19th-century American West as a chaotic free-for-all, a place where law and order were virtually nonexistent, and disputes were settled primarily through gunfights in the dusty streets. It’s a powerful narrative, full of lone sheriffs battling ruthless gangs and everyday life teetering on the brink of anarchy. But what does the historical research actually say? It turns out this widely accepted image is largely a myth.

Historians who have dug into the reality of the era often find that civil society in the American West was surprisingly orderly and much less violent than modern American cities. Historian W. Eugene Hollon, for example, argued that the western frontier was “a far more civilized, more peaceful and safer place than American society today.” This isn’t to say there was *no* violence, but the pervasive, anarchic violence depicted in popular culture simply doesn’t hold up when you examine the historical record. The picture was far more nuanced and, frankly, less dramatic than the silver screen suggests.

So, if government was sparse or absent in many areas, how was order maintained? This is where the historical findings become particularly interesting. Instead of anarchy, various *private* agencies stepped up to fill the void, providing the framework for an orderly society in which property was protected and conflicts were resolved, often without resorting to violence. These weren’t official government bodies but organizations formed by the people living there: land clubs, cattlemen’s associations, mining camps, and wagon trains. These voluntary associations created their own rules, settled disputes, and enforced property rights, showing that order didn’t depend solely on a government monopoly on force.

8. **Myth: Violence in the American West was caused by a lack of government and reflects a “frontier heritage” of violence.**

Stemming from the previous myth, the idea persists that because the West was supposedly lawless and violent, this lack of governmental control was the *cause* of the violence. Furthermore, some theories even blame this alleged frontier violence for contributing to violence in the United States today, suggesting a “frontier heritage” of lawlessness and aggression. It’s a tidy explanation that links the past to the present, but it completely misses the mark on the actual historical drivers of significant conflict in the region.

The historical research challenges this causal story. Instead of an inherent tendency toward violence unleashed by the absence of government, the evidence suggests that the *introduction* and *expansion* of the U.S. government’s presence was the real catalyst for a culture of significant violence in the American West. The private mechanisms developed by civil society were often quite effective at minimizing conflict and maintaining order among settlers themselves. Land clubs had constitutions to define and protect property rights, arbitrating disputes to minimize violence. Wagon trains established judicial systems in which ostracism or banishment, not violence, was usually sufficient to correct behavior. Mining camps formed contracts, hired “enforcement specialists,” and developed laws that resulted in very little violence and theft. Cattlemen’s associations hired private protection agencies to deter rustling. These systems worked, suggesting that civil society was quite capable of regulating itself.

If civil society was managing relatively well, where did the major violence come from? The historical evidence points overwhelmingly to U.S. government policies, particularly toward the Plains Indians, as the primary source of the real culture of violence in the latter half of the 19th century. It wasn’t the absence of government that spurred the most brutal conflicts, but specific, aggressive government actions made possible by a post-Civil War shift in federal policy. This flips the common narrative on its head: the significant violence wasn’t the result of rugged individualism run amok, but a consequence of state-sponsored expansion and aggression.

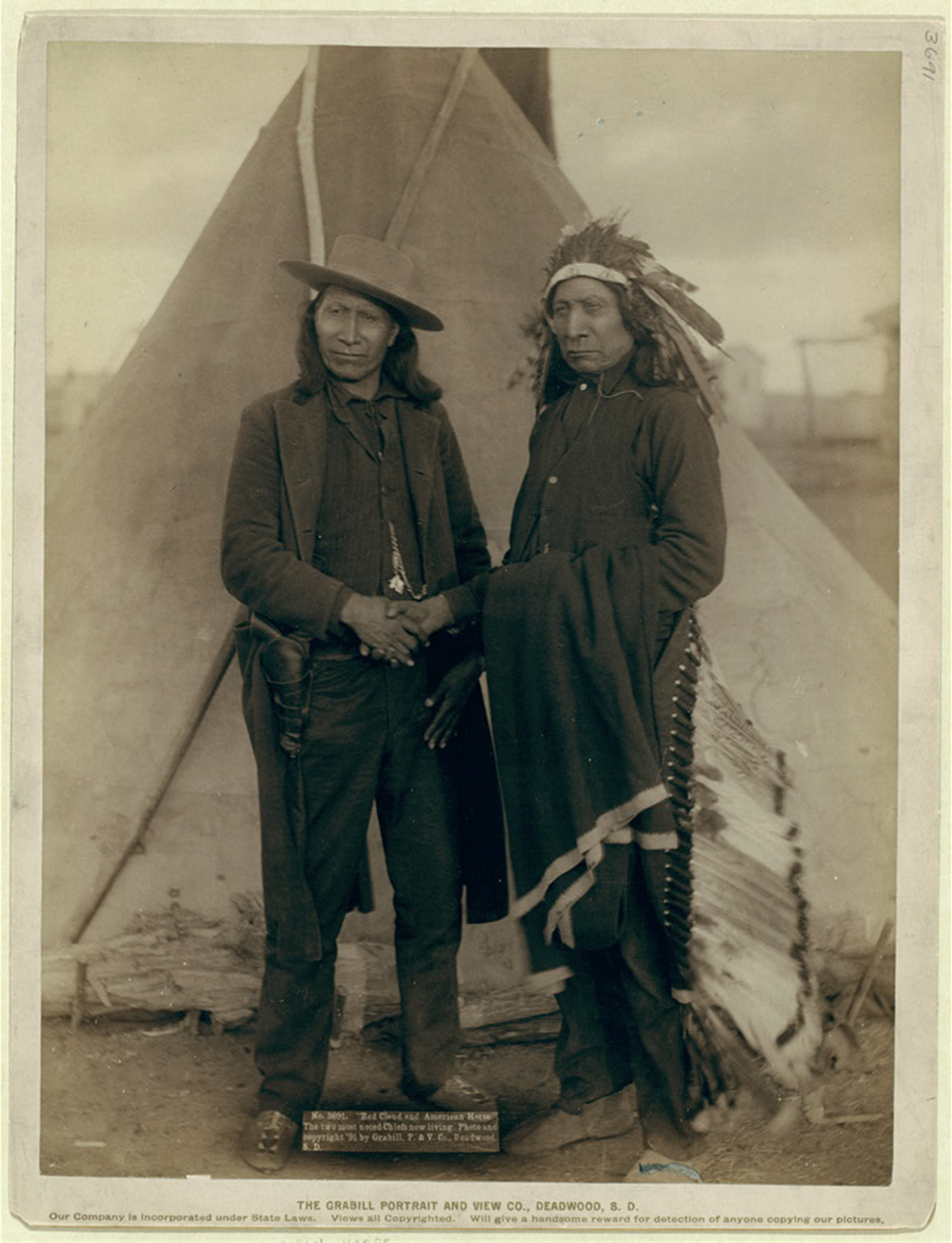

9. **Myth: White settlers were constantly at war with Native Americans in the West.**

This misconception often goes hand in hand with the “violent West” narrative, portraying relations between white settlers and Native Americans as perpetual, inevitable warfare. The usual image is one of skirmishes, raids, and constant conflict driven by irreconcilable differences and competition for land. While conflict certainly occurred and tragically escalated, the idea that it was non-stop war from the start is an oversimplification that ignores a significant part of the historical record.

Historical accounts reveal a more complex initial relationship between European settlers and Native American tribes. Early interactions often involved trade and cooperation. The fur trade, in particular, encouraged peaceful relations because war was costly and trade was profitable, and many early leaders recognized the advantage of staying on friendly terms. For a significant period, negotiation was the predominant means of acquiring land from Indigenous peoples, and by the early 20th century substantial sums had been paid for such land. This suggests that negotiated transactions, not just outright conquest, were common, particularly in the first half of the nineteenth century.

The shift toward increased conflict and violence, particularly after the Civil War, correlates strongly with a change in U.S. government policy. Before 1865, militias in the West were often made up of laborers or tradesmen. The introduction of a standing army after the war created a class of professional soldiers whose livelihood depended on warfare. This standing army, combined with government subsidies to railroad corporations and other politically connected businesses, allowed white settlers and corporations to socialize the costs of seizing Indigenous lands through violence supplied by the army. That shifted incentives away from peaceful trade and toward violent seizure, fundamentally altering the relationship between settlers and Native Americans and escalating conflict. The “constant war” narrative, in other words, doesn’t fit the whole period, and it often misattributes the cause of the escalation.

10. **Myth: Suicide is a rare event that only affects a few isolated individuals.**

Many people think of suicide as an uncommon tragedy, something that happens only to a small, specific group, perhaps those with severe and obvious mental health conditions. This misconception can lead to a dangerous lack of awareness, preventing us from recognizing the signs, offering support, or understanding the scale of the problem. The reality is starkly different: suicide is a serious, widespread public health issue affecting individuals across all demographics.

The statistics paint a sobering picture that thoroughly debunks the idea of suicide being rare. Suicide was responsible for 49,316 deaths in the United States in 2023 alone, roughly one death every 11 minutes. Yet deaths are only the most visible part of the problem. The number of people affected is vastly larger: in 2022, an estimated 12.8 million adults seriously thought about suicide, 3.7 million planned an attempt, and 1.5 million actually attempted it. Suicidal thoughts and behaviors are experienced by millions, far from being confined to a small, isolated group.

Suicide also affects people of all ages. In 2023, it ranked among the top 8 leading causes of death for people aged 10–64 and was tragically the second leading cause for young people aged 10–34. Nor is risk confined to one specific, easily identifiable group: elevated rates show up across many different populations, varying by race/ethnicity (with non-Hispanic American Indian/Alaska Native and non-Hispanic White populations having the highest rates), age, location (rural areas), occupation (mining, construction), and identity (LGBTQ+ youth). Because its impact is this widespread, suicide is a concern for everyone, not just a select few, which is why broad awareness and prevention efforts are crucial.

11. **Myth: Suicide risk is confined to obvious cases of severe mental illness.**

While mental health conditions like depression and anxiety are significant risk factors for suicide, focusing solely on this connection leads to another dangerous misconception: the belief that only individuals with clear, diagnosable, and often visible severe mental illness are at risk. This narrow view can cause us to overlook warning signs in people who don’t fit this stereotype, or to fail to address other critical risk factors that contribute to suicidal thoughts and behaviors.

The reality is that suicide is complex, and many factors beyond obvious mental illness can significantly increase a person’s risk, because vulnerability stems from a wide range of personal experiences and circumstances. For example, people who have experienced violence, including child abuse, bullying, or sexual violence, have a higher suicide risk. This highlights the impact of trauma and adverse life events, which may or may not lead to a formal mental health diagnosis but are clearly linked to increased risk.

Other risk factors are demographic and situational rather than purely psychological. They include living in rural areas, working in certain industries like mining and construction, and identifying as lesbian, gay, or bisexual; young people in this group report suicidal thoughts and behaviors more often than their heterosexual peers. Being connected to family and community support and having easy access to healthcare are protective factors, which means that a lack of support and barriers to care also play a role. This broader understanding of risk, encompassing trauma, social support, occupation, identity, and environment, is crucial because it lets us recognize vulnerability and intervene more effectively in diverse situations, rather than focusing narrowly on obvious presentations of mental illness.

Understanding dangerous myths, whether they’re about navigating the wilderness, protecting our health, or understanding history and public health crises like suicide, is about more than just correcting facts. It’s about equipping ourselves and our communities with the knowledge needed to make better decisions, challenge harmful stereotypes, and ultimately, stay safer and build a more informed world. By replacing misconception with reality, we’re better prepared for whatever challenges life throws our way, from a surprise downpour to supporting someone in need. It’s a lot to take in, but getting the truth out there is the vital first step in protecting ourselves and each other.