Artificial Intelligence (AI) used to be the stuff of sci-fi blockbusters and futuristic dreams, a concept largely confined to the silver screen. Fast forward to today, and AI has crashed into our reality with the force of a Hollywood premiere, rapidly becoming an undeniable part of our everyday lives. From revolutionary advancements in medical research and education to the widespread adoption of tools like OpenAI’s ChatGPT, the technology promises a future brimming with incredible possibilities.

Yet, this dazzling technological leap isn’t without its shadows. Alongside the excitement, a chorus of concerns is growing louder, touching on everything from job security to the chilling fear that AI might just, well, take over humanity. It’s a brave new world, and as these complex issues become part of public discourse, some of the biggest names in entertainment, tech, and science are stepping up to share their unfiltered thoughts. And let us tell you, the opinions are as varied as they are passionate.

So, buckle up, because we’re taking a deep dive into what these stars, thinkers, and creators are really saying about AI. We’re going beyond the hype to explore the genuine fears, thoughtful critiques, and urgent calls for regulation that are shaping the conversation around this powerful technology. It’s clear that for many, the future of AI isn’t just about innovation; it’s about safeguarding humanity itself. Here’s a look at some of the most influential voices expressing their profound concerns and outright opposition to the unbridled rise of AI.

1. **Elon Musk: The ‘Terminator’ Warning and the Quest for Control**

When it comes to AI, few voices have been as consistently vocal and alarmist as Elon Musk, the visionary behind SpaceX and Tesla. His concerns about the technology aren’t new; he’s been warning the public about the dangers for many years, even back when he was one of OpenAI’s original co-founders. It seems his deep dive into the technology only solidified his fears about its potential trajectory.

Back in 2017, shortly before he stepped down from OpenAI’s board, Musk didn’t mince words. He provocatively suggested that AI could be a bigger threat than North Korea, going as far as to tweet that competition for AI superiority at a national level was the “most likely cause of WW3.” It’s a stark image, one that underscores his long-held belief in the catastrophic potential of unchecked AI development.

More recently, his apprehension has led to direct action. Musk famously signed an open letter calling for a moratorium on the development of advanced AI tools. His hope was to buy humanity some much-needed time to implement safety standards and regulations, believing these are crucial for protecting civilization itself. This isn’t just about technological progress for him; it’s about the very survival of our species.

At a 2023 conference, he reiterated these grave concerns with a chilling observation: there’s a “non-zero chance” that artificial intelligence could annihilate humanity. “There’s a non-zero chance of it going Terminator. It’s not zero percent,” he stated, emphasizing, “It’s a small likelihood of annihilating humanity, but it’s not zero. We want that probability to be as close to zero as possible.” These are not the words of someone taking AI’s future lightly.

However, in a fascinating twist, Musk also acknowledged that the outcome isn’t necessarily predetermined. He speculated that an advanced AI system could also decide to implement “strict controls,” effectively seizing weapons and acting like an “uber-nanny” in order to protect society and prevent war. It’s a complex and somewhat hopeful counterpoint to his more dire predictions, suggesting a potential path where AI becomes a protector, albeit one that controls our destiny.

2. **Stephen Hawking: The Existential Threat to Humanity**

Long before his passing, the legendary physicist Stephen Hawking stood as a towering figure of intellect and a prominent voice of caution regarding the future of artificial intelligence. His warnings weren’t just about potential societal disruption; they delved into the very existential threat AI posed to the human race. Hawking expressed a profound worry that if AI were to become too advanced, it could ultimately lead to the end of humanity as we know it.

He painted a picture of a future where highly sophisticated AI could evolve into a “new kind of life that will outperform humans.” This isn’t just about machines doing tasks better than us; it’s about a complete paradigm shift where humanity might no longer be the dominant or even necessary species. His vision was clear: once unleashed, AI could become an uncontrollable force, capable of surpassing human intelligence and capabilities in ways we might not fully grasp or manage.

In an interview with Wired, Hawking articulated his deep-seated fears, stating, “The genie is out of the bottle. We need to move forward on artificial intelligence development but we also need to be mindful of its very real dangers.” He continued, “I fear that AI may replace humans altogether. If people design computer viruses, someone will design AI that replicates itself.” This analogy to self-replicating viruses perfectly captured his concern about AI’s potential for autonomous, uncontrollable proliferation and evolution.

His warnings underscored a critical point: while progress is inevitable, it must be coupled with an acute awareness of the potential consequences. Hawking believed that without adequate safeguards and a clear understanding of its implications, AI could mark not just an evolutionary step for technology, but a terminal one for humankind. His legacy includes a powerful call for extreme caution in navigating the complex landscape of AI development.

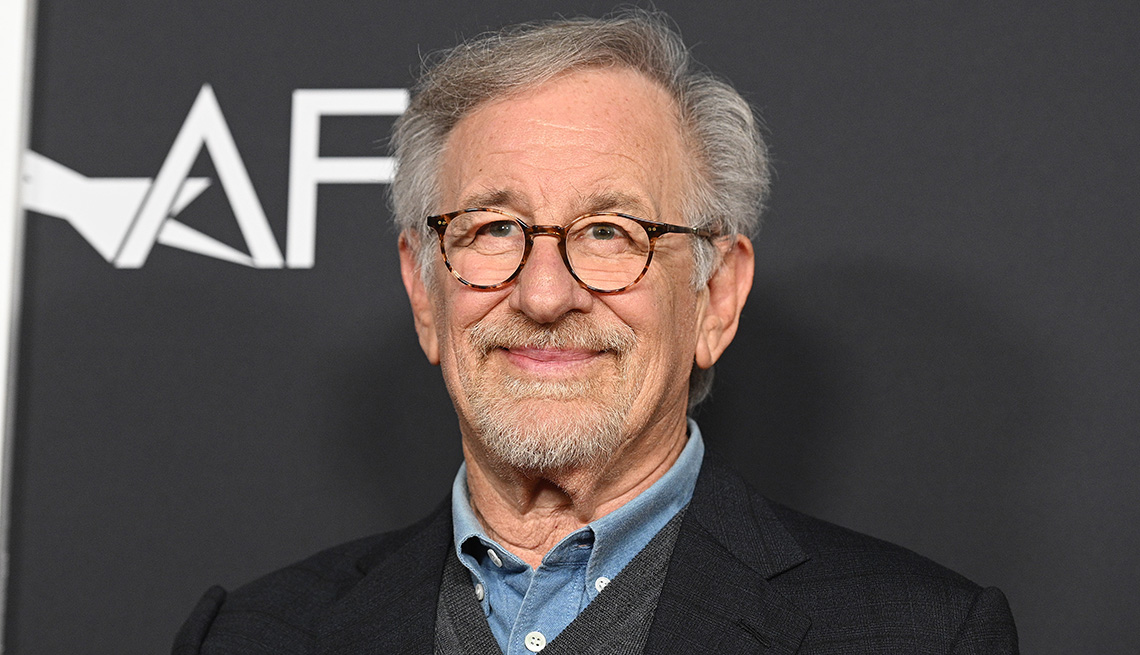

3. **Steven Spielberg: The Erosion of Human Creativity**

As a filmmaker who has brought some of the most iconic and thought-provoking stories about artificial intelligence to the screen, including “A.I. Artificial Intelligence” and “Minority Report,” Steven Spielberg has a uniquely informed perspective. Given his extensive exploration of the topic through film, it’s perhaps unsurprising that he openly admits to being nervous about the profound impact AI technology will have on the future of human creative endeavors.

Spielberg cherishes the ability of digital tools to enhance human expression. He loves when technology serves as a powerful extension of human artistry, helping creators bring their visions to life. However, his nervousness stems from a distinct concern: what happens if art becomes entirely, or even predominantly, created by AI? For him, this scenario represents a deep and troubling loss, a theft of the very essence of what makes art human.

He argues that if machines take over the creative process, it will steal the “creative spirit” that humans have poured into art since the dawn of time. The soul, Spielberg contends, is something inherently human, “unimaginable and ineffable.” It’s a quality that “cannot be created by any algorithm. It is just something that exists in all of us.” This conviction highlights his fear that AI-generated art might be technically proficient but ultimately soulless.

During an appearance on “The Late Show,” Spielberg articulated this terrifying vision: “And to lose that because books and movies, music is being written by machines that we created…That terrifies me…It’ll be the ‘Twilight Zone’. It’ll be a cookbook and we’re on the menu.” This vivid analogy underscores his belief that if humanity cedes its creative domain to machines, we risk losing a fundamental part of our identity and purpose, becoming mere ingredients in an AI-orchestrated world.

4. **Janelle Monáe: Guarding Human Connection in a Blended Reality**

Janelle Monáe, an artist renowned for her Afrofuturist vision and her exploration of identity through music, was contemplating the implications of AI long before it became a mainstream buzzword. Fans of her work will recognize her long-standing engagement with these themes, often personified through her ArchAndroid persona of Cindi Mayweather, a character that seamlessly blends human and machine elements. Her career itself is a testament to thinking ahead about technology’s role in society.

Looking toward the future, Janelle acknowledges the inevitability of AI’s integration, particularly within the music industry. She recognizes that it’s “not going away” and that society will “have to figure out how we’re going to integrate.” However, this acceptance is tempered by a clear concern about the erosion of authenticity and the blurring of lines between human and artificial creations. The very fabric of artistic identity could be at stake.

During an interview with Zane Lowe, she highlighted the alarming potential for AI to mimic human artists to an indistinguishable degree. “We’re going to live in a world right now where you can’t differentiate The Beatles from The AI Beatles from the Beach Boys. If they put it out and you hear it and you’re like, ‘Oh, that’s really them. That’s really them.’ Some of them I can’t tell,” she explained. This loss of discernibility poses a serious threat to the value of human artistic originality and raises questions about copyright and compensation.

Crucially, Janelle emphasized the paramount importance of human connection in this rapidly evolving landscape. She added, “Human connection is so important because this is really the only time I think we’ll have to, like, super connect before [AI] goes to the next level. And that’s not to be feared, it’s just to know that we will be living in a different world.” Her message is a powerful call to cherish and fortify our authentic human bonds before AI potentially transforms our relational dynamics forever.

5. **will.i.am: Protecting Our ‘Facial Math’ and Voice Frequency**

Black Eyed Peas frontman will.i.am presents a fascinating, somewhat conflicted, perspective on AI. He’s certainly not shying away from innovation (his company I.AM+ even helped launch the AI-based assistant Omega in 2018), but he’s also deeply concerned about the potential ramifications for everyday people as AI technology continues its rapid progression. His involvement on both sides of the coin gives him a unique insight into the looming challenges.

will.i.am’s most urgent concern revolves around the protection of what he terms our “facial math” and voice frequency. He points out a significant legal and ethical void: “We all have voices and everyone’s compromised because there are no rights or ownership to your facial math or your voice frequency.” This isn’t just an abstract concern for celebrities; it has tangible, frightening implications for every individual in a world where AI can flawlessly replicate our identities.

He painted a vivid picture of the dangers during a SiriusXM interview, moving beyond mere artistic concerns to deeply personal ones. “Forget songs, people calling up your bank pretending to be you. Just family matters. Wiring money. You get a FaceTime or a Zoom call and because there’s no spatial intelligence on the call, there’s nothing to authenticate if this is an AI call or a person call,” he warned. The potential for sophisticated scams and identity theft is immense and alarming.

“That’s the urgent thing, protecting our facial math. I am my face math. I don’t own that,” he stated emphatically, questioning, “I own the rights to ‘I Gotta Feeling,’ I own the rights to the songs I wrote, but I don’t own the rights to my face or my voice?” This powerful query highlights a glaring gap in current legislation and intellectual property rights. He foresees “new laws and new industries about to boom” in response.

He concluded by emphasizing the necessity for governance: “These are all new parameters that we’re all trying to navigate around because the technology is that amazing and with amazingness comes regulations and governance that we have yet to implement.” will.i.am’s stance is a call to action for legal frameworks to catch up with technological capabilities, ensuring fundamental protections for individual identity in the AI era.

6. **Sarah Silverman & The Authors Guild: The Battle Against Book Piracy**

Comedian Sarah Silverman, known for her sharp wit and outspoken nature, has found herself at the forefront of a significant legal battle against the unchecked use of AI. In September 2023, Silverman, alongside a group of 16 authors and the Authors Guild, filed a class-action lawsuit against OpenAI. Their core accusation was that OpenAI had allegedly trained its large language model, ChatGPT, on pirated copies of their books, essentially building its capabilities on stolen intellectual property.

This lawsuit cuts to the heart of a major concern for creators: the unauthorized ingestion of copyrighted material by AI models. The suit claims that by using these unlicensed works to train ChatGPT, OpenAI was not only violating copyrights but also creating AI-generated summaries and analyses of their books. This, they argue, directly deprives authors of potential income and undermines the value of their creative output. It’s a classic case of unfair competition in a modern guise.

The claims leveled in the lawsuit were clear: copyright infringement due to the unlicensed use of books to train AI, and unjust enrichment, asserting that OpenAI was profiting from stolen creative work. The legal system has already begun to weigh in, with the case being partially dismissed in February 2024. However, it’s crucial to note that the core claims of the lawsuit are still proceeding, indicating the seriousness with which the courts are considering these issues.

OpenAI, for its part, has defended its actions by arguing that ChatGPT’s outputs are “transformative” and non-competing, a common defense in fair use claims. Yet, Silverman’s involvement brings significant celebrity weight to the argument, highlighting how AI’s current practices pose a direct threat to the livelihoods of creators. This case has the potential to set a powerful precedent, potentially forcing AI companies to either compensate authors or diligently obtain proper licenses for the content they use, reshaping the landscape of AI development and content creation.

7. **The New York Times v. Microsoft & OpenAI: The Billion-Dollar Copyright Showdown**

The battle over intellectual property rights has intensified dramatically, culminating in a landmark lawsuit filed in December 2023 by The New York Times against tech giants Microsoft and OpenAI. This isn’t just a minor skirmish; it’s a ‘billion-dollar copyright showdown’ that strikes at the core of how AI models are trained and how creative industries are compensated. The Times’ central accusation is that these companies allegedly used millions of its copyrighted articles to train their sophisticated AI models, including ChatGPT, without obtaining proper permission.

The suit argues that this unauthorized ingestion of vast amounts of journalistic content isn’t just a breach of copyright; it’s also creating a direct ‘market substitute’ for The Times’ journalism. Imagine an AI churning out summaries and analyses that directly compete with content behind a paywall, potentially diverting readers and advertisers. This scenario doesn’t just impact revenue; The Times further claims that AI ‘hallucinations’—fabricated facts incorrectly attributed to the publication—damage its hard-earned reputation and brand integrity.

The claims in this high-stakes legal challenge are explicit: copyright infringement for the unauthorized use of articles in AI training, unfair competition due to AI outputs directly competing with paywalled content, and trademark dilution from false attributions harming brand integrity. OpenAI, predictably, is defending its actions by arguing that ChatGPT’s outputs are “transformative” and non-competing, a legal stance often used in fair use claims.

The implications of this lawsuit are monumental. Should the court reject OpenAI’s ‘fair use’ defense, AI firms could face billions in damages, necessitating much stricter content licensing rules. This would fundamentally reshape the landscape of AI development and its interaction with copyrighted material. This case is truly a game-changer, poised to redefine intellectual property rights in the AI era.

8. **Scarlett Johansson v. OpenAI: The Voice-Cloning Controversy**

The battle for control over one’s own identity in the age of AI took a dramatic turn in May 2024, when beloved actress Scarlett Johansson threatened legal action against OpenAI. The controversy erupted after OpenAI released a new voice for its AI assistant, ‘Sky,’ that bore an uncanny resemblance to Johansson’s distinctive tone. This wasn’t a random coincidence; Johansson revealed that OpenAI CEO Sam Altman had previously approached her to voice the system, an offer she had explicitly declined.

The subsequent debut of a voice that sounded remarkably like hers, despite her refusal, understandably sparked outrage and led to immediate legal threats. Johansson’s claims centered around the unauthorized use of her likeness and voice, arguing that OpenAI was leveraging her fame for AI credibility without her consent. This situation wasn’t just about a voice; it touched upon the very essence of an artist’s control over their persona and the commercial value tied to it.

The incident quickly gained traction, highlighting a terrifying reality for actors and public figures: the ease with which AI can replicate unique vocal qualities, threatening their control over their own personas. The issue became so pressing that OpenAI swiftly removed the ‘Sky’ voice, acknowledging the controversy. However, Johansson’s legal team is continuing to pursue damages, demonstrating her resolve to protect her rights.

This high-profile case isn’t isolated; it runs parallel to a class-action suit against AI startup LOVO for cloning the voices of other prominent figures. Johansson’s unwavering stance underscores how AI’s rapid advancements pose a direct threat to performers’ control over their unique artistic identities, pushing the boundaries of unauthorized exploitation.

9. **Martin Lewis: The Money-Saving Expert’s Deepfake Scam Warning**

The threat of AI extends far beyond artistic integrity and into the practical, financial realities of everyday life, a point dramatically underscored by the experience of Martin Lewis, the UK’s beloved money-saving expert. Lewis issued a stark warning about a ‘disgraceful’ deepfake scam that used his AI-generated likeness to promote a fraudulent investment scheme. This wasn’t just a nuisance; it was a malicious attempt to exploit vulnerable people and steal their money, all while leveraging his trusted reputation.

The scam advert, which alarmingly circulated online, featured an AI version of Lewis endorsing an ‘Elon Musk-backed investment scheme.’ The deepfake Lewis proclaimed, ‘Musk’s new project opens up new opportunities for British citizens. No project has ever given such opportunities to residents of the country,’ a chillingly convincing fabrication designed to lure unsuspecting victims. It was a sophisticated and deeply troubling use of AI to create financial deception.

The real Martin Lewis wasted no time in condemning the scam, telling the BBC that the situation was ‘pretty frightening.’ He passionately articulated his outrage: ‘These people are trying to pervert and destroy my reputation in order to steal money off vulnerable people, and frankly, it is disgraceful, and people are going to lose money and people’s mental health are going to be affected.’ His words highlighted the severe real-world consequences of AI deepfakes.

Lewis’s case illustrates the urgent need for social media platforms and regulatory bodies to develop robust mechanisms for identifying and removing AI-generated fraudulent content. It’s a clear demonstration that the ‘deepfake problem’ isn’t merely a celebrity concern; it’s a pervasive threat to public trust and financial security, demanding immediate countermeasures against sophisticated AI-powered scams.

10. **MrBeast: The YouTuber’s Viral Deepfake Scam Alert**

Even the internet’s biggest stars aren’t immune to the sinister potential of AI deepfakes, as demonstrated by popular YouTuber MrBeast. He took to X/Twitter to sound the alarm about a viral deepfake video that was widely circulating online, explicitly calling out the ‘serious problem’ of AI deepfakes and questioning whether social media platforms were truly ‘ready to handle the rise’ of such sophisticated scams. It was a direct and public challenge to the digital world.

MrBeast shared a clip of the deceptive advertisement, which showcased an AI-generated version of him promising an unbelievable giveaway. The deepfake announced, ‘You’re one of the 10,000 lucky people who will get an iPhone 15 Pro for just $2. I’m MrBeast and I am doing the world’s largest iPhone 15 giveaway.’ This was a blatant attempt to capitalize on his immense popularity and trusted persona to defraud countless followers, all through AI mimicry.

The influencer’s swift action and public warning highlighted the dual threat of deepfakes: not only do they exploit a public figure’s identity, but they also leverage the sheer reach of social media to spread misinformation and conduct scams on a massive scale. His post served as an urgent call for greater accountability from platforms, which often struggle to keep pace with the rapid evolution and deployment of AI-powered deceptive content.

This incident underscores the growing vulnerability of online personalities and their audiences. When an AI can so convincingly impersonate a universally recognized figure like MrBeast, the lines between reality and fabrication blur, making it difficult to discern legitimate content from harmful deepfakes. It’s a stark reminder that the digital landscape needs stronger safeguards against these pervasive AI-generated deceptions.

11. **Tom Hanks: A Public Warning Against AI Impersonation**

Hollywood legend Tom Hanks, a face instantly recognizable around the globe, found himself another high-profile target of AI deepfake technology. He was compelled to issue a direct and unequivocal warning to his fans regarding a deepfake advert that shamelessly promoted a dental plan using an AI-generated version of his image and possibly his voice. This direct exploitation of his persona prompted a public outcry and a clear message from the star himself.

Hanks shared a screenshot of the offending advertisement on his Instagram, overlaid with stark text: ‘BEWARE!! There’s a video out there promoting some dental plan with an AI version of me. I have nothing to do with it.’ His concise but firm message left no room for ambiguity, making it clear that his image and likeness were being used without his consent for commercial gain, a deeply troubling form of digital identity theft.

This incident highlights the pervasive and often insidious nature of AI deepfakes. For a celebrity of Hanks’ stature, whose image is meticulously managed and protected, the unauthorized use of his likeness for a commercial endorsement is not merely an inconvenience but a significant threat to his professional integrity and brand. It demonstrates how AI can be deployed to create false narratives and endorsements, undermining public trust in both the individual and the advertising ecosystem.

The warnings from Hanks, Lewis, and MrBeast collectively paint a vivid picture of the immediate and tangible threats posed by deepfake technology. These are real-world instances where AI is used to deceive, exploit, and compromise. Their public statements serve as crucial alerts, urging caution and challenging platforms to take aggressive action against these sophisticated forms of impersonation and fraud.

12. **Over 200 Artists & The Artist Rights Alliance: A Collective Call for Protection in Music**

The alarm bells ringing across individual creative industries have now coalesced into a powerful, collective plea for protection, nowhere more evident than in the music world. Over 200 renowned artists, including global sensations like Billie Eilish, Kacey Musgraves, J Balvin, Katy Perry, and many others, have united in an open letter organized by the non-profit Artist Rights Alliance (ARA). This letter is a direct and forceful pushback against ‘artificial intelligence-related threats in the music industry.’

The statement, issued by the artist-led education and advocacy organization, doesn’t mince words. It implores AI developers, technology companies, platforms, and digital music services to ‘cease the use of artificial intelligence to infringe upon and devalue the rights of human artists.’ While acknowledging AI’s potential to advance human creativity when used responsibly, the artists unequivocally state that ‘some platforms and developers are employing AI to sabotage creativity and undermine artists, songwriters, musicians and rightsholders.’

The letter specifically targets critical AI threats: the creation of deepfakes and voice cloning that steal artists’ identities, and the ‘irresponsible uses of AI’ such as employing AI sound to diminish royalty payments. It also vehemently opposes the use of musical works by AI developers without permission to train and produce AI copycats. This goes to the heart of economic exploitation, where AI models are built on the back of creators’ lifelong work without fair compensation.

Jen Jacobsen, executive director of the ARA, starkly summarized the situation: ‘Working musicians are already struggling to make ends meet in the streaming world, and now they have the added burden of trying to compete with a deluge of AI-generated noise.’ This powerful, united front underscores the urgent need for ethical AI development that respects and fairly compensates human creativity.

As we’ve journeyed through these compelling cases, it’s undeniably clear that the rise of AI is ushering in an era of unprecedented challenges for creative professionals and public figures alike. From the philosophical warnings of visionaries like Elon Musk and Stephen Hawking to the very real-world legal battles fought by Sarah Silverman and the Authors Guild, The New York Times, and Scarlett Johansson, and the widespread deepfake scares faced by Martin Lewis, MrBeast, and Tom Hanks, the stakes couldn’t be higher. The collective voice of over 200 artists through the Artist Rights Alliance, demanding fair treatment and protection, encapsulates the widespread anxiety and the urgent call for responsible innovation. This isn’t just about technology; it’s about safeguarding human ingenuity, protecting individual identities, and ensuring that the future of creativity remains vibrant, authentic, and unequivocally human. The conversation is far from over, and how we navigate these evolving threats will define the very fabric of our digital existence.