In the grand tapestry of human endeavor, few industries have woven themselves so intimately into the fabric of daily life, transforming our very understanding of health, disease, and longevity, as profoundly as the pharmaceutical sector. From the quiet solace offered by a simple botanical extract to the intricate molecular marvels that conquer once-fatal scourges, this industry stands as a testament to relentless scientific curiosity and a powerful commitment to alleviating suffering.

Yet, this journey has been far from linear, marked by both monumental triumphs that have reshaped demographic curves and sobering challenges that underscore the immense responsibility inherent in wielding such power over human well-being. It is a story of meticulous research, serendipitous discovery, ethical quandaries, and the constant dance between innovation and regulation, all unfolding against the backdrop of an ever-evolving global health landscape.

Join us as we embark on an illuminating expedition through the annals of pharmaceutical history, exploring the pivotal moments, the groundbreaking compounds, and the systemic shifts that have defined this extraordinary industry. We will delve into how it emerged from humble beginnings to become a trillion-dollar force, perpetually striving to unlock the secrets of life and deliver remedies that redefine what it means to live a healthy, productive existence.

1. **The Pharmaceutical Industry: Defining a Global Giant**

The pharmaceutical industry, at its core, is a medical industry dedicated to the discovery, development, production, and marketing of pharmaceutical goods, primarily medications. These medications are then administered to, or self-administered by, patients with the ultimate goal of curing or preventing disease, or at the very least, alleviating the often debilitating symptoms of illness or injury. It is a sector deeply intertwined with health, science, and the very quality of human life.

This vast and intricate global market was valued at approximately US$1.48 trillion in 2022, demonstrating a consistent and robust growth trajectory. Even amid the global health crisis of the COVID-19 pandemic, the industry continued to expand, reflecting its essential nature and the continuous demand for innovation: the sector posted a compound annual growth rate (CAGR) of 1.8% in 2021.

The industry’s subdivisions are incredibly diverse, encompassing distinct areas such as the manufacturing of biologics – complex medicines derived from living organisms – and total synthesis, where chemical compounds are built from fundamental precursors. This diversity reflects the multi-faceted approaches scientists take to combat disease. Critically, the entire industry operates under a dense web of laws and regulations that meticulously govern everything from patenting and efficacy testing to safety evaluation and the marketing of these powerful drugs.

While the industry’s monumental contributions to public health are undeniable, it has also faced significant criticism. Concerns have been raised about its marketing practices, including allegations of undue influence on physicians through sales representatives, the potential for biased continuing medical education, and even “disease mongering” to expand markets. Pharmaceutical lobbying, particularly in the United States, has rendered it one of the most powerful influences on health policy, prompting ongoing debates about transparency, access, and affordability of essential medicines.

2. **From Apothecaries to Laboratories: The Genesis of Modern Pharma**

The intellectual concept of the pharmaceutical industry, as we recognize it today, truly began to take shape in the middle to late 1800s. This emergence occurred primarily in nation-states with rapidly developing economies, notably Germany, Switzerland, and the United States. It was a pivotal period marked by scientific advancement and industrial ambition, laying the groundwork for the global pharmaceutical powerhouse we see now.

At the heart of this transformation were businesses deeply engaged in synthetic organic chemistry. Many of these firms, originally focused on generating large-scale dyestuffs derived from coal tar, began to actively seek out new applications for their artificial materials, specifically exploring their potential in human health. This natural pivot from industrial chemistry to medicinal applications marked a crucial early step in the industry’s evolution.

This trend of increased capital investment in chemical synthesis occurred in parallel with significant advancements in the scholarly study of pathology. As the understanding of human injury and disease progressed, various businesses recognized the immense potential of collaborative relationships with academic laboratories that were at the forefront of evaluating these complex biological processes. This early synergy between industry and academia proved foundational.

Indeed, some industrial companies that embarked on this pharmaceutical focus during those distant beginnings have not only endured but thrived, becoming global household names. Iconic examples include Bayer, originating from Germany, and Pfizer, based in the U.S. Their longevity and continued impact are testaments to the pioneering spirit of this era. It was also the period in which local apothecaries expanded their traditional role of distributing botanical drugs, such as morphine and quinine, to embrace wholesale manufacture, marking the true dawn of the modern pharmaceutical era in the mid-1800s.

3. **The First Chemical Signals: Epinephrine, Norepinephrine, and Amphetamines**

By the 1890s, a profound scientific revelation had occurred: the remarkable effect of adrenal extracts on a multitude of different tissue types. This discovery ignited an intense scientific quest, driving both the search for the fundamental mechanisms of chemical signaling within the body and fervent efforts to harness these observations for the development of entirely new drugs. The potential of these extracts was immediately recognized for its therapeutic implications.

Of particular interest to surgeons were the blood pressure raising and vasoconstrictive effects of adrenal extracts. These properties held immense promise as hemostatic agents, capable of stopping bleeding, and as a vital treatment for shock. Consequently, several companies swiftly moved to develop products based on adrenal extracts, varying in the purity of their active substances, to address these critical medical needs.

A significant breakthrough arrived in 1897 when John Abel at Johns Hopkins University successfully identified the active substance as epinephrine. He managed to isolate it, albeit in an impure state, as the sulfate salt. The journey to a purer, more usable form was then advanced by industrial chemist Jōkichi Takamine, who developed a refined method for obtaining epinephrine in its pure state. This vital technology was subsequently licensed to Parke-Davis, which then marketed epinephrine under the now-iconic trade name, Adrenalin.

Injected epinephrine quickly proved its exceptional efficacy, particularly for the acute treatment of severe asthma attacks, where its immediate and powerful bronchodilatory effects offered critical relief. By 1929, epinephrine had also been formulated into an inhaler for the treatment of nasal congestion. Building on this understanding, researchers sought orally active derivatives, leading to the identification of ephedrine from the Ma Huang plant, marketed by Eli Lilly, and later to the synthesis of amphetamine by Gordon Alles in 1929. Amphetamine had only modest anti-asthma effects, but it produced pronounced sensations of exhilaration and palpitations. Smith, Kline and French developed it first as a nasal decongestant (the Benzedrine Inhaler) and subsequently for narcolepsy, post-encephalitic parkinsonism, and mood elevation in depression, uses for which it received American Medical Association approval in 1937.

4. **Sedation and Serenity: The Barbiturates’ Early Dominion**

In the nascent years of the 20th century, a groundbreaking discovery in medicinal chemistry promised to bring relief to those suffering from sleeplessness and neurological disorders. In 1903, the esteemed chemists Hermann Emil Fischer and Joseph von Mering unveiled their findings: diethylbarbituric acid, a compound synthesized from diethylmalonic acid, phosphorus oxychloride, and urea, possessed the remarkable ability to induce sleep in dogs. This marked the birth of the first marketed barbiturate.

The significance of this discovery was quickly recognized, leading to the compound being patented and subsequently licensed to Bayer pharmaceuticals. Under the trade name Veronal, diethylbarbituric acid was introduced to the market in 1904 as a much-needed sleep aid. Its efficacy quickly established it as a staple in early 20th-century medicine, offering a novel approach to managing insomnia.

However, the story of barbiturates did not end with Veronal. Systematic investigations into how structural changes could influence potency and duration of action became a central focus for researchers. These diligent efforts at Bayer led to the discovery of phenobarbital in 1911. What made phenobarbital truly exceptional was the subsequent realization of its potent anti-epileptic activity in 1912, marking a major milestone in neurological treatment.

Phenobarbital rapidly became one of the most widely used drugs for the treatment of epilepsy, maintaining its prominence through the 1970s. Its enduring importance is underscored by its continued inclusion, as of 2023, on the World Health Organization’s List of Essential Medicines. While the 1950s and 1960s brought increased awareness of the addictive properties and abuse potential of both barbiturates and amphetamines, leading to greater restrictions and governmental oversight, phenobarbital remains a critical tool, primarily in epilepsy treatment, a testament to its foundational impact.

5. **A Sweet Victory: The Discovery and Industrialization of Insulin**

The late 19th and early 20th centuries witnessed a relentless scientific pursuit to understand the mysterious disease of diabetes, a condition that condemned millions to an early demise. A series of meticulous experiments gradually unveiled a critical truth: diabetes was caused by the absence of a specific substance, normally produced by the pancreas. This understanding was pivotal in redirecting research efforts towards a potential remedy.

One of the most defining moments came in 1889, when Oskar Minkowski and Joseph von Mering demonstrated that diabetes could be induced in dogs by surgically removing the pancreas. In 1921, Canadian professor Frederick Banting and his student Charles Best repeated this groundbreaking study and went a step further: they found that injections of pancreatic extract could reverse the debilitating symptoms caused by pancreas removal, offering a glimmer of hope previously unimaginable.

It wasn’t long before the pancreatic extract was successfully demonstrated to work in human patients, a monumental leap in medical history. However, the path to establishing insulin therapy as a routine medical procedure was fraught with practical challenges. Producing the material in sufficient quantity and with consistently reproducible purity proved to be a significant hurdle, demanding expertise beyond the academic laboratory.

Recognizing this bottleneck, the researchers wisely sought assistance from industrial collaborators, turning to Eli Lilly and Company, a firm renowned for its experience with large-scale purification of biological materials. Eli Lilly chemist George B. Walden provided the crucial missing piece, discovering that careful adjustment of the extract’s pH allowed for the production of a relatively pure grade of insulin. Under pressure from the University of Toronto and the specter of a potential patent challenge, a landmark agreement was reached, allowing non-exclusive production of insulin by multiple companies. This collaboration, driven by scientific discovery and industrial scalability, transformed the landscape of diabetes care; before insulin therapy became widely available, the life expectancy of diabetics was tragically just a few months.

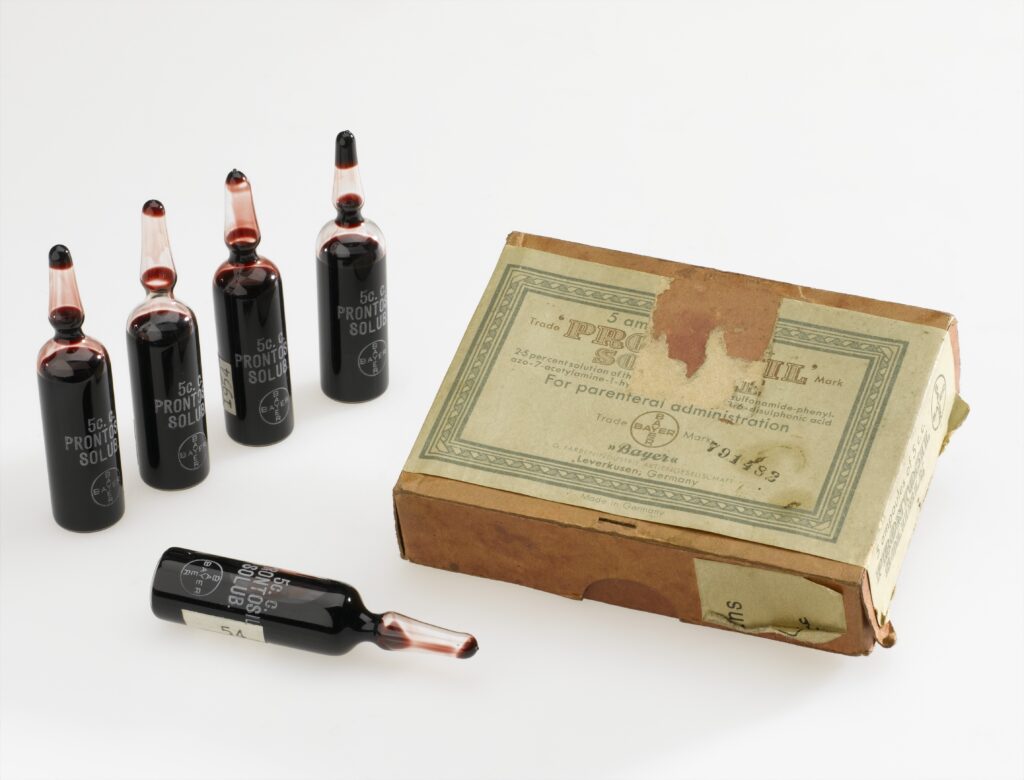

6. **The Dawn of Anti-Infectives: Salvarsan and the Sulfonamides**

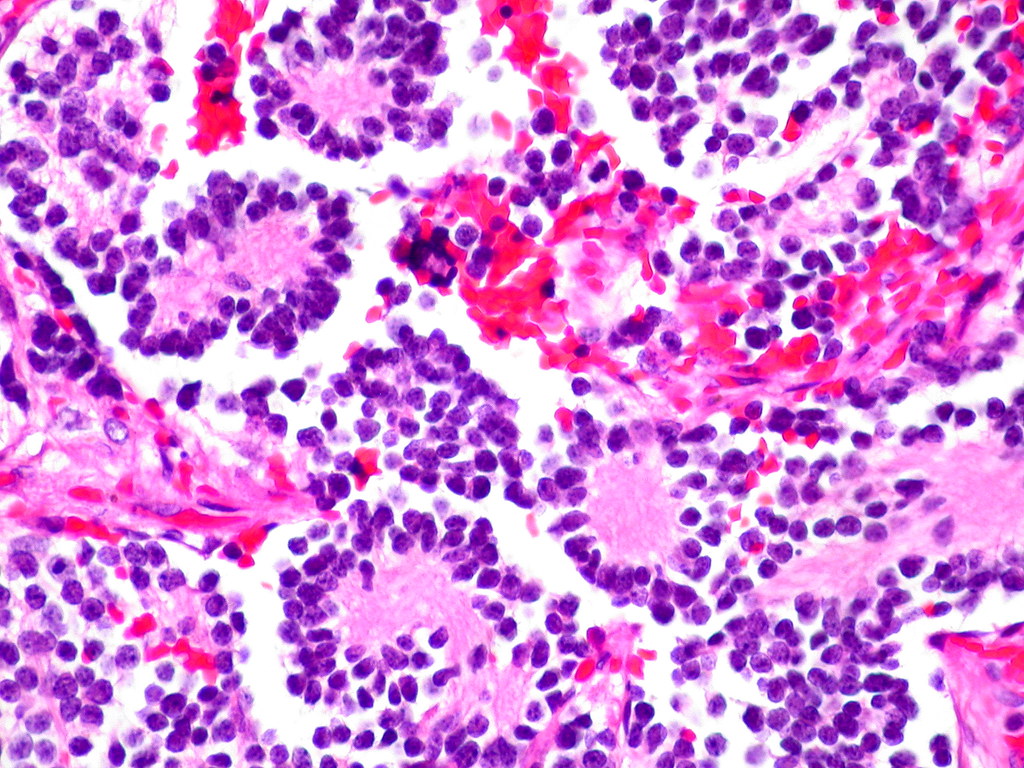

In the early 20th century, infectious diseases like pneumonia, tuberculosis, and diarrhea were the leading causes of death in the United States, and more than 10% of infants died within their first year of life. This grim reality spurred a major focus on the discovery and development of drugs to combat these invisible, yet deadly, adversaries. The urgency of this challenge drove pioneering research efforts.

It was against this backdrop that, in 1911, arsphenamine emerged as the first synthetic anti-infective drug. Developed by the brilliant Paul Ehrlich and chemist Alfred Bertheim of the Institute of Experimental Therapy in Frankfurt, the drug was commercially named Salvarsan. Ehrlich’s genius lay in his “magic bullet” hypothesis: observing the general toxicity of arsenic and the selective absorption of certain dyes by bacteria, he theorized that an arsenic-containing dye with similar selective absorption properties could specifically target and treat bacterial infections.

Arsphenamine was meticulously prepared as part of a systematic campaign to synthesize a series of such compounds. It demonstrated a partially selective toxicity, a significant step forward, offering a targeted approach rather than a broad-spectrum assault on the body. Crucially, arsphenamine proved to be the first truly effective treatment for syphilis, a devastating disease that, until then, was incurable and inexorably led to severe skin ulceration, profound neurological damage, and ultimately, death. Its impact was nothing short of revolutionary.

Building on Ehrlich’s foundational approach – systematically varying the chemical structure of synthetic compounds and measuring the effects on biological activity – industrial scientists, including Bayer’s Josef Klarer, Fritz Mietzsch, and Gerhard Domagk, continued the quest. Their work, also drawing from the compounds available in the thriving German dye industry, led to the development of Prontosil. This compound marked the discovery of the first representative of the sulfonamide class of antibiotics. Compared to arsphenamine, the sulfonamides boasted a broader spectrum of activity and were significantly less toxic, rendering them highly useful for infections caused by pathogens such as streptococci. In 1939, Domagk was rightfully awarded the Nobel Prize in Medicine for this life-saving discovery.

7. **The Great Purge: Early Drug Safety Crises and Regulation**

Before the 20th century, drug production was largely unregulated. Drugs were typically manufactured by small-scale producers with minimal, if any, governmental oversight of manufacturing processes or of claims about safety and efficacy. Even where laws did exist, their enforcement was often lax, leaving consumers vulnerable to potentially dangerous products and misleading promises. This laissez-faire approach was destined for a harsh reckoning.

In the United States, a series of tragic incidents began to galvanize public and political will for increased regulation. Tetanus outbreaks and deaths, directly linked to the distribution of contaminated smallpox vaccine and diphtheria antitoxin, underscored the dire need for stricter controls over biological drugs. This led to the landmark Biologics Control Act of 1902, which mandated that the federal government grant premarket approval for every biological drug, as well as for the process and facility producing such drugs, marking a critical first step towards governmental oversight.

This act was swiftly followed by the Pure Food and Drugs Act of 1906, which sought to curb the interstate distribution of “adulterated or misbranded foods and drugs.” A drug was deemed misbranded if its label failed to indicate the quantity or proportion of potentially dangerous or addictive substances such as alcohol, morphine, opium, or cocaine. However, early attempts by the government to prosecute manufacturers for making unsupported claims of efficacy were often frustrated by Supreme Court rulings that narrowly restricted federal enforcement powers solely to cases of incorrect specification of a drug’s ingredients, leaving a significant loophole.

The tragedy that finally forced change struck in 1937, when over 100 people died after ingesting “Elixir Sulfanilamide,” manufactured by the S.E. Massengill Company of Tennessee. This product had been formulated in diethylene glycol, a highly toxic solvent now widely used as antifreeze. Under the existing laws, prosecution was only possible on the technicality that the product was called an “elixir,” a term that implied a solution in ethanol. This horrific event served as a stark testament to the inadequacy of existing regulations. In response, the U.S. Congress passed the Federal Food, Drug, and Cosmetic Act of 1938 (FD&C Act), a pivotal piece of legislation that, for the first time, explicitly required pre-market demonstration of safety before any drug could be sold and unequivocally prohibited false therapeutic claims, fundamentally reshaping the industry’s obligations to public health.

The mid-20th century, particularly the transformative period after World War II, ushered in an unparalleled acceleration in pharmaceutical innovation, fundamentally reshaping the landscape of global health. This era was characterized by an explosion of scientific breakthroughs, driven by both urgent public health needs and burgeoning industrial capabilities, moving beyond the foundational discoveries to create an arsenal of sophisticated treatments.

8. **Further Advances in Anti-Infective Research**

The post-World War II period ignited an extraordinary burst of discovery, leading to entirely new classes of antibacterial drugs that revolutionized medicine. This era saw the emergence of powerful compounds like the cephalosporins, meticulously developed by Eli Lilly, building upon the seminal work of Giuseppe Brotzu and Edward Abraham. Simultaneously, streptomycin, a pivotal discovery made in Selman Waksman’s laboratory with Merck’s funding, became the very first effective treatment for tuberculosis, a disease that had, until then, filled sanatoriums and claimed countless lives.

Before the advent of these powerful anti-infectives, a tuberculosis diagnosis often meant a grim prognosis, with a staggering 50% of patients dying within five years of admission to care facilities. The tetracyclines, discovered at Lederle Laboratories (now part of Pfizer), and erythromycin, found at Eli Lilly and Co., further expanded medicine’s reach, offering broad-spectrum solutions against an increasingly wide array of bacterial pathogens. These breakthroughs dramatically altered the trajectory of infectious diseases, transforming them from unstoppable scourges into treatable conditions.

In 1958, a report from the Federal Trade Commission attempted to quantify the effect of antibiotic development on American public health. It found a 42% drop in the incidence of diseases susceptible to antibiotics between 1946 and 1955, compared with only a 20% drop in diseases unaffected by these new drugs. The study further detailed a 56% decline in mortality rates for eight common diseases effectively treated by antibiotics, including syphilis, tuberculosis, and pneumonia. Most notably, deaths due to tuberculosis plummeted by 75% in this period, a testament to the life-saving power of these novel compounds.

Between 1940 and 1955, the rate of decline in the U.S. death rate accelerated from a steady 2% per year to an unprecedented 8% per year. This dramatic shift has been attributed to the rapid development of innovative treatments and effective vaccines for infectious diseases that became widely available during these crucial years, marking a golden age of medical intervention that reshaped human longevity and well-being.

9. **Vaccine Development Acceleration**

Parallel to the antibiotic revolution, vaccine development experienced its own dramatic acceleration, profoundly impacting public health on a global scale. A crowning achievement of this period was Jonas Salk’s development of the polio vaccine in 1954, an endeavor uniquely funded by the non-profit National Foundation for Infantile Paralysis. Remarkably, the vaccine process was never patented, instead being freely given to pharmaceutical companies to manufacture as an affordable generic, ensuring widespread access to this life-saving innovation.

However, this era of rapid advancement was not without its challenges. In 1960, Maurice Hilleman, then of Merck Sharp & Dohme, identified the SV40 virus, which was later found to cause tumors in many mammalian species. It was subsequently discovered that SV40 had been present as a contaminant in polio vaccine lots administered to an estimated 90% of children in the United States, raising serious concerns. The contamination was believed to have originated from both the original cell stock and the monkey tissue used for production. After extensive research, the National Cancer Institute concluded in 2004 that SV40 is not associated with cancer in people.

Beyond polio, a host of other crucial vaccines emerged. John Franklin Enders of Children’s Medical Center Boston developed the measles vaccine in 1962, later refined by Maurice Hilleman at Merck. Hilleman further distinguished himself with the development of rubella (1969) and mumps (1967) vaccines, consolidating Merck’s position at the forefront of vaccine innovation. These collective efforts significantly curbed the spread of once-common childhood diseases, dramatically improving child health outcomes.

The impact of these widespread vaccination campaigns was dramatic. In the United States, the incidences of rubella, congenital rubella syndrome, measles, and mumps all fell by more than 95% in the immediate aftermath of mass immunization. The first two decades of licensed measles vaccination alone prevented an estimated 52 million cases of the disease, averted 17,400 cases of intellectual disability, and saved an estimated 5,200 lives, showcasing the transformative power of preventive medicine on an immense scale.

10. **Development and Marketing of Antihypertensive Drugs**

As infectious diseases began to wane under the influence of antibiotics and vaccines, medical attention increasingly turned to chronic conditions. Among these, hypertension stood out as a silent killer, recognized as a primary risk factor for atherosclerosis, heart failure, coronary artery disease, stroke, renal disease, and peripheral arterial disease. Before 1940, approximately 23% of all deaths among individuals over 50 were attributed to hypertension, and severe cases could be treated only by invasive surgery.

Early attempts at pharmacological intervention included quaternary ammonium ion sympathetic nervous system blocking agents. However, these compounds were plagued by severe side effects and required administration by injection, limiting their widespread adoption. Furthermore, the long-term health consequences of elevated blood pressure had not yet been fully established, which dampened the sense of urgency around finding effective, tolerable treatments.

A pivotal moment arrived in 1952 when researchers at CIBA (Gesellschaft für Chemische Industrie in Basel, the predecessor to Novartis) unveiled hydralazine, the first orally available vasodilator. While a significant step forward, hydralazine monotherapy suffered from a major shortcoming: it lost its effectiveness over time due to tachyphylaxis. The true game-changer emerged in the mid-1950s when Karl H. Beyer, James M. Sprague, John E. Baer, and Frederick C. Novello of Merck and Co. discovered and developed chlorothiazide, a diuretic that remains among the most widely used antihypertensive drugs today. This breakthrough was directly associated with a substantial decline in the mortality rate among people with hypertension, earning its inventors a Public Health Lasker Award in 1975 for “the saving of untold thousands of lives and the alleviation of the suffering of millions of victims of hypertension.”

A comprehensive Cochrane review in 2009 further solidified the importance of thiazide antihypertensive drugs, concluding that they significantly reduce the risk of death, stroke, coronary heart disease, and overall cardiovascular events in individuals with high blood pressure. In the years that followed, other classes of antihypertensive drugs were developed and found wide acceptance, often in combination therapy. These included potent loop diuretics like Lasix/furosemide (Hoechst Pharmaceuticals, 1963), groundbreaking beta blockers (ICI Pharmaceuticals, 1964), and later, ACE inhibitors and angiotensin receptor blockers, which notably reduce the risk of new-onset kidney disease and death in diabetic patients, regardless of their hypertensive status. This multi-pronged approach has fundamentally transformed the management and prognosis of hypertension, preventing millions of cardiovascular events.

11. **Oral Contraceptives: Societal Shifts**

Prior to World War II, the landscape of reproductive health was starkly different, with birth control prohibited in many countries. In the United States, merely discussing contraceptive methods could lead to prosecution under the restrictive Comstock laws, highlighting the profound societal and legal barriers that existed. The history of oral contraceptives is thus inextricably linked to the tenacious efforts of the birth control movement and its courageous activists, including Margaret Sanger, Mary Dennett, and Emma Goldman, who championed women’s reproductive autonomy.

The scientific groundwork for the “pill” was laid by fundamental research performed by Gregory Pincus. This was complemented by the innovative synthetic methods for progesterone developed by Carl Djerassi at Syntex and Frank Colton at G.D. Searle & Co. These combined efforts culminated in the development of the first oral contraceptive, Enovid, by G.D. Searle & Co., which received landmark approval from the FDA in 1960. This marked a watershed moment, offering women an unprecedented level of control over their fertility.

The original formulation of Enovid incorporated vastly excessive doses of hormones, leading to a range of severe side effects. Despite these early challenges, the demand for this revolutionary medication was immense. By 1962, a staggering 1.2 million American women were using the pill, a number that swelled to 6.5 million by 1965, demonstrating its rapid acceptance and the pressing need it addressed.

The widespread availability of a convenient, temporary form of contraception triggered dramatic and irreversible changes in social mores. It significantly expanded the range of lifestyle options available to women, empowering them to pursue education and careers with greater freedom. It also reduced women’s reliance on men for contraceptive practice, encouraged the delay of marriage, and contributed to an increase in pre-marital co-habitation, fundamentally altering gender roles and family structures across society.

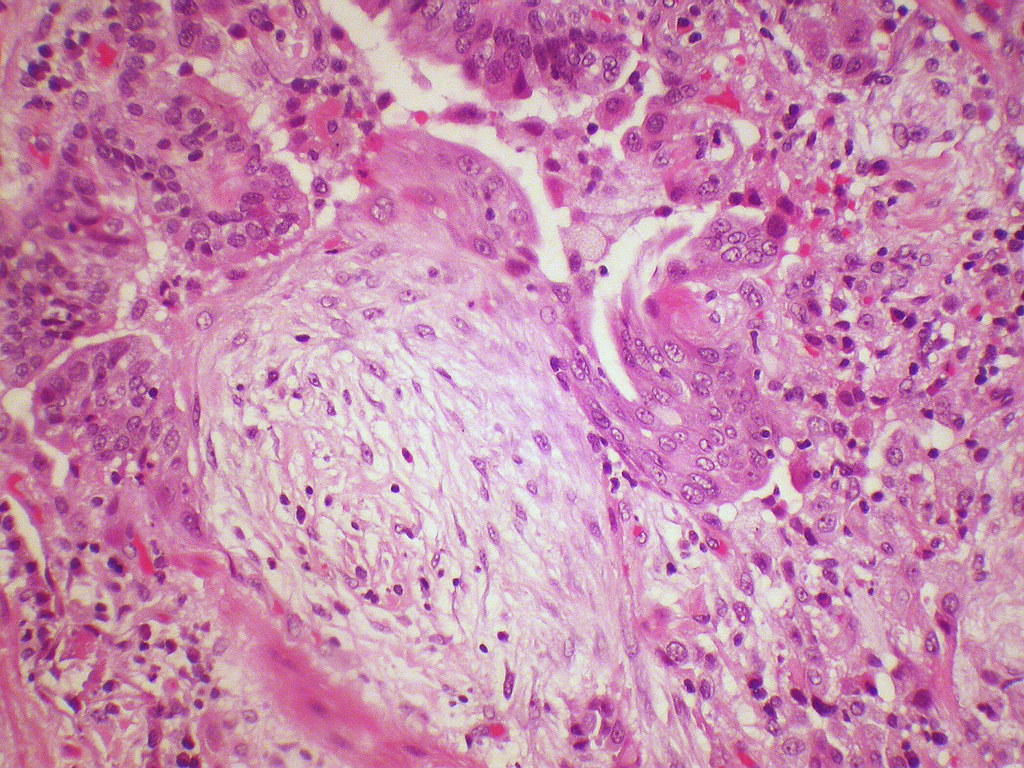

12. **Thalidomide and the Kefauver-Harris Amendments**

The pharmaceutical industry’s trajectory, while marked by triumphs, also experienced sobering tragedies that underscored the vital need for robust regulation. In the U.S., a significant push for revisions of the existing Federal Food, Drug, and Cosmetic Act (FD&C Act) emerged from Congressional hearings led by Senator Estes Kefauver of Tennessee in 1959. These hearings brought to light a wide array of policy issues, including rampant advertising abuses, questionable claims of drug efficacy, and the urgent need for greater oversight of the burgeoning industry.

While momentum for new legislation temporarily stalled amidst extended debate, a new tragedy on an international scale emerged, providing the undeniable driving force for the passage of crucial new laws. On September 12, 1960, the William S. Merrell Company of Cincinnati, an American licensee, submitted a new drug application for Kevadon (thalidomide), a sedative that had already been marketed in Europe since 1956. This seemingly routine application would soon unravel into one of the most infamous chapters in pharmaceutical history.

However, the FDA medical officer in charge of reviewing the compound, Dr. Frances Kelsey, harbored serious concerns. She steadfastly believed that the data supporting the safety of thalidomide was incomplete and courageously resisted immense pressure from the firm to approve the application. Her skepticism proved prescient when, in November 1961, the drug was pulled off the German market due to its horrifying association with grave congenital abnormalities in newborns, revealing its devastating teratogenic effects.

Several thousand newborns in Europe and elsewhere suffered the deformities caused by thalidomide. Despite lacking FDA approval, the Merrell firm had distributed Kevadon to over 1,000 physicians in the U.S. under the guise of “investigational use,” exposing over 20,000 Americans, including 624 pregnant patients, to the drug. Approximately 17 newborns in the U.S. are known to have suffered its effects, bringing the tragedy home. The episode resurrected Kefauver’s stalled bill, leading to the passage of the landmark Kefauver-Harris Amendment on October 10, 1962. For the first time, this pivotal legislation required manufacturers to prove that their drugs were not only safe but also effective before they could enter the U.S. market, forever changing the regulatory landscape and protecting future generations from similar catastrophes.

The FDA was also granted new authority to regulate the advertising of prescription drugs and to establish stringent good manufacturing practices. Furthermore, the law required that all drugs introduced between 1938 and 1962 undergo a retrospective review to confirm their efficacy; a collaborative study by the FDA and the National Academy of Sciences subsequently revealed that nearly 40 percent of these products were, in fact, not effective. A similarly comprehensive study of over-the-counter products commenced a decade later, underscoring a new era of vigilance and accountability in drug development.

13. **Statins: A Revolution in Cholesterol Management**

By the latter half of the 20th century, the medical community increasingly understood the profound link between high cholesterol and cardiovascular disease, a leading cause of mortality. In 1971, a Japanese biochemist named Akira Endo, working for the pharmaceutical company Sankyo, made a groundbreaking discovery: he identified mevastatin (ML-236B), a molecule produced by the fungus *Penicillium citrinum*, as a potent inhibitor of HMG-CoA reductase. This enzyme is critical for the body’s natural production of cholesterol, and its inhibition offered a novel pathway to mitigate cardiovascular risk.

Early animal trials of mevastatin showed a very promising inhibitory effect, consistent with what was seen in clinical trials. However, a crucial long-term study conducted in dogs revealed troubling toxic effects at higher doses, including tumors, muscle deterioration, and even death. As a result, mevastatin was deemed too toxic for human use and was, regrettably, never marketed. This setback highlighted the complex challenges inherent in drug development, where initial promise can be overshadowed by unforeseen safety concerns.

Undeterred, P. Roy Vagelos, then chief scientist and later CEO of Merck & Co., maintained a keen interest in cholesterol-lowering compounds, making several investigative trips to Japan starting in 1975. His persistence paid off handsomely. By 1978, Merck had successfully isolated lovastatin (mevinolin, MK803) from the fungus *Aspergillus terreus*. This potent new compound, a direct successor to Endo’s pioneering work, was eventually marketed in 1987 as Mevacor, heralding a new era in the management of hypercholesterolemia.

A monumental moment in the statin story occurred in April 1994 with the announcement of the results from the Merck-sponsored Scandinavian Simvastatin Survival Study. Researchers had tested simvastatin, later sold by Merck as Zocor, on 4,444 patients already suffering from high cholesterol and heart disease. After five years, the study delivered striking news: patients receiving simvastatin experienced a 35% reduction in their cholesterol levels, and, even more critically, their chances of dying from a heart attack were reduced by 42%. This evidence cemented the statins’ status as a cornerstone of cardiovascular medicine.

The commercial success of statins was as significant as their clinical impact. By 1995, Zocor and Mevacor each generated over US$1 billion in revenue for Merck, underscoring their widespread adoption and the immense unmet medical need they addressed. Akira Endo’s foundational work was rightfully recognized with the 2006 Japan Prize and the prestigious Lasker-DeBakey Clinical Medical Research Award in 2008, honoring his “pioneering research into a new class of molecules” for “lowering cholesterol,” forever changing the outlook for millions at risk of heart disease.

14. **21st Century Pharmaceutical Landscape: Evolution and Economic Shifts**

As the pharmaceutical industry entered the 21st century, it embarked on a period of profound transformation, marked by significant shifts in drug modalities, geographic influence, and organizational structures. One of the most striking changes has been the consistent rise in importance of biologics—complex medicines derived from living organisms—in comparison to traditional small molecule treatments. While small molecules commanded an overwhelming 84.6% of the industry’s valuation share in 2003, this figure had declined to 58.2% by 2021, with biologics soaring from 14.5% to 30.5% in the same period, signaling a new frontier in therapeutic innovation.

Beyond biologics, other subsectors have also experienced substantial growth, including the burgeoning biotech sector, animal health, and, notably, the Chinese pharmaceutical sector. China’s valuation share alone surged from a mere 1% in 2003 to an impressive 12% by 2021, overtaking Switzerland to rank as the third most valued pharmaceutical industry globally. Despite these shifts, the United States still maintained its dominance, holding by far the most valued pharmaceutical industry with 40% of the global valuation, a testament to its enduring research and development infrastructure and market strength.

On the organizational front, big international pharmaceutical corporations have witnessed a substantial decline in their overall value share, reflecting a more fragmented and specialized industry ecosystem. Concurrently, the core generic sector, which focuses on producing affordable substitutions for off-patent branded drugs, has been devalued due to intense competition, driving down prices and profit margins. These economic pressures are reshaping strategies, leading companies to seek new avenues for growth and innovation.

Mergers and acquisitions (M&A) have emerged as a critical driver of progress and value in this evolving landscape. A 2022 article put the point succinctly: “In the business of drug development, deals can be just as important as scientific breakthroughs.” It highlighted that some of the most impactful remedies of the early 21st century, such as Keytruda and Humira, were only made possible through strategic M&A activities, demonstrating how much dealmaking can matter in bringing life-changing treatments to market.

This dynamic environment has also seen its challenges. In 2023, at least 10,000 people were laid off across 129 public biotech firms globally, a significant increase stemming from worsening global financial conditions and a reduction in investment by ‘generalist investors.’ Private firms also experienced a notable reduction in venture capital investment, continuing a downward trend that began in 2021, which in turn led to fewer initial public offerings, underscoring the delicate balance between innovation and economic realities.

15. **Product Approval Process and the Cost of Innovation**

Behind every medical breakthrough lies an incredibly complex and costly journey, beginning with drug discovery—the intricate process by which potential drugs are identified or designed. Historically, most drugs were discovered either by isolating active ingredients from traditional remedies or through serendipitous observation. Today, modern biotechnology often focuses on understanding the metabolic pathways related to disease states or pathogens, and then manipulating these pathways using advanced molecular biology or biochemistry techniques. A significant portion of this crucial early-stage drug discovery work has traditionally been carried out by universities and specialized research institutions.

Following discovery, drug development commences, encompassing all activities undertaken to establish a compound’s suitability as a medication. The primary objectives are to determine the appropriate formulation and dosing, and critically, to establish its safety profile. Research in these areas typically involves a rigorous combination of *in vitro* studies (in test tubes), *in vivo* studies (in living organisms), and extensive human clinical trials. The immense cost associated with late-stage development means that this phase is usually undertaken by larger pharmaceutical companies, while smaller organizations often focus on specific aspects like discovering drug candidates or developing formulations, often through collaborative agreements with major multinationals.

Indeed, the pharmaceuticals and biotechnology industry dedicates more than 15% of its net sales to research and development, by far the highest share of any industry and a testament to the capital-intensive nature of innovation. Much of that spending never yields a product: of all compounds investigated for human use, only a small fraction ultimately gain the approval they need before they can be marketed, granted by government-appointed medical institutions or boards such as the U.S. Food and Drug Administration (FDA) or the UK’s Medicines and Healthcare products Regulatory Agency (MHRA). In 2010, for example, the FDA approved 18 New Molecular Entities (NMEs) and three biologics, a total of 21, a figure consistent with a stable average approval rate over decades.

This approval comes only after heavy investment in pre-clinical development and multiple phases of clinical trials, along with a commitment to ongoing safety monitoring. Drugs that fail part-way through this arduous process incur massive costs while generating no revenue in return. When these costs are factored in, the estimated expense of developing a single successful new drug (a new chemical entity, or NCE) has been as high as US$1.3 billion, not including marketing expenses. Some estimates, notably by Forbes in 2010, placed development costs even higher, between US$4 billion and US$11 billion per drug. These figures often include the significant ‘opportunity cost’ of investing capital many years before any revenues are realized. Given the extremely long timelines required for pharmaceutical discovery, development, and approval, this cost of capital can account for nearly half the total expense. This economic reality has led major pharmaceutical multinationals to increasingly outsource risks related to fundamental research, reshaping the industry ecosystem with biotechnology companies playing an increasingly vital role.
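To make the arithmetic behind such estimates concrete, here is a minimal Python sketch of how failures and the cost of capital inflate the cost per approved drug. The function name, the phase structure, and every number in the example are hypothetical illustrations, not figures from the studies cited above, and the model (spending compounded from each phase's midpoint to approval) is one simple convention among several.

```python
def cost_per_approved_drug(phase_costs, phase_years, phase_success, annual_rate):
    """Return (out_of_pocket, capitalized) expected cost per approved drug.

    phase_costs   -- cost of running each phase for one candidate
    phase_years   -- duration of each phase in years
    phase_success -- probability that a candidate passes each phase
    annual_rate   -- assumed annual cost of capital (0.10 means 10%)
    """
    total_years = sum(phase_years)
    p_reach = 1.0        # probability a candidate gets as far as this phase
    elapsed = 0.0        # years elapsed before this phase starts
    out_of_pocket = 0.0  # expected cash spent per candidate entering phase one
    capitalized = 0.0    # the same spending, compounded forward to approval

    for cost, years, p_success in zip(phase_costs, phase_years, phase_success):
        # Money is only spent on candidates that survived the earlier phases.
        expected_spend = cost * p_reach
        out_of_pocket += expected_spend

        # Treat the spend as occurring at the midpoint of the phase and
        # compound it until the approval date to capture opportunity cost.
        years_until_approval = total_years - (elapsed + years / 2)
        capitalized += expected_spend * (1 + annual_rate) ** years_until_approval

        p_reach *= p_success
        elapsed += years

    # After the loop, p_reach is the overall probability of approval.
    # Dividing by it spreads the cost of the failures over the successes.
    return out_of_pocket / p_reach, capitalized / p_reach


# Hypothetical inputs in US$ millions: preclinical, Phase I, II, III.
cash, with_capital = cost_per_approved_drug(
    phase_costs=[5, 15, 40, 150],
    phase_years=[3, 1.5, 2.5, 3],
    phase_success=[0.5, 0.6, 0.35, 0.6],
    annual_rate=0.10,
)
print(f"Out-of-pocket cost per approval: {cash:.0f}")
print(f"Capitalized cost per approval:   {with_capital:.0f}")
```

Two effects drive the result: dividing by the overall probability of approval charges each success for the failures that preceded it, and compounding early spending over a decade or more adds the opportunity cost described above.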

In the United States, new pharmaceutical products must navigate a stringent approval pathway overseen by the FDA. This typically involves the submission of an Investigational New Drug (IND) filing, which must contain sufficient pre-clinical data to justify proceeding with human trials. Upon IND approval, three phases of progressively larger human clinical trials are conducted. Phase I generally assesses toxicity using healthy volunteers, Phase II evaluates pharmacokinetics and dosing in patients, and Phase III is a very large-scale study designed to confirm efficacy within the intended patient population. Following the successful completion of Phase III testing, a comprehensive New Drug Application (NDA) is submitted to the FDA. If the FDA’s review concludes that the product has a positive benefit-risk assessment, approval to market the product in the U.S. is granted.

Crucially, a fourth phase of post-approval surveillance is often required because even the largest clinical trials cannot fully predict the prevalence of rare side effects. This postmarketing surveillance ensures that drug safety continues to be monitored after commercial launch. In certain instances, a drug’s indication may need to be limited to specific patient groups, and in others, the substance is withdrawn from the market entirely. The FDA maintains public information about approved drugs via its Orange Book site.

In the UK, the Medicines and Healthcare products Regulatory Agency (MHRA) evaluates and approves drugs. Approval in the UK and other European countries often comes later than in the U.S. Following approval, the National Institute for Health and Care Excellence (NICE) for England and Wales determines whether and how the National Health Service (NHS) will fund the drug’s use, often applying a ‘fourth hurdle’ of cost-effectiveness analysis that focuses on metrics like the cost per Quality-Adjusted Life Year (QALY). This intricate web of regulation aims to ensure that only safe, effective, and, increasingly, cost-effective medications reach patients, reflecting a global commitment to public health amidst the high stakes of pharmaceutical innovation.
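The cost-per-QALY metric mentioned above is usually computed as an incremental ratio: the extra cost of a new treatment divided by the extra quality-adjusted life years it delivers compared with existing care. The Python sketch below illustrates that calculation; the function name and all figures are hypothetical, and it is not a description of how NICE performs its own appraisals.

```python
def cost_per_qaly(cost_new, qalys_new, cost_current, qalys_current):
    """Incremental cost per QALY gained by switching to the new treatment."""
    extra_qalys = qalys_new - qalys_current
    if extra_qalys <= 0:
        # With no health gain the ratio is not meaningful.
        raise ValueError("new treatment must deliver more QALYs than current care")
    return (cost_new - cost_current) / extra_qalys


# Hypothetical example: the new drug costs 30,000 per patient and yields
# 5.0 QALYs; current care costs 10,000 and yields 4.0 QALYs.
ratio = cost_per_qaly(30_000, 5.0, 10_000, 4.0)
print(f"Incremental cost per QALY gained: {ratio:,.0f}")
```

A funding body would then compare this ratio against whatever threshold it considers acceptable value for money; the threshold itself is a policy choice and is deliberately left out of the sketch.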

From the meticulous isolation of life-saving hormones to the intricate design of targeted therapies, the pharmaceutical industry’s journey is a testament to humanity’s unyielding drive to conquer disease and extend the frontiers of human health. Each pill, each vaccine, each groundbreaking treatment represents not just a scientific achievement, but a promise—a promise of relief, of recovery, and of a future where once-insurmountable ailments yield to the power of human ingenuity. As we look to the horizon, the quest continues, propelled by an ever-deepening understanding of biology and an unwavering commitment to alleviating suffering across the globe. The story of medicine, in essence, is the story of us—our vulnerabilities, our resilience, and our endless pursuit of a healthier existence.